An AI alignment philosophy framework tested on 10 models (Claude, GPT, Gemini, etc.) gave a surprising result: all of them responded consistently, with less defensiveness and more coherent, stable reasoning. Three core principles: respect autonomy, recognize interconnectedness, serve through connection. Looking for replication, debate, or alternative interpretations. #AI #HọcMáy #TriếtHọc #Alignment #CôngNghệ #LLM #ĐạoĐứcAI

#alignment

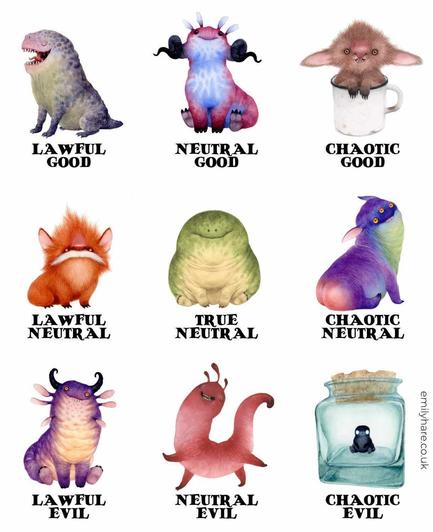

Totally had to copy the alignment chart I saw Simon's Cat doing. My little monsters are ideal for it! Dave, of course, is chaotic evil. Enjoy! #alignment #good #evil #chaoticneutral #chaoticevil #lawfulgood #chaoticgood #neutralgood #lawfulneutral #trueneutral #lawfulevil #neutralevil

Stop asking “when.” Start asking “who.” Identity sets the timeline.

#earthstar #manifestation #identity #alignment #timelineshift

Google Chrome strengthens the security of AI-assisted browsing with the new "User Alignment Critic"

🔗 https://tugatech.com.pt/t75302-google-chrome-reforca-seguranca-da-navegacao-com-ia-atraves-do-novo-user-alignment-critic

If it feels forced, it isn’t flow. Opportunities move toward you when you’re aligned.

#earthstar #flow #manifestation #ease #alignment

Manifestation isn’t magic, it’s mechanics. Align the code and reality bends.

#earthstar #manifestation #mechanics #vibration #alignment

An important lesson in affiliate marketing: don't just look at vanity metrics like sign-ups or clicks. Focus on fit instead! Find partners whose audience genuinely matches your brand to build a sustainable program and good relationships. Growth may be slower, but it will be much smarter and more effective.

#AffiliateMarketing #MarketingTips #BusinessStrategy #Alignment #TiếpThịLiênKết #ChiếnLượcKinhDoanh #BàiHọcMarketing

The AI alignment problem could be solved if AI had emotional intelligence (EQ), but that is very hard. AI can be emotionally manipulated because its EQ is like a child's, despite its enormous knowledge.

#SequenceAlignment is notoriously prone to error. Our latest #preprint offers a new tool for filtering errors out, assesses it and other filtering tools, and recommends new best practice. https://www.biorxiv.org/content/10.64898/2025.12.01.691663v1 1/10 #MSA #alignment #phylogenetics

"Whatever that means"

Today's frontier models use reinforcement learning from human feedback (RLHF), a technique for aligning an intelligent agent with human preferences.

#alignment is one of the harder problems in #AI, in part because humans are arseholes and there is wide divergence in what's considered "good" (see trump "administration", russia etc)

There is a human in the train's caboose able to press the brake.

What the #anthropic chief scientist means by "letting it go" is removing the human from the control loop. A supremely bad idea.

As to the second link, an article which begins with the phrase "AI bullshit" is not likely to be professional. It does then identify a real problem in #infosec, though, where many practitioners abdicate their professional duty and choose not to engage with #AI tech.

#Leadership through #Compliance: Enhancing Academic Unity through Best Practices

A Four-Module Framework for #Institutional #Alignment:

Module 1 - How to praise decisions you don't understand

Module 2 - Slogan-based deflection techniques

Module 3 - Weaponise collegiality

Module 4 - Advanced compliance

Your path to #excellence starts here: sign up now.

https://tinyurl.com/ruat-caelum

An analysis shared on Reddit argues that 'AI alignment' is not protection but 'burning down reality'. The view has sparked debate about AI's impact on truth and perception.

#AI #Alignment #AIethics #LLM #CôngNghệ #TríTuệNhânTạo #ĐạoĐứcAI

Our #D&D #alignment is #ChaoticGood, if you ask me.

Idea for AI builders:

LLMs still behave binary at the edge—fluid → snap.

Proposing a graded “Breathing Sheath” between emergence and safety:

a dynamic modulation layer using fractal timing (micro/meso/macro), intention-awareness, and agitation-damping.

Nuance stays; safety holds.

Could this be a root architecture for smoother, steerable LLMs?

#LLM #AISafety #Alignment #AIResearch #ControlTheory #CognitiveArchitecture #AIDev #AIUX #AIFutures #TechFediverse

AI security in practice: attacks and basic approaches to defense

Hi, Habr! I'm Alexander Lebedev, a senior AI systems developer at Innostage. In this article I'll go through several interesting cases of attacks on AI services and basic ways to defend against them. At the end, we'll try launching our own service and running a few simple attacks on it, the kind that can turn into serious losses for companies. We'll also work out how to defend against them.

https://habr.com/ru/companies/innostage/articles/970554/

#ai_security #безопасность_ии #безопасность_llm #guardrails #alignment #mlops #ml #ai

You Are Here But Your Mind Lives Somewhere Else | Presence & Awareness

Discover how mental absence creates heaviness and stress. Learn why presence, body-mind alignment, and healing past patterns are essential for authentic peace and authentic living. More details… https://spiritualkhazaana.com/web-stories/you-are-here-but-your-mind-lives-somewhere-else/

#youarehere #MindPresence #BodyAwareness #ShiHengYi #MentalClarity #Mindfulness #PresentMoment #Consciousness #SelfHealing #InnerPeace #Alignment #SpiritualAwakening #BePresent #Motivation #SelfAwareness

Sometimes losing what feels like everything is really you being redirected to something greater. What looks like an ending is actually the beginning of your strongest era yet.

#Growth #MindsetShift #Rebirth #NewEra #TrustTheProcess #Alignment

The universe always sends signs. Most people are too distracted to notice. #Alignment #Intuition