The remarkable energy efficiency of the human brain: One #Spike Every 6 Seconds!

In the groundbreaking paper "The Cost of Cortical Computation", published in Current Biology in 2003, neuroscientist Peter Lennie reached a striking conclusion about neural activity in the human brain: the average firing rate of cortical neurons is only about 0.16 Hz, or roughly one spike every 6 seconds.

This finding challenges conventional assumptions about neural activity and reveals the extraordinary energy efficiency of the brain's computational strategy. How unconventional is it? Ask an LLM, and it will more likely point to a baseline frequency somewhere between 0.1 Hz and 10 Hz. Pretty high and vague, right? So how did Lennie arrive at this remarkable figure?

The Calculation Behind the 0.16 Hz Baseline Rate

Lennie's analysis combines several critical factors:

1. Energy Constraints Analysis

Starting from the brain's known energy consumption (about 20% of the body's resting energy budget, even though the brain makes up only about 2% of body weight), Lennie worked backward to estimate how many action potentials this energy could reasonably support.

2. Precise Metabolic Costs

His calculations incorporated detailed metabolic requirements:

- Each action potential consumes approximately 3.84 × 10⁹ ATP molecules

- The human brain uses about 5.7 × 10²¹ ATP molecules per minute
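To put the per-spike cost in more familiar units, this short Python sketch converts an ATP count into joules. It assumes that roughly 50 kJ of free energy is released per mole of ATP hydrolysed. That is a typical order of magnitude under cellular conditions, not a value taken from Lennie's paper. The names `atp_to_joules` and `KJ_PER_MOL_ATP` are illustrative.

```python
# Convert ATP molecule counts into energy (joules).
# Assumption: ~50 kJ released per mole of ATP hydrolysed; the true value
# depends on cellular conditions and is NOT taken from the source paper.

AVOGADRO = 6.02214076e23   # molecules per mole (exact SI value)
KJ_PER_MOL_ATP = 50.0      # assumed free energy per mole of ATP

def atp_to_joules(n_atp: float, kj_per_mol: float = KJ_PER_MOL_ATP) -> float:
    """Energy (J) released by hydrolysing n_atp molecules of ATP."""
    moles = n_atp / AVOGADRO
    return moles * kj_per_mol * 1_000.0

ATP_PER_SPIKE = 3.84e9     # per-spike cost quoted in the text

spike_energy = atp_to_joules(ATP_PER_SPIKE)
print(f"energy per spike: {spike_energy:.2e} J")  # energy per spike: 3.19e-10 J
```

Under this assumption, a single spike costs on the order of a third of a nanojoule.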

3. Neural Architecture

The analysis factored in essential neuroanatomical data:

- The human cerebral cortex contains roughly 10¹⁰ neurons

- Each neuron forms approximately 10⁴ synaptic connections

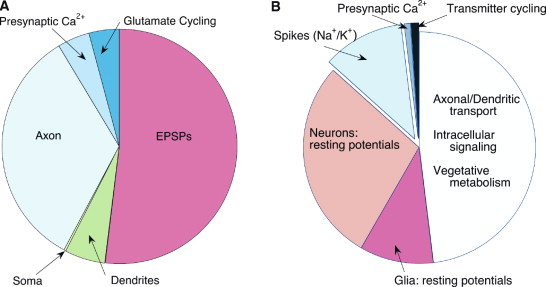
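Scaling these per-neuron figures up to the whole cortex shows how large the signalling load is, even at a low firing rate. This is a minimal sketch using the order-of-magnitude values above. It makes one simplifying assumption: every spike triggers transmitter release at all of the neuron's synapses. In reality, release is probabilistic and often fails, so this overestimates the number of synaptic events.

```python
# Scale per-neuron figures from the text up to whole-cortex totals.
N_NEURONS = 1e10            # cortical neurons (order of magnitude)
SYNAPSES_PER_NEURON = 1e4   # synapses per neuron (order of magnitude)
RATE_HZ = 0.16              # average firing rate, spikes per second

total_synapses = N_NEURONS * SYNAPSES_PER_NEURON
spikes_per_s = N_NEURONS * RATE_HZ

# Simplifying assumption: each spike reaches every synapse of the neuron once
# (real transmitter release is probabilistic, so this is an upper estimate).
synaptic_events_per_s = spikes_per_s * SYNAPSES_PER_NEURON

print(f"synapses:              {total_synapses:.1e}")         # 1.0e+14
print(f"spikes per second:     {spikes_per_s:.1e}")           # 1.6e+09
print(f"synaptic events per s: {synaptic_events_per_s:.1e}")  # 1.6e+13
```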

4. Metabolic Distribution

Using cerebral glucose utilization measurements from PET studies, Lennie accounted for energy allocation across different neural processes:

- Maintaining resting membrane potentials

- Generating action potentials

- Powering synaptic transmission

By combining these factors and dividing the available energy budget by the number of neurons and by the energy cost per spike, Lennie calculated that cortical neurons can sustain an average firing rate of only about 0.16 Hz while staying within the brain's metabolic constraints.
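The division described above can be written as a one-line formula. The Python sketch is a simplified reading of that step, not Lennie's full model. `spike_fraction` is a hypothetical parameter for the share of the budget left for spiking once resting potentials and other costs are paid. The budget is expressed in ATP per second. The inverse function answers the opposite question: what total budget would a given rate require?

```python
# Simplified energy-budget estimate of the sustainable average firing rate:
#   rate = (budget * spike_fraction) / (n_neurons * atp_per_spike)
# spike_fraction is a hypothetical parameter, not a value from the paper.

def sustainable_rate_hz(budget_atp_per_s: float, spike_fraction: float,
                        n_neurons: float, atp_per_spike: float) -> float:
    """Average firing rate (Hz) that the spiking share of the budget can pay for."""
    if not 0.0 < spike_fraction <= 1.0:
        raise ValueError("spike_fraction must be in (0, 1]")
    return budget_atp_per_s * spike_fraction / (n_neurons * atp_per_spike)

def required_budget_atp_per_s(rate_hz: float, spike_fraction: float,
                              n_neurons: float, atp_per_spike: float) -> float:
    """Inverse: total ATP per second needed to sustain rate_hz."""
    if not 0.0 < spike_fraction <= 1.0:
        raise ValueError("spike_fraction must be in (0, 1]")
    return rate_hz * n_neurons * atp_per_spike / spike_fraction

N_NEURONS = 1e10       # cortical neurons, from the text
ATP_PER_SPIKE = 3.84e9 # ATP per action potential, from the text

# Illustrative only: the budget implied by 0.16 Hz if spiking took 50%.
budget = required_budget_atp_per_s(0.16, 0.5, N_NEURONS, ATP_PER_SPIKE)
rate = sustainable_rate_hz(budget, 0.5, N_NEURONS, ATP_PER_SPIKE)
print(f"implied budget: {budget:.2e} ATP/s")  # implied budget: 1.23e+19 ATP/s
print(f"round-trip rate: {rate:.2f} Hz")      # round-trip rate: 0.16 Hz
```

The 50% share is arbitrary. The point is the shape of the argument: a fixed budget divided among many neurons and expensive spikes leaves room for only a low average rate.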

Implications for Neural Coding

This extremely low firing rate has profound implications for our understanding of neural computation. It suggests that:

- Neural coding must be remarkably sparse — information in the brain is likely represented by the activity of relatively few neurons at any given moment

- Energy efficiency has shaped brain evolution — metabolic constraints have driven the development of computational strategies that maximize information processing while minimizing energy use

- Low baseline rates enable selective amplification — this sparse background activity creates a context where meaningful signals can be effectively amplified
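One way to see why 0.16 Hz implies sparse coding is to ask what fraction of neurons fires at least once in a short time window. This sketch assumes that every neuron fires independently as a Poisson process at the same average rate. That is a deliberate simplification, since real cortical firing rates vary widely from cell to cell. The window lengths are arbitrary examples.

```python
import math

RATE_HZ = 0.16  # average cortical firing rate from the text

# Mean interspike interval: the "one spike every 6 seconds" figure.
mean_interval_s = 1.0 / RATE_HZ  # 6.25 s

def fraction_active(rate_hz: float, window_s: float) -> float:
    """P(at least one spike in window_s) for a Poisson neuron: 1 - exp(-r*t)."""
    # expm1 keeps precision when rate_hz * window_s is small
    return -math.expm1(-rate_hz * window_s)

for window_ms in (10, 100, 1000):
    p = fraction_active(RATE_HZ, window_ms / 1000)
    print(f"{window_ms:>4} ms window: {p:.2%} of neurons fire at least once")
```

Under these assumptions, only about 0.16% of neurons fire within any given 10 ms window.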

The brain's solution to energy constraints reveals an elegant approach to computation: doing more with less through strategic sparsity rather than constant activity.

This perspective on neural efficiency continues to influence our understanding of brain function and inspires energy-efficient approaches to #ArtificialNeuralNetworks and #neuromorphic computing.