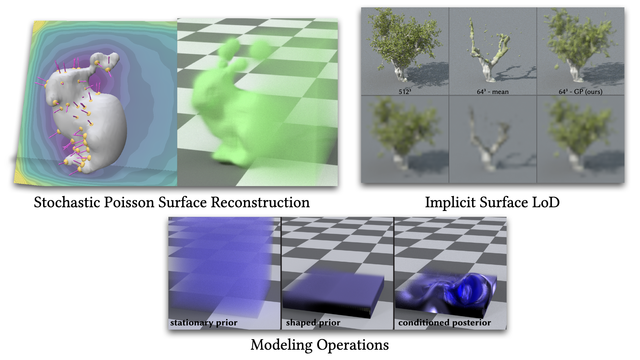

We have opened applications for a few additional research scientist intern positions for next summer: https://metacareers.com/jobs/440476391758875/

I'm specifically looking for someone with a strong graphics background who is also familiar with diffusion models!