@davidgerard Out of all the horrible comedies Matt Lucas has been a part of, I think this Marc Andreessen character has to be one of his worst creations.

Design enthusiast. Experienced user.

I have been making these videos about tech & design: https://www.youtube.com/playlist?list=PLwvAAoSdsXWxHwFbULgbpVGiLmJZv4X1g

and in audio: https://pnc.st/s/faster-and-worse

An Australian in Amsterdam

Verizon’s new AI chatbot — customer disservice

We don’t care. We don’t have to. We’re the phone company.

https://pivot-to-ai.com/2025/06/25/verizons-new-ai-chatbot-customer-disservice/ - text

https://www.youtube.com/watch?v=EGeDLdr-jgs&list=UU9rJrMVgcXTfa8xuMnbhAEA - video

tell your nearest big ai-critical account to make sure to call them "products" instead of "tools" or "technologies" please

@samir @impactology slower and better

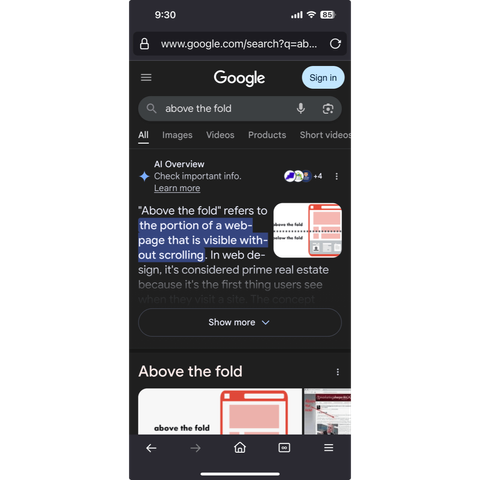

Anthropic AI wins broad fair use for training! — but not on pirated books

https://pivot-to-ai.com/2025/06/24/anthropic-wins-broad-fair-use-for-training-but-not-on-pirated-books/ - text

https://www.youtube.com/watch?v=MwoScPzTWiA&list=UU9rJrMVgcXTfa8xuMnbhAEA - video

ICYI the full Eric Schmidt - Charlie Rose interview from 2006 is here

https://archive.org/details/Charlie-Rose-2006-04-13

Google's AI overviews are annoying and useless to anyone who has defined their own purpose for the product as being a *web* search.

I talk about it in this dispatch. The way they let us project our own purposes onto their products while they chip away at their own purpose in the background.

"I'm feeling lucky" wasn't a silly joke. It was the plan all along.

It shouldn't be a surprise that they see themselves as an *answer* search, not a *web* search. The web was a resource for them.

delighted to announce that my new zine "The Secret Rules of the Terminal" is out today!!

You can get it for $12 USD here: https://wizardzines.com/zines/terminal

@quitthebiz me too!

Veeeery tempting, Slack. But I've been around long enough to remember "functionalities" being a lazy internal catch-all for "the shit we need to cram into the product before the imaginary sales deadline"

@txo_elurmaluta added an edit!

@txo_elurmaluta oh just the fact that there are no actual search results above the fold haha. I was being annoyingly vague

how is this not hostile to the open web?

edit: *because there are no search results above the fold