💾 🤗 Call for Participation for the Critical Code Studies Working Group (CCSWG 24) #critcode #dh

(Deadline extended to January 31!)

Topics for this year include:

☑ Queering Code

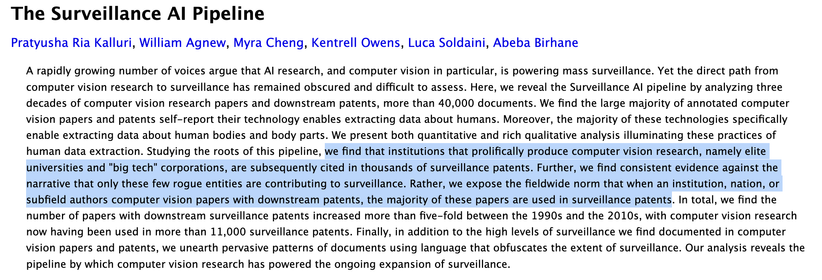

☑ CCS and AI

☑ The DHQ Special Issues

☑ Teaching Code and Code Studies

https://docs.google.com/document/u/0/d/1TVYqLOIbYYs6qsMd5ah-DQEaQCEhe5GEQCk7ITdDvoI/mobilebasic