Just published part 3 of my blog post series on making beautiful slides for your talks 🎨✨

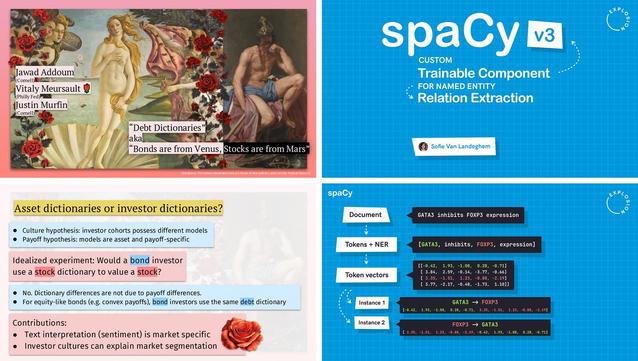

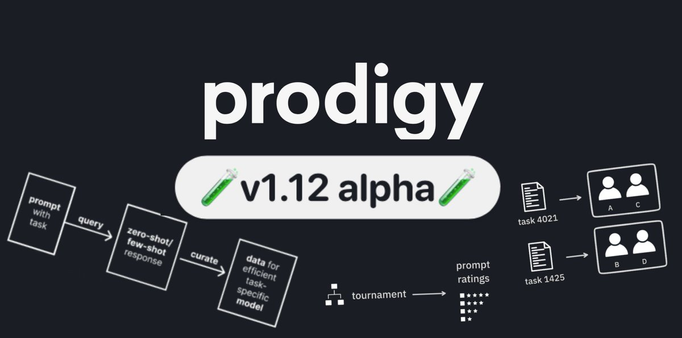

This one is about presenting technical content and making dry and abstract topics more interesting. Featuring many examples, including talks by Vitaly Meursault and @sofie!

https://ines.io/blog/beautiful-slides-talks-part-3-technical-content/