Yes yes, I hate making slides. But more as a matter of structure, what tools/services would you recommend to describe an emergent / non-linear structure of connected thoughts (a la mindmap) that can be meaningfully presented to others? (Tried w Prezi before w ok-ish results.)

Sr Director of SentinelLabs @ SentinelOne. Adj. Professor @ Johns Hopkins SAIS. LABScon Organizer, Cyber Paleontologist, Fourth-Party Collector.

@agreenberg I have named names and boy do they get pissy.

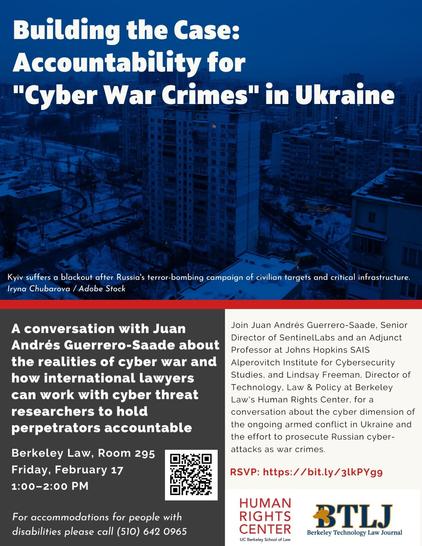

Join @hrcberkeley Technology, Law & Policy Director Lindsay Freeman in conversation with cyber threat researcher @jags of @SentinelOne on the dimensions of #cyberwarfare in Ukraine on February 17 at @berkeleylaw.

#RSVP now: https://bit.ly/3lkPYg9

Is everyone actually over here now? Twitter is dead.

(Lazy |)Writers of Mastodon, got dictation / speech-to-text recommendations? Bonus points for a pleasant workflow in iOS/macOS.

👉 New on #SentinelLabs! A .NET malware loader, dubbed MalVirt, is being distributed through malvertising and uses obfuscated virtualization for anti-analysis and evasion in an ongoing campaign. By @milenkowski and @hegel

https://www.sentinelone.com/labs/malvirt-net-virtualization-thrives-in-malvertising-attacks/

@n0x08 this is what I’ve been obsessing over! If it hadn’t been for ChatGPT I’m not sure we could’ve gotten twenty students of completely different backgrounds and technical skills to go through that course. I’m really glad you had a similar experience!

I accomplished a new milestone in my reverse engineering today. A colleague asked me to figure out how the “getfw” tool used in some Cisco images to decrypt firmware out of their downloadable images works so he could use Python to extract them at-scale.

So I threw it in IDA, narrowed in on a function called “fwdec” then dropped the assembly into ChatGPT … wait wut?

https://alperovitch.sais.jhu.edu/an-experiment-in-malware-reverse-engineering/

My good friend @jags recently showed that ChatGPT is extremely useful for RE newbs like me so I ran with it.

It was able to explain that the assembly code was loading strings into memory. And those strings?

OpenSSL decryption commands; including the passphrase, actually 2 passphrases (one from 2017, one from 2018). Worked like a charm once my colleague plugged it into his code and 100% of the images were decrypted.

Yeah, it’s not reversing stuxnet but considering it took me - with nearly zero IDA skills - under an hour to figure it out I thought it was pretty damn cool.
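For anyone curious what "plugged it into his code" might look like, here's a rough sketch, not the colleague's actual script. It assumes the recovered strings boil down to an `openssl enc -d` call with a passphrase, and it simply tries each known passphrase in turn. The cipher name, the passphrase values, and the helper names (`build_openssl_cmd`, `decrypt_image`) are all placeholders I made up for illustration; the real ones would come from the strings found in the binary.

```python
import subprocess

# Placeholders only: the real passphrases and cipher are whatever the
# recovered OpenSSL command strings actually contain.
CANDIDATE_PASSPHRASES = ["passphrase-2017", "passphrase-2018"]
CIPHER = "aes-256-cbc"  # assumption, not taken from the original tool


def build_openssl_cmd(src, dst, passphrase, cipher=CIPHER):
    """Build the argument list for an `openssl enc -d` decryption call."""
    return [
        "openssl", "enc", "-d", f"-{cipher}",
        "-in", str(src),
        "-out", str(dst),
        "-pass", f"pass:{passphrase}",
    ]


def decrypt_image(src, dst, passphrases=CANDIDATE_PASSPHRASES, run=subprocess.run):
    """Try each passphrase; return the first one openssl accepts, else None.

    `run` is injectable so the loop can be exercised without openssl installed.
    """
    for passphrase in passphrases:
        result = run(build_openssl_cmd(src, dst, passphrase), capture_output=True)
        if result.returncode == 0:
            return passphrase
    return None
```

A zero exit code is only a hint that the passphrase was right (a wrong key usually fails the padding check, but not always), so a real batch job would probably also sanity-check the decrypted output, e.g. by looking for an expected file header.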

Last week, we conducted an experiment at @alperovitch: an intensive primer on Malware Analysis for non-technical students. Unlike beginner MA courses that give a light smattering of approachable tools and concepts, we’d walk through the analysis of a single sample end-to-end.

In order to keep myself intellectually honest, we plucked a malware sample I had never analyzed before– an Agent.BTZ sample– and started with initial triage -> light static analysis w HIEW -> deeper static analysis with IDA -> pinpoint debugging w x64dbg -> report writing.

We asked students to do an inordinate amount of prep for a weeklong course– reading a minimum of 14 chapters of Sikorski's Practical Malware Analysis, plus a list of quick start references. And *surprisingly*, a majority of them did, making it possible to move quicker.

Despite prep, there's one seemingly insurmountable aspect of this subject w students of varying subject familiarity– every student was some combination of: don't know assembly, don't know how to code, not familiar w programming concepts, hadn't used any of these tools, etc.

That's where @openai ChatGPT stepped in as a teaching assistant able to sit next to each student and answer all 'stupid' questions that would derail the larger course. It was a first-attempt TA that helped students *refine* their questions more meaningfully.

Was it ever wrong? Absolutely! And it was amazing to see students recognize that, refine their prompts, and ask it and me better questions. They felt empowered to approach a difficult side-topic by having ChatGPT write a Python script or tell them how to run it, and then move on.

Fearmongering around AI (or outsized expectations of perfect outputs) clouds the recognition of this LLM's staggering utility: as an assistant able to quickly coalesce information (right or wrong) with extreme relevance for a more discerning intelligence (the user) to work with.

Thankfully, in a professional development course, there's little room for performative concerns like plagiarism– you're welcome to rob yourself but the point here is to learn how something is done and have a path forward to the largely esoteric practice of reverse engineering.

I'm staggered by the sincere engagement of our students. Even after 5-6 hours of instruction, I'd receive 11pm messages telling me they'd unobfuscated a string in the binary and wanted to understand how it might be used. They pushed themselves way past their comfort zone.

In the end, we went from some vague executable blob to seeing how an old Agent.BTZ sample would attempt to infect USBs, unobfuscate hidden strings, resolve APIs, establish persistence, and call out to a satellite hop point to reach a hidden command-and-control server.

This was a purely experimental endeavor in the hope of bolstering meaningful cybersecurity education. Some may choose to engage further with malware analysis; many more will hopefully enter the larger policy discussions around this subject with a rare grasp of the subject at hand.

My sincere thanks to @ridt + @EllyRostoum + @alperovitch faculty for their support in enabling this first-time course at every level. Also thank you to @HexRaysSA for educational access to IDA Pro, and @openai for inadvertently superpowering our educational experiment.

More here: https://alperovitch.sais.jhu.edu/five-days-in-class-with-chatgpt/

Last week I was a student for five days, five hours per day, with ChatGPT fully integrated into teaching. Here's what we learned, just in time for Spring Term (which starts tomorrow). Class was Malware Analysis, taught by @jags https://alperovitch.sais.jhu.edu/five-days-in-class-with-chatgpt/

Ok, be honest, people, HexRaysPyTools has been borked for some time now. What are folks using to handle structure creation, discovery, expansion, etc. on the newer versions of IDA (and thereby Python 3)????

@mattd when you put it that way…

Man, I’d love to get HackedTeam[.]com but it’s apparently owned by a squatting domain asking for $3K >.<

@_vventura It's not where I'd *start* with Wittgenstein, especially not in particular regard to that topic, but if you're more familiar, it's proving an interesting vignette.

For folks interested in starting–

- Ray Monk's biography (The Duty of Genius)

- Philosophical Investigations (PI)

|_+ The Blue Book probably.

More specific to AI, it's usually excerpts from PI + his notebooks on Philosophy of Language / Philosophy of Psychology specifically (they can be found as individual collections by topic)

|_ the easier route is Stuart Shanker's collection of most relevant remarks:

https://www.amazon.com/Wittgensteins-Remarks-Foundations-Stuart-Shanker-ebook/dp/B0013PKPBK/

I get the sense that this particular corner of my knowledge of Wittgenstein could use updating as it seems folks have added new scholarship in the area. (Whether meaningful or not ¯\_(ツ)_/¯)

More central to Later Wittgensteinian thought are the discussions on Logical Grammar–

- On Certainty

|_+ Wittgenstein on the Arbitrariness of Grammar https://www.amazon.com/Wittgenstein-Arbitrariness-Grammar-Michael-Forster-ebook/dp/B002WJM4KG/

@sanjuanswan Wittgensteinians of Infosec, ASSEMBLE!