@danbarber damn, this looks sick. I like that it doesn't require disabling SIP

Author, The Complete Guide to Rails Performance. Co-maintainer of Puma. http://speedshop.co (he/him)

Does anyone use yabai on macOS who can comment on a) stability and b) responsiveness? I've been a long-time Amethyst user, but constantly having to restart it and the slightly slow layouting aren't great.

@jamie That's the _queue_ time only, not service/response time.

When setting cooldowns for scaling up or down horizontally:

It is important you EVENTUALLY scale down, but it's not important that you scale down QUICKLY. The exact opposite is true for scaling up.

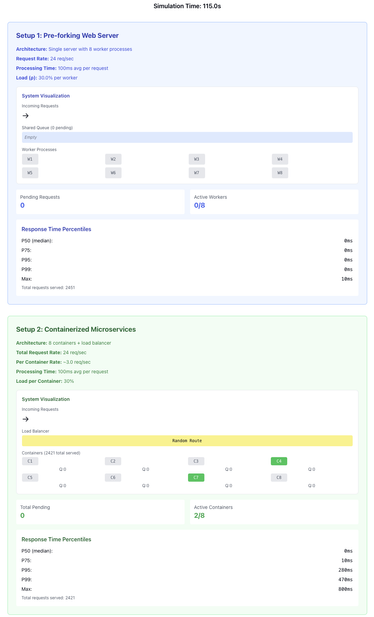

Try it yourself with this demo: https://claude.ai/public/artifacts/19d65052-ef7c-4ba6-a1a4-05d200795f45
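A minimal Python sketch of the asymmetric-cooldown idea, assuming a hypothetical scaler that checks request queue time on each tick. The class name, the thresholds (50 ms / 5 ms) and the cooldowns (30 s up, 600 s down) are all made-up illustrative values, not numbers from the post or from any real autoscaler.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AsymmetricScaler:
    """Hypothetical horizontal autoscaler with separate up/down cooldowns."""
    min_instances: int = 1
    max_instances: int = 20
    scale_up_cooldown: float = 30.0     # seconds; short, because slow scale-up hurts users
    scale_down_cooldown: float = 600.0  # seconds; long, because slow scale-down only costs money
    upper_queue_ms: float = 50.0        # hypothetical queue time above which we add capacity
    lower_queue_ms: float = 5.0         # hypothetical queue time below which we remove capacity
    instances: int = 1
    last_change: Optional[float] = None

    def _cooled_down(self, now: float, cooldown: float) -> bool:
        # With no previous scaling event, no cooldown applies yet.
        return self.last_change is None or now - self.last_change >= cooldown

    def tick(self, now: float, queue_time_ms: float) -> int:
        """Observe queue time at `now` (seconds) and return the instance count."""
        if queue_time_ms > self.upper_queue_ms and self.instances < self.max_instances:
            if self._cooled_down(now, self.scale_up_cooldown):
                self.instances += 1
                self.last_change = now
        elif queue_time_ms < self.lower_queue_ms and self.instances > self.min_instances:
            if self._cooled_down(now, self.scale_down_cooldown):
                self.instances -= 1
                self.last_change = now
        return self.instances


if __name__ == "__main__":
    scaler = AsymmetricScaler()
    # Load spike: one step up every 30 s while queue time stays high.
    for t in range(0, 91, 10):
        scaler.tick(t, queue_time_ms=200.0)
    print(scaler.instances)  # 5
    # Quiet period: nothing is removed until 600 s after the last change.
    for t in range(100, 700, 10):
        scaler.tick(t, queue_time_ms=1.0)
    print(scaler.instances)  # 4
```

The only thing that makes this "asymmetric" is the two cooldown fields: the spike adds capacity within seconds, while the quiet period has to last ten minutes before a single instance goes away.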

Which web app server setup performs better:

1. 1 container with 8 forked child processes

2. 8 containers with 1 process each, round robin/random load balancer in front

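It's #1, and it's not even close! In one container, the 8 forked processes share the listening socket, so whichever process is free picks up the next request. With 8 separate containers, a random or round-robin load balancer can send a request to a busy container while another one sits idle. This is extremely relevant if you have to use random/RR load balancers.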
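A rough queueing simulation of the two setups, assuming Poisson arrivals, exponential service times, FIFO order and 70% utilization. These are textbook assumptions chosen for illustration, not measurements of any real app. "shared" models one container whose processes pull from a common queue; "random" and "round_robin" model 8 single-process containers behind those load balancer policies.

```python
import random


def simulate(n_requests: int, n_workers: int, utilization: float,
             policy: str, seed: int = 42) -> float:
    """Return mean queue wait, in units of the mean service time."""
    rng = random.Random(seed)
    arrival_rate = utilization * n_workers  # requests per mean service time
    free_at = [0.0] * n_workers             # when each worker next becomes free
    t = 0.0
    total_wait = 0.0
    for n in range(n_requests):
        t += rng.expovariate(arrival_rate)  # next arrival time
        service = rng.expovariate(1.0)      # mean service time = 1.0
        if policy == "shared":
            # One container: the first process to free up takes the next request.
            i = min(range(n_workers), key=free_at.__getitem__)
        elif policy == "round_robin":
            # Separate containers, picked in strict rotation regardless of load.
            i = n % n_workers
        elif policy == "random":
            # Separate containers, picked at random regardless of load.
            i = rng.randrange(n_workers)
        else:
            raise ValueError(f"unknown policy: {policy}")
        start = max(t, free_at[i])
        total_wait += start - t
        free_at[i] = start + service
    return total_wait / n_requests


if __name__ == "__main__":
    for policy in ("shared", "round_robin", "random"):
        print(policy, round(simulate(20_000, 8, 0.7, policy), 3))
```

Under these assumptions the shared queue waits roughly an order of magnitude less than either per-container policy, because no request ever waits behind a busy process while another process is idle.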

@tenderlove are you doing (or aware of) any work in Ruby that might give us either:

a) our own allocator

b) two heaps, one for general purpose use and one for objects that we know (or are marked?) to be long-lived

@zenspider hey, these days that's pretty good 😂

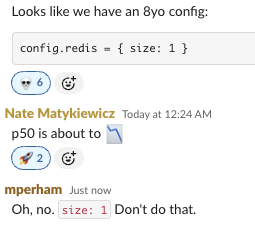

From the CGRP Slack: don't be stingy with Redis connection pool sizes. Redis is pretty efficient with idle connections, so I just set the size to either 100 (if using Mike's connection_pool gem, which creates connections lazily, so a big cap is cheap) or equal to the concurrency of the Puma/Sidekiq thread pool.

When I'm your consultant, you can be 99% certain that what I tell you is correct, and you can make fast, big bets and decisions based on that.

If an LLM tells you something, you can't just immediately go sign up for $300k worth of yearly AWS spend without significant additional research.

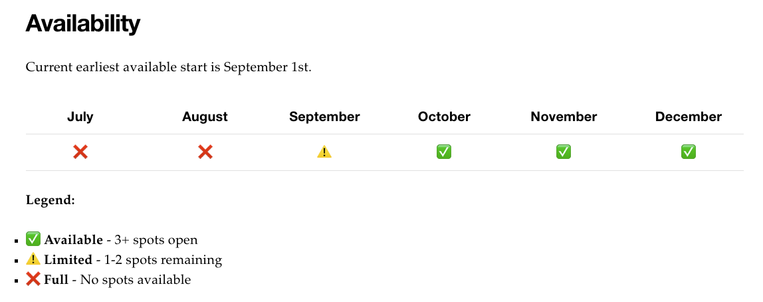

Retainer full for the summer!

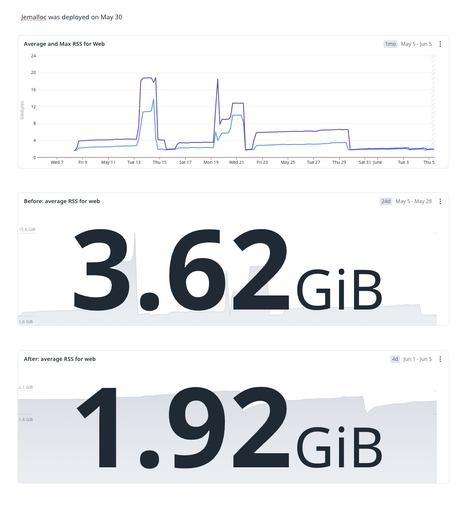

NEVER MISSES. Jemalloc STRIKES AGAIN, this time for a retainer client.

47% less memory with two lines changed in the Dockerfile. $2000/year saved on Fargate costs.

Kinda crazy that 9 months ago I felt the need to excuse this; now I just sort of assume everything is AI-generated garbage.

The more I use it, the more I'm "all-in" on Datadog. I love every new addition they make to the product, and the changes they're making to older parts, too.

1. Love the new Scorecards feature.

2. The new Notebook 2.0 UI is amazing.

3. CMD-K to navigate through the massive feature set

@getajobmike What do you think about making Manager#workers public in some way? Give me a method to add or remove 1 (or n) Processor threads.

If you're a team of 5+ and you don't have formal, tracked SLOs around:

* Request queue time (web server)

* Background job queue time (per queue)

... you should, because you already have an informal one (your customers complaining).
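A minimal sketch of what "formal, tracked" could mean in practice: check a batch of queue-time samples against an objective of the form "X% of requests wait at most N ms". The function name, the 100 ms threshold and the 99% target are hypothetical examples, not recommended values; you would run the same check per web tier and per background job queue.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class SLOResult:
    compliance: float  # fraction of samples at or under the threshold
    met: bool          # True if compliance >= target
    budget_used: float # share of the error budget consumed (can exceed 1.0)


def evaluate_slo(queue_times_ms: Iterable[float], threshold_ms: float,
                 target: float) -> SLOResult:
    """Check queue-time samples against e.g. '99% of requests queue <= 100 ms'."""
    samples = list(queue_times_ms)
    if not samples:
        raise ValueError("no samples to evaluate")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    bad = sum(1 for q in samples if q > threshold_ms)
    compliance = (len(samples) - bad) / len(samples)
    allowed_bad = (1.0 - target) * len(samples)  # the error budget, in samples
    return SLOResult(compliance, compliance >= target, bad / allowed_bad)


if __name__ == "__main__":
    # Hypothetical samples, keyed by web tier or job queue name.
    samples = {
        "web": [5.0] * 990 + [250.0] * 10,
        "sidekiq:default": [20.0] * 950 + [5000.0] * 50,
    }
    for name, times in samples.items():
        print(name, evaluate_slo(times, threshold_ms=100, target=0.99))
```

Tracking the error budget rather than a single percentile makes it obvious when a queue is burning through its allowance before customers start complaining.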

@soaproot yeah that's kinda my point actually. We have no good measurements of productivity, so it's really hard to evaluate the effectiveness of LLMs.

Heroku "Redis" is just Valkey now. You might be using Valkey without even realizing!

I'm seeing a LOT of AI-boosting right now re: coding that is basically staging a PERFORMANCE of creating value: 15 open agents, etc.

AI has to produce _software_ that is _valuable_, not to type things into computers.