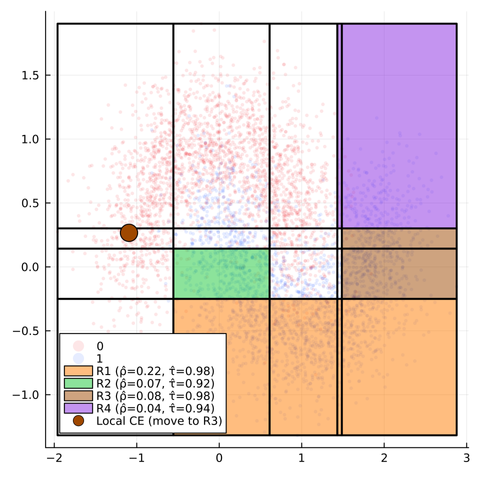

To address this need, CounterfactualExplanations.jl now supports Trees for Counterfactual Rule Explanations (T-CREx), the most novel and performant approach of its kind, proposed by Tom Bewley and colleagues in their recent #ICML2024 paper: https://proceedings.mlr.press/v235/bewley24a.html

Check out our latest blog post to find out how you can use T-CREx to explain opaque machine learning models in #Julia: https://www.taija.org/blog/posts/counterfactual-rule-explanations/