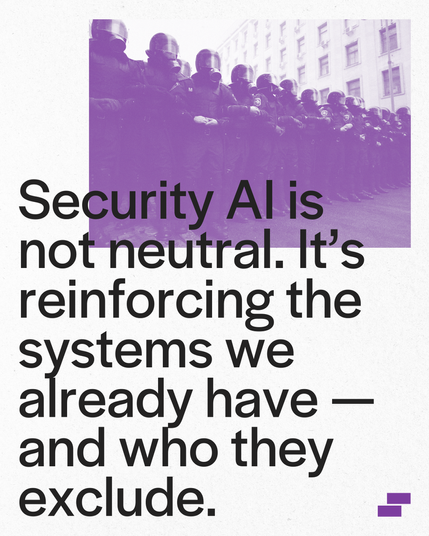

Security AI is not neutral. It reinforces the systems we already have, and the exclusions built into them.

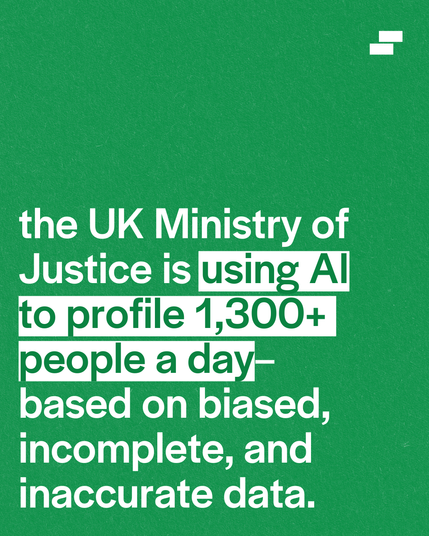

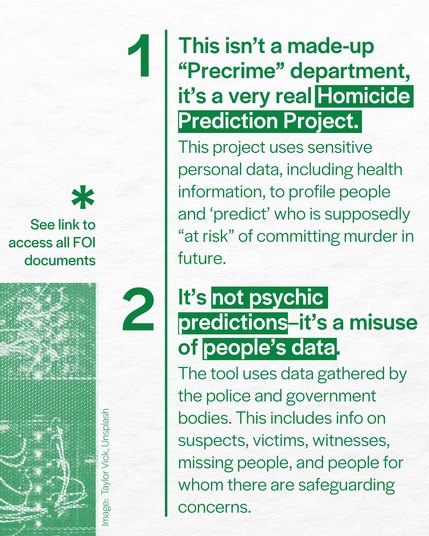

Facial recognition, risk scoring, behaviour prediction — EU governments are building AI tools into policing and migration systems already marked by racism, over-surveillance and discrimination.

Policymakers talk about “debiasing” these tools, but sidestep the real issue: AI is being deployed to reinforce structures of exclusion, not to dismantle them.

📓 Read our report: https://buff.ly/5QD3kXD