Starting off with the Doctoral Consortium at #IUI2023. Happy to be a mentor for the future generation of HCI/AI researchers.

Reader in Responsible and Interactive AI, University of Glasgow

5 days of AI + HCI coming up at #IUI2023. Looking forward to the conference and exploring the intersection of these important fields.

Happy to announce that I have taken on the leadership of the GIST research section here at the University of Glasgow. Onwards and upwards!

Want certified online training in designing inclusive technology, whatever the gender of your users? Get started now: http://gendermag.org/onlinetraining.php

Last post before the new year. Have stopped checking my email. Didn’t get everything done I wanted to do but practising not beating myself up about it. Let’s all enjoy a festive time and happy holidays everyone!

We’ll be presenting our TiiS paper about lay users finding and fixing ‘fairness bugs’ in Sydney. Woohoo! @nkwyri

If you work in interactive machine learning you will need to be equally good at HCI and AI. Discuss.

The 10th Heidelberg Laureate Forum will be held September 24-29, 2023 in Heidelberg, Germany. It brings together Laureates in computing with 200 early career researchers, who can apply directly or be nominated. Nominators need to register using the ACM “Organization code”: ACM49263.

Deadline to apply: February 11, 2023

For more info, see https://www.heidelberg-laureate-forum.org/faq/.

Off to give a seminar and examine a PhD tomorrow. Travelling for only the second time since the pandemic started. Excited and slightly apprehensive at the same time, as I’ve forgotten most airport etiquette.

Very busy start to the week working on Responsible AI proposals and projects. Hot area to be in.

Super fun week giving guest lectures on Responsible AI to our grad apprentices here in Glasgow and on Explainable AI to students at Cambridge. I hope that many follow in my footsteps to tackle these important issues.

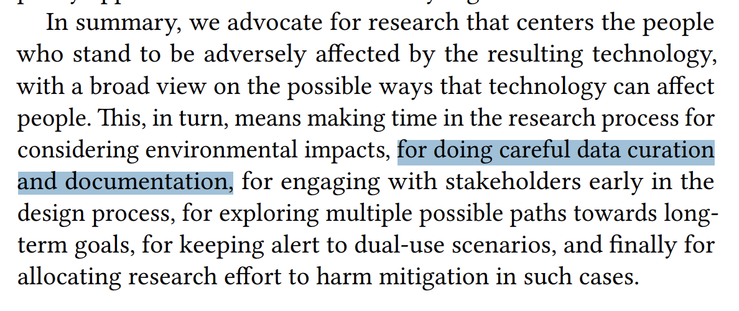

![Screencap: "When we rely on ever larger datasets we risk incurring documentation debt, 18 i.e. putting ourselves in a situation where the datasets are both undocumented and too large to document post hoc. While documentation allows for potential accountability [13, 52, 86], undocumented training data perpetuates harm without recourse. Without documentation, one cannot try to understand training data characteristics in order to mitigate some of these attested issues or even unknown ones. The solution, we propose, is to budget for" and footnote 18 "On the notion of documentation debt as applied to code, rather than data, see [154]."](https://files.mastodon.social/cache/media_attachments/files/110/067/729/289/126/981/small/8427c162a41ca9c8.png)

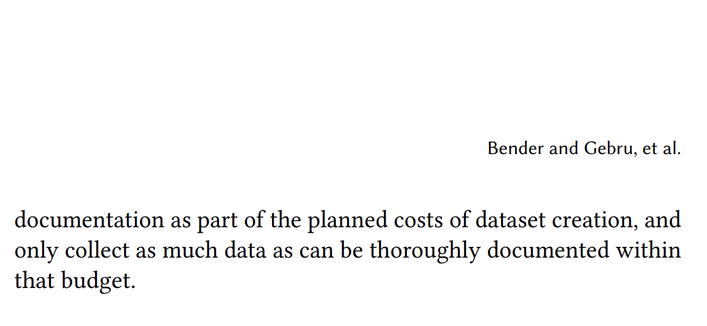

![Screencap "As a part of careful data collection practices, researchers must adopt frameworks such as [13, 52, 86] to describe the uses for which their models are suited and benchmark evaluations for a variety of conditions. This involves providing thorough documentation on the data used in model building, including the motivations underlying data selection and collection processes. This documentation should reflect and indicate researchers’ goals, values, and motivations in assembling data and creating a given model. It should also make note of potential users and stakeholders, particularly those that stand to be negatively impacted by model errors or misuse. We note that just because a model might have many different applications doesn’t mean that its developers don’t need to consider stakeholders. An exploration of stakeholders for likely use cases can still be informative around potential risks, even when there is no way to guarantee that all use cases can be explored." 2nd-4th sentences highlighted in blue.](https://files.mastodon.social/cache/media_attachments/files/110/067/729/539/431/707/small/45238e14a976de33.png)