I think the focus on the accuracy of #ai generated summaries replacing traditional search misses the point.

Sure, there have been embarrassing early mistakes (put glue on the 🍕, hahaha), but these are technical issues that will be solved. Not in every case, surely, but is traditional search perfect? When we justifiably criticize something new, we should not make the mistake of idealizing the old.

The main issues are elsewhere, IMHO.

First, we centralize interpretation. Instead of each of us making our own sense of the contradictory information we find online, we get a summary that makes it all seem coherent. This is the old problem of the selection of sources prefiguring the answer, but on steroids.

Second, when culture becomes primarily training data, there is no motivation to produce it in the first place. Not a financial one, because it ruins many of the economic models, and not a social one, because it undermines the reciprocity at the heart of human communication (I write, you read, and perhaps we even talk). The dead internet theory is right.

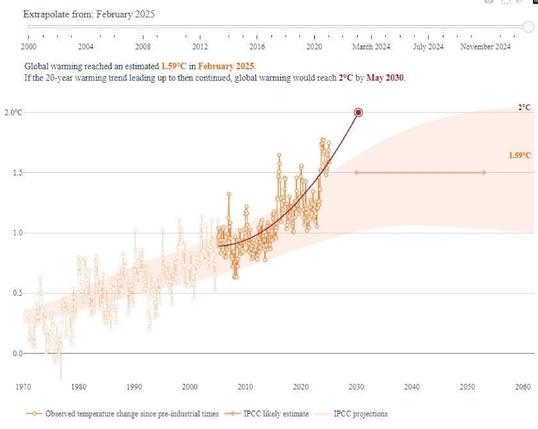

Third, ecological inefficiency. Instead of becoming smarter in terms of resource use, we become dumber. We do more or less the same things, with more resources.