The current business phase is getting us accustomed to just reading the overview and accepting it (while ignoring the source links), training us to become even less critical consumers of information. Phase two is full-on manipulation of the information, as the sources become more and more undiscoverable and the platform more and more locked in.

digital librarian, DH, DS, minimal infrastructure, static web

Classic AI overview risk example:

I searched for info about swimming on Rice Lake in Duluth. It gave me a very confident overview about pollution and closures due to monitoring, and then suggestions about better places to swim in Duluth.

This is sort of correct. But the monitoring thing didn't sound right. Sure enough, if you check the source links, they are all about other Rice Lakes 300 miles south...

Bringing together real information in wrong ways, with broken context.

= Why these AI overview searches are so dangerous. It is so easy to just read it (because it is basically what you want) and not realize how biased and inaccurate it is.

And that is even assuming the AI platform is an honest actor trying to give you the correct info. That isn't true: ultimately, they are trying to make money off your engagement.

By a 50-50 vote +1 by Vance—MAGAs voted for:

•$5T deficit increase

•Cut Medicaid for 16M

•Gut SNAP & food access

•Cut taxes for wealthy & raise taxes for working people

•$8B for ICE, $50B for a wall, $59B for concentration camps, $150B for war

This bill will kill countless people. It will make the wealthy even wealthier on the mass deaths of working class people. The cruelty is the point. Listen: MAGA Is Fascism. Period.

Slice of Life is a feature-length documentary about former Pizza Huts that have been transformed into everything from bars to churches to candy stores. “When things continue to transform, beauty can come from it, good things can come out of it.” https://kottke.org/25/07/slice-of-life

If you're a humanities researcher who wants to understand more about #openAccess publishing, Uni of London Press has created a free, online course. It takes about 1.5 hours. https://uolpress.co.uk/2025/06/new-open-access-in-the-humanities-online-training-course-launched/ #histodons

None of this is news. AI can easily replace people, if those people are dishonest or delusional.

From an editorial in Science: The #Trump #GoldStandard for science "officially empowers political appointees to override conclusions and interpretations of government scientists, threaten their professional autonomy, and undermine the scientific capacity of research and regulatory agencies."

https://www.science.org/doi/10.1126/science.adz3920

#Censorship #DefendResearch #TrumpVResearch #USPol #USPolitics

Little Nemo by Winsor McCay (1905-1914)

#comicstrip #littlenemo #comicstrips #fantasy

Oliver North being invited to Fox News as a “military analyst” talking about how Iran shouldn't have missiles and is a threat to peace in the Middle East, as if it wasn't him who sold missiles to Iran in order to create untraceable funds through which to support the Contras in Nicaragua when Congress cut funding (which was high treason).

You're a threat to peace everywhere.

“Canada is a more urban country, more multicultural and less religious. It remained steadily socially progressive while the United States got more divided. Christian nationalism, political polarization and sharp inequality are all higher in the U.S. Canadians live longer, are healthier, shoot each other less often, hate each other less, are more open to immigration. Fewer Canadians die deaths of despair.” https://macleans.ca/society/canadas-new-nationalism/

Army Officer Resigns In Protest of #Trump Policy, Defies Order to Keep Silent https://www.dailykos.com/stories/2025/6/30/2331002/-Army-Officer-Resigns-In-Protest-of-Trump-Policy-Defies-Order-to-Keep-Silent?pm_campaign=trending&pm_source=sidebar&pm_medium=web #LGBTQIA

Hi @histodons

We (@SafeguardingResearch) have been working on creating a backup of Chronicling America.

(1 large pull + many smaller ones)

https://sciop.net/datasets/chronicling-america

Do you know of any similar activities?

Would be great to combine efforts :)

Boosts ✅

#SafeguardingResearch #histodons

Republicans want to give a $300 million subsidy to American coal companies that ship the coal to China.

Yes, you read that right. Republicans want to spend your tax money so that China can get cheaper coal.

https://www.reuters.com/sustainability/coal-used-make-steel-gets-break-trumps-tax-bill-2025-06-30/

Interesting results in the Club World Cup, so I am enjoying the typical whiny English soccer commentators basically saying that the "good" teams can't win because the grass is too bad...

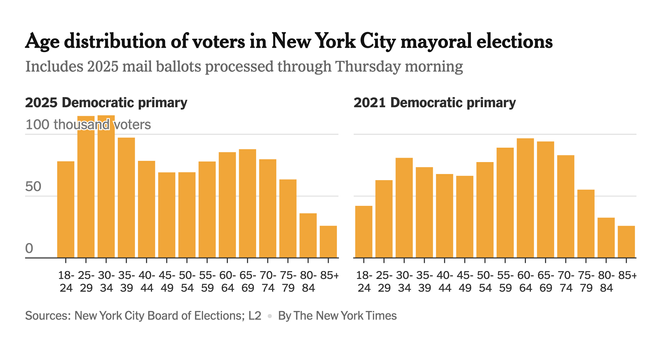

I never want to see pundits say that young people are not interested in politics ever again.

Young people aren't the problem. Uninspiring candidates are the problem.

I just don't think kids can consent to going to church

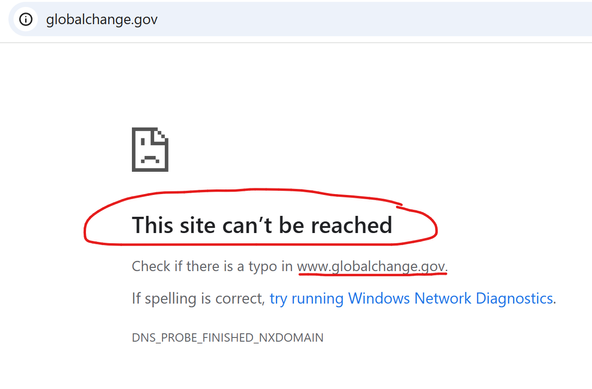

Trump has deleted the entire US Global Change Research Program website -- a research program required by federal law.

I know many people have downloaded and preserved documents from that website in recent days. The problem is bigger though: the entire future research effort has been cancelled. Existing documents can be saved. Ending future research blinds the public and policymakers to current and future risks to the country and public health.

@roopikarisam what is Reviews in DH going to do re: pubpub migration? Do you have an alternative platform?

this little EV build is hilarious