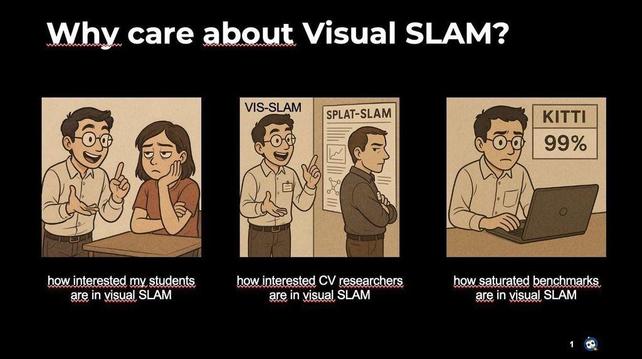

This is the first time in a while I am creating a new talk. This will be fun!

I'll be up later today at the Visual SLAM workshop at @roboticsscisys.bsky.social

roboticist, assistant professor @ Uni Bonn & Lamarr Institute

interested in self-learning & autonomous robots, likes all the messy hardware problems of real-world experiments

@sjthomas Happy user of @mailbox_org here. Their webmail has a nice integration of PGP and other encryption where you want it, but I found it less hassle for the day-to-day. It works like a charm with Apple Mail and Thunderbird.

We have an excellent opportunity for a tenured, flagship AI professorship at Uni Bonn and https://lamarr-institute.org

Application deadline is end of March.

Thesis xmas pudding, the only way to work on your thesis over the xmas period

Turns out Aria glasses are a very useful tool to demonstrate actions to robots: based on egocentric video, we track dynamic changes in a scene graph and use the representation to replay or plan interactions for robots.

🔗 https://behretj.github.io/LostAndFound/

📄 https://arxiv.org/abs/2411.19162

#robotics #computervision #mobilemanipulation #CV #ethzurich #unibonn #lamarrinstitute

our demo team is getting ready #ECCV2024

@jbigham There is one example where I really appreciate the embedded viewer: Overleaf. The reverse lookup of clicking a section in the PDF and getting the corresponding LaTeX source is just very helpful. But they even give you the option to switch to the native viewer.

Are you also a bit exhausted after #ICRA submission week? Let us brighten your day with a real "SpotLight" 💡

🔗 https://timengelbracht.github.io/SpotLight/

📄 https://arxiv.org/abs/2409.11870

We detect almost any light switch, generate an interaction for it, and can then map which switch turns on which light.

@Kultanaamio Ken Goldberg is absolutely right. I have probably never published a video myself that wasn't sped up. Robots in real time are simply too slow and too boring to watch.

Still, videos in robotics are not pure marketing; most of the time they are also important documentation that something works at all. Ten years ago, many of the current videos would hardly have been possible even with 100 takes.

#Google #GoogleX #AI #Robots #Robotics: "It was early January 2016, and I had just joined Google X, Alphabet’s secret innovation lab. My job: help figure out what to do with the employees and technology left over from nine robot companies that Google had acquired. People were confused. Andy “the father of Android” Rubin, who had previously been in charge, had suddenly left. Larry Page and Sergey Brin kept trying to offer guidance and direction during occasional flybys in their “spare time.” Astro Teller, the head of Google X, had agreed a few months earlier to bring all the robot people into the lab, affectionately referred to as the moonshot factory.

I signed up because Astro had convinced me that Google X—or simply X, as we would come to call it—would be different from other corporate innovation labs. The founders were committed to thinking exceptionally big, and they had the so-called “patient capital” to make things happen. After a career of starting and selling several tech companies, this felt right to me. X seemed like the kind of thing that Google ought to be doing. I knew from firsthand experience how hard it was to build a company that, in Steve Jobs’ famous words, could put a dent in the universe, and I believed that Google was the right place to make certain big bets. AI-powered robots, the ones that will live and work alongside us one day, was one such audacious bet.

Eight and a half years later—and 18 months after Google decided to discontinue its largest bet in robotics and AI—it seems as if a new robotics startup pops up every week. I am more convinced than ever that the robots need to come. Yet I have concerns that Silicon Valley, with its focus on “minimum viable products” and VCs’ general aversion to investing in hardware, will be patient enough to win the global race to give AI a robot body. And much of the money that is being invested is focusing on the wrong things. Here is why."

https://www.wired.com/story/inside-google-mission-to-give-ai-robot-body/

📢 We are pleased to share that the full-day workshop “CoRoboLearn: Advancing Learning for Human-Centered Collaborative Robots” will take place as part of the Conference on Robot Learning (CoRL 2024) on November 9th in Munich, Germany.

conversation is mostly happening on twitter, but … #chi2025 has apparently been desk rejecting papers whose titles have fewer than 4 words

I know the growth in papers is stressing lots of parts of the system, but I really can't fathom who thought this was reasonable.

one of the affected authors pointed out that ~5 papers on the example papers list have titles this short.

🙃

these stories sound dangerously familiar…

https://mas.to/@errantscience/113045511043226973

Exciting Day! My lab's first robot got delivered 🤩

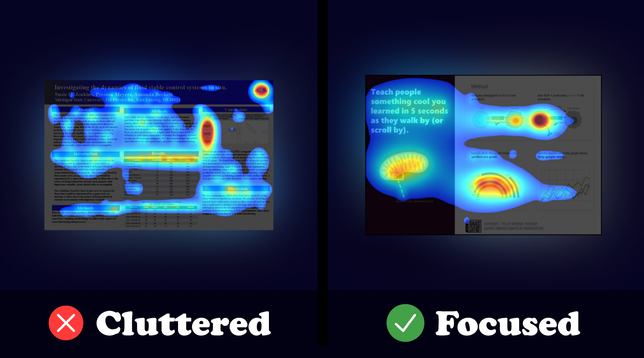

Want more attention on your scientific posters?

The most powerful design tool you can use is exactly what scientists are most afraid to use — empty space.

You can see this in action in this video recap of our new eye tracking study of scientific posters:

this is seriously cool

https://bird.makeup/users/autovisiongroup/statuses/1821056395648630840

Thanks to all speakers, contributors and helpers for a nice workshop today! Recordings are online for anybody interested https://www.youtube.com/channel/UCSUL2K_bHSS-YrYySlKfupQ

I am looking forward to #RSS this week. Ping me if you want to meet up, or join our workshop on Open-Set Robotic Perception on Monday on-site or online: https://open-set-robotics.github.io/

I’m happy to share our recent work on Creating a Digital Twin of Spinal Surgery: A Proof of Concept. We showcase how complete surgeries can be digitized in order to obtain a highly accurate and detailed spatio-temporal representation. We strongly believe that these surgical digital twins have significant potential in the areas of education and training, training surgical robots in simulation, synthetic data generation, and many more.

Project page: https://jonashein.github.io/surgerydigitization/

Today is presentation day for our 3D-Vision #3DV course. Students will present posters and demos of the projects that they have worked on over the course of the semester.

But my most important question after scrolling through their materials: Did my students have enough fun with the robot??

#Robotics #MobileManipulation #UniversityLife #ComputerVision