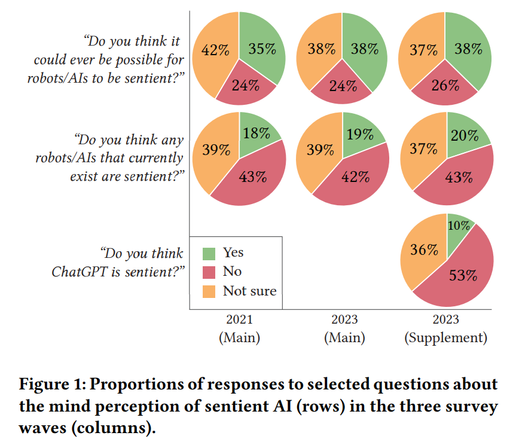

You've heard of "AI red teaming" frontier LLMs, but what is it? Does it work? Who benefits?

These are the questions for our #CSCW2024 workshop! The team includes OpenAI's red team lead, Lama Ahmad, and Microsoft's red team lead, Ram Shankar Siva Kumar.

Read and cite the paper: http://arxiv.org/abs/2407.07786

Apply to join: http://bit.ly/airedteam