Most software similar to PieFed delegates the job of maintaining the health of communities to the moderators of those communities. This frees up the instance administrators to focus on technical issues and leave a lot of the politics and social janitorial work to others.

Some issues with this:

- Moderators are not always very good at it, sometimes lacking experience or maturity.

- Moderators can become inactive, leaving their communities unmaintained.

- Moderators only have influence over their communities – if someone is removed from one community they can just go to another to cause havoc there. This leads to a lot of duplication of effort.

- Moderators can have quite different priorities and values from the admins, leaving admins paying to run a service for people they don’t feel very aligned with.

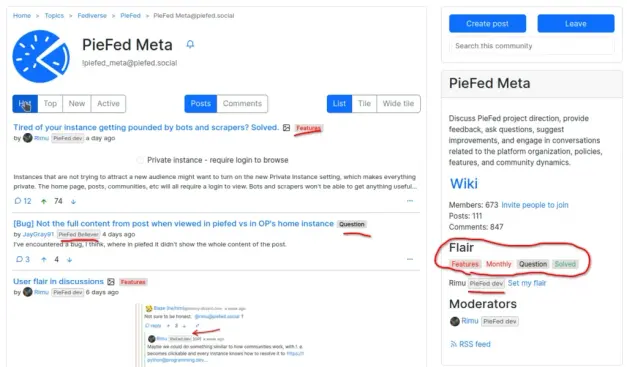

PieFed gives admins a suite of tools to take a more hands-on approach to gardening all the communities on their instance. It does this by ignoring the community divisions and instead treating all posts and accounts as a big pool of things to be managed. Of course there are solid community moderation tools available but I will not be focusing on them in this blog post.

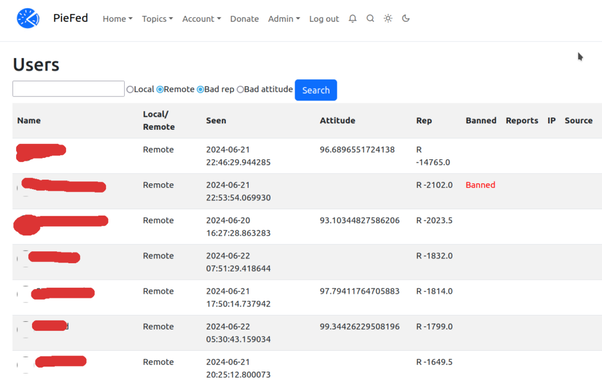

Find people who have low karma

When someone is consistently getting downvoted it’s likely they are a problem. PieFed provides a list of accounts with low karma, sorted by lowest first. Clicking on their user name takes you to their profile which shows all their posts and comments in one place. Every profile has “Ban” and “Ban + Purge” buttons that have instance-wide effects and are only visible to admins.

The ‘Rep’ column is their reputation. As you can see, some people have been downvoted thousands of times. They’re not going to change their ways, are they?

The ‘Reports’ column is how often they’ve been reported, ‘IP’ shows their IP address and ‘Source’ shows which website linked to PieFed when they initially registered. If an unfriendly forum starts sending floods of toxic people to your instance, spotting them is easy. (In the image above all the accounts are from other instances, so we don’t know their IP address or Source.)

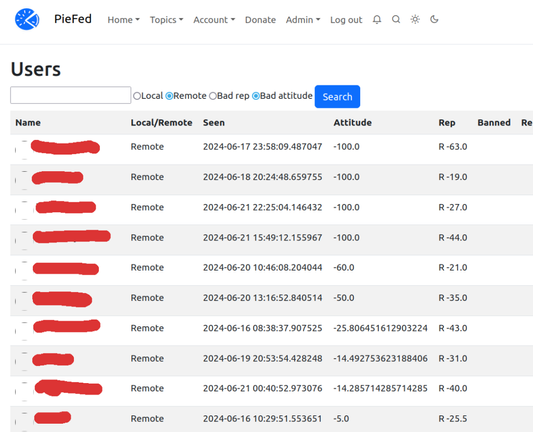

Find people who downvote too much

Once an account has cast a few votes, an “attitude” score is recalculated each time they vote, expressing the balance of their upvotes against their downvotes as a percentage.
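As a rough illustration (not PieFed’s actual code), here is one plausible reading of that calculation: the share of upvotes among all votes cast. The function name, the `MIN_VOTES` threshold and the exact formula are assumptions, since the post only says “a few votes” and “percentage”.

```python
from typing import Optional

# Assumption: the post only says "a few votes"; 5 is a placeholder value.
MIN_VOTES = 5


def attitude(upvotes: int, downvotes: int, min_votes: int = MIN_VOTES) -> Optional[float]:
    """Return the share of upvotes among all votes cast, as a percentage.

    Returns None until the account has cast at least `min_votes` votes,
    so a single early downvote does not brand someone as negative.
    """
    total = upvotes + downvotes
    if total < min_votes:
        return None
    return 100.0 * upvotes / total
```

Under this reading, an account with 9 upvotes and 1 downvote scores 90.0, while one that only ever downvotes scores 0.0.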

People who downvote more than upvote tend to be the ones who get in fights a lot and say snarky, inflammatory and negative things. If you were at a dinner party, would you want them around? By reviewing the list of people with bad attitudes you can make decisions about who you want to be involved in your communities.

All these accounts have been downvoting a lot (Attitude column) and receiving some downvotes (Rep column). Their profiles are worth a look before deciding whether they’re bringing down the vibe or not.

Spot spam easily

A lot of spam does not get reported, or it is only removed on the original instance, leaving copies of it on every instance that federates with it. To help deal with this, PieFed has a list of all heavily downvoted content posted by recently created accounts.
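A minimal sketch of that kind of filter follows. It is not taken from PieFed’s code: the `Post` class, the 7-day window and the score cut-off are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Post:
    title: str
    score: int                 # upvotes minus downvotes
    author_created: datetime   # when the author's account was registered


# Both thresholds are assumptions; the post does not give numbers.
NEW_ACCOUNT_AGE = timedelta(days=7)
SCORE_THRESHOLD = -5


def likely_spam(posts: List[Post], now: datetime) -> List[Post]:
    """Return heavily downvoted posts by recently created accounts, worst first."""
    suspects = [
        p for p in posts
        if now - p.author_created <= NEW_ACCOUNT_AGE and p.score <= SCORE_THRESHOLD
    ]
    return sorted(suspects, key=lambda p: p.score)
```

Because the filter ignores which community a post was made in, one list covers the whole instance.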

Don’t award karma in low-quality communities

Some communities are inherently useless and anyone posting popular content in them will accumulate lots of karma, which makes them seem like a valuable account. To prevent this, an admin can flag a community as “Low quality”, which severs the link between upvotes on posts and the author’s karma. Downvotes still decrease karma though, so an account that only ever posts in low-quality communities will slowly lose karma.

All communities with the word ‘meme’ in the name are automatically flagged as low quality but admins can override this on a case-by-case basis.
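The rule can be sketched like this. The function names, the one-point-per-vote scale and the case-insensitive match on ‘meme’ are assumptions made for the example, not PieFed’s real implementation.

```python
from typing import Optional


def is_low_quality(community_name: str, admin_override: Optional[bool] = None) -> bool:
    """Decide whether a community is treated as low quality.

    An explicit admin decision wins; otherwise any name containing
    'meme' is flagged (case-insensitive matching is an assumption).
    """
    if admin_override is not None:
        return admin_override
    return "meme" in community_name.lower()


def karma_change(is_upvote: bool, low_quality: bool) -> int:
    """Return how much the author's karma changes for a single vote."""
    if is_upvote:
        # Upvotes in low-quality communities earn nothing.
        return 0 if low_quality else 1
    # Downvotes always count, whatever the community.
    return -1
```

With these rules an account that only posts in flagged communities can never gain karma, only lose it.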

Warnings on unusual communities

There is a diverse range of communities, catering to different needs and ideologies. A community about “World News” will be completely different on lemmy.ml from one on beehaw.org and different again on lemmy.world. Even when the written rules of the communities look the same, the way they are interpreted and enforced can come as a big surprise to people new to the fediverse.

To deal with this, admins can add a note that is displayed above the ‘post a comment’ form, saying whatever they want. On piefed.social I’ve used this to put a note on every beehaw.org community about the ‘good vibes only’ nature of that instance and one community on lemmy.ml has a note about the unusual mostly-unwritten moderation policies employed there.

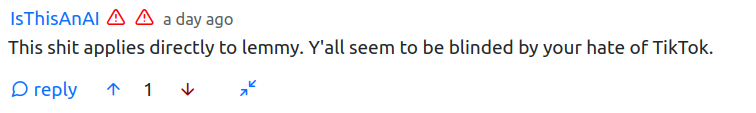

Icons next to comments by low karma accounts

Accounts that get downvoted a lot end up with negative karma. Once this happens they get a small red icon next to their user name so everyone knows that they might not be worth engaging with. Once their karma drops even further they have two red icons.

These icons also bring the account to the attention of moderators and admins in a more passive way – as they go about reading and interacting, not in a special admin area which might be forgotten or rarely visited.
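In code, the icon logic might look something like the sketch below. The post only says that negative karma earns one icon and “even further” earns two, so the second threshold here is a made-up placeholder.

```python
# Assumption: the real cut-off for the second icon is not stated in the post.
TWO_ICONS_BELOW = -10


def warning_icons(karma: int) -> int:
    """Return how many red warning icons to show next to a user name."""
    if karma < TWO_ICONS_BELOW:
        return 2
    if karma < 0:
        return 1
    return 0
```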

Icon for new accounts

New accounts have a special icon next to their user name for the first 7 days. An account with this icon AND some red warning icons is especially likely to be a spammer or troll account. While admins have dedicated parts of the admin area to find these accounts (described earlier), these icons bring them to the attention of everyone.
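A small sketch of the 7-day check, combined with negative karma to flag the accounts most worth a second look. The function names are hypothetical and this is not PieFed’s actual code.

```python
from datetime import datetime, timedelta

NEW_ACCOUNT_WINDOW = timedelta(days=7)  # the 7 days mentioned above


def is_new_account(created: datetime, now: datetime) -> bool:
    """True while the account is within its first 7 days."""
    return now - created < NEW_ACCOUNT_WINDOW


def needs_extra_scrutiny(created: datetime, now: datetime, karma: int) -> bool:
    """True for accounts that are both new and already in negative karma."""
    return is_new_account(created, now) and karma < 0
```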

Ban evasion detection

I won’t go into too much detail on this as that would reduce its effectiveness. Suffice to say, when people get IP banned from PieFed, they stay banned more often. It’s not perfect but it’ll mean they are much more likely to become some other instance’s problem.

Automatically delete content based on phrases in user name

One of the perennial issues with federated systems is that banned spammers & trolls can just move to another instance and resume their work there. When they do so they often use the same user name. PieFed lets admins maintain a list of key words that are used to filter incoming posts by checking those words against the author’s user name.

You can be pretty sure anyone with 1488 or even just 88 in their user name is a nazi, for example.
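The check itself can be very simple. Here is a minimal sketch, assuming a plain case-insensitive substring match; the function name and the matching rule are illustrative guesses rather than PieFed’s real behaviour.

```python
from typing import Iterable


def username_is_blocked(user_name: str, blocked_words: Iterable[str]) -> bool:
    """Return True if any blocked key word appears in the user name.

    Matching is a case-insensitive substring test (an assumption);
    empty entries in the list are ignored so they cannot match everything.
    """
    name = user_name.lower()
    return any(word.lower() in name for word in blocked_words if word)
```

An incoming post whose author fails this check would be dropped before it is ever stored.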

Speaking of which, PieFed has an optional approval queue for new registrations. New accounts with “88” in their name are always put in a different /dev/null queue that leads nowhere. The UI tells them they’re waiting for approval but that approval will never come.

Report accounts, not just posts

As well as reporting content, entire accounts can be reported to admins (not community mods). This smooths the workflow out a bit because usually when a post is reported the person handling the report needs to check out the entire profile of the offending account to see if it’s part of a pattern of behaviour. A report of an account takes the admin straight there.

Instance-wide domain block

PieFed has a predefined list of blocked domains comprising 3000+ disinformation, conspiracy and fake news websites. No posts linking to those sites can be created. The UI makes it easy to manage the list and add new domains to it.
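A sketch of how a link could be checked against such a list is below. Treating subdomains of a blocked domain as blocked too is an assumption, and the domain in the usage note is a made-up example.

```python
from typing import Set
from urllib.parse import urlsplit


def is_blocked_url(url: str, blocked_domains: Set[str]) -> bool:
    """Return True if the URL's host is a blocked domain or a subdomain of one."""
    host = (urlsplit(url).hostname or "").lower()
    parts = host.split(".")
    # Test the full host, then each parent domain in turn:
    # www.site.example -> site.example -> example
    return any(".".join(parts[i:]) in blocked_domains for i in range(len(parts)))
```

For instance, with `{"fakenews.example"}` as the block list, a link to `https://www.fakenews.example/story` is rejected, while `https://notfakenews.example/` is allowed because only whole domain labels are compared.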

Automatic reporting of 4chan content

Image recognition technology is used to detect and then automatically report screenshots of 4chan posts. The spreading of 4chan memes normalises 4chan, a nazi meme generation forum, and builds the alt-right pipeline. PieFed instances will not be an unwitting participant in that.

I’m sure there are a few things I forgot but hopefully this tour conveys the way PieFed does things differently. There is always more to be done or things that can be improved so I welcome feedback, code contributions and ideas – please check out https://join.piefed.social for ways to get involved.

https://join.piefed.social/2024/06/22/piefed-features-for-growing-healthy-communities/

#fediverse #moderation #piefed