@floe Ah, I see, it's this time of the year again 😆 I guess this counts as (hay) fever-induced engineering.

I make things. (he/him)

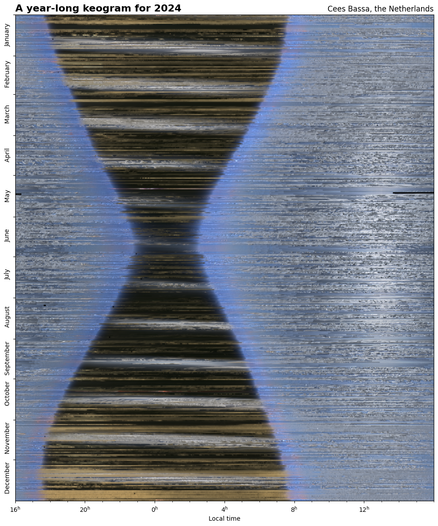

For the 4th year in a row, my all-sky camera has been taking an image of the sky above the Netherlands every 15 seconds. Combining these images reveals the length of the night changing throughout the year, the passage of clouds, and the motion of the Moon and the Sun through the sky. #astrophotography
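A rough sketch of one way such a combination could work (an assumption, the post doesn't describe the actual method): reduce every frame to a single brightness value and arrange the values in a grid with one row per day and one column per 15-second slot. The names `frames_per_day` and `to_day_grid` are made up for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60
INTERVAL_S = 15  # capture interval mentioned in the post


def frames_per_day(interval_s: int = INTERVAL_S) -> int:
    """Number of frames captured in 24 hours at a fixed interval."""
    return SECONDS_PER_DAY // interval_s


def to_day_grid(brightness: list[float], interval_s: int = INTERVAL_S) -> list[list[float]]:
    """Split a flat series of per-frame brightness values into one row per day.

    Assumes the series starts at the beginning of a day and has no gaps
    (hypothetical simplification). Trailing frames of an incomplete day are dropped.
    """
    n = frames_per_day(interval_s)
    full_days = len(brightness) // n
    return [brightness[d * n:(d + 1) * n] for d in range(full_days)]
```

Rendered as an image, each row of that grid would be one day, so longer nights show up as wider dark bands.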

@stk The WP had an interactive overview of that a while ago: https://www.washingtonpost.com/technology/interactive/2023/ai-chatbot-learning/

as a: frog

I want to: be boiled

so that: I maximize shareholder value

Coolest video I've seen today: an electronic scanner-paint plotter from 1970!

@floe Last year some numbers were reported. Looked it up: "The rate of moderation automation is very high at Meta: At Facebook and Instagram, respectively, 94% and 98% of decisions are made by machines". Will differ a bit by language for sure, but yeah, probably no scamming report will be seen by humans unless escalated multiple times...

And together with the indomitable @volzo, we present the LensLeech, an all-in-one soft silicone widget that will turn any camera into a tangible input device: https://dl.acm.org/doi/10.1145/3623509.3633382 #tei2024

The campaign to fund OpenCV 5 is now live! Open your wallets to help secure a future for open source, non-profit, computer vision and AI technology that is freely available to anyone with the desire to learn. #OpenSource #ComputerVision #AI #MachineLearning #NonProfit https://igg.me/at/opencv5

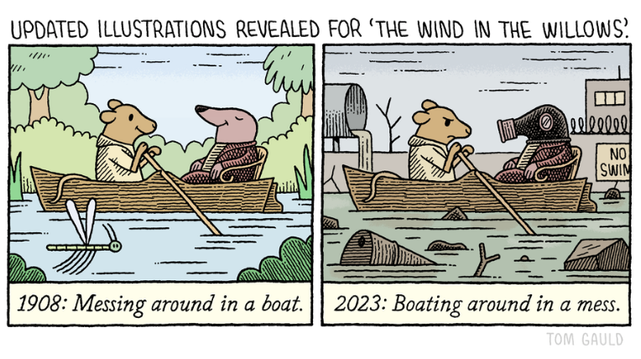

Tom Gauld on the #watercrisis

@vsaw @floe Sure, always appreciated, but don't expect too much... If you just want to get a feeling for the kind of data that can/could be generated with the app, there are two example datasets available: https://despat.de/visualize/#dataset_erfurt

@floe @vsaw Nah, wouldn't make sense. The state of the art is simply moving too fast when it comes to ML applications, and I assume the app is of little use now, almost half a decade later. Compiling from source would probably work, but Android 13 might require additional permissions for the background operations (waking up, CPU locks and camera access with disabled screen).

This was -- in its entirety -- quite a huge chunk of things to test, try, and learn. Especially starting from zero.

If you want to know more about how silicone molding with integrated lenses can be done, I did a separate video about this topic:

If you want to learn how the application examples work, I did a video about the hybrid viewfinder (and viewfinders in general):

If there is a pattern printed on the silicone, it's straightforward to detect the deformation of the pattern and infer from it what the fingers do with the silicone.
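To give a feeling for what "detecting the deformation" can mean, here is a minimal sketch. It is not the method from the paper, just an illustration under simple assumptions: the pattern is a set of dots whose image positions are already known, a sideways push shifts the dots, and a press or squeeze changes how far they spread. `estimate_input` and its helpers are hypothetical names.

```python
from math import hypot


def centroid(points: list[tuple[float, float]]) -> tuple[float, float]:
    """Mean position of a set of 2D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def mean_radius(points: list[tuple[float, float]], center: tuple[float, float]) -> float:
    """Average distance of the points from a given center."""
    return sum(hypot(p[0] - center[0], p[1] - center[1]) for p in points) / len(points)


def estimate_input(reference, observed):
    """Compare the dot pattern at rest with the currently observed one.

    Returns (dx, dy, scale): the shift of the pattern centroid in pixels
    and the ratio of the pattern spread relative to the rest state.
    """
    c_ref = centroid(reference)
    c_obs = centroid(observed)
    dx, dy = c_obs[0] - c_ref[0], c_obs[1] - c_ref[1]
    scale = mean_radius(observed, c_obs) / mean_radius(reference, c_ref)
    return dx, dy, scale


# Made-up example: the pattern moves 1 px to the right and spreads by 50 %.
rest = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
pressed = [(0.5, -0.5), (3.5, -0.5), (3.5, 2.5), (0.5, 2.5)]
dx, dy, scale = estimate_input(rest, pressed)
```

A shift could then be mapped to joystick-like input and a change in scale to a press, but that mapping is an assumption for this example.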

You can find the pre-print paper on arxiv: https://arxiv.org/abs/2307.00152

and additional info here: https://volzo.de/thing/lensleech/

Quick summary:

If you want to enable some kind of on-lens interaction you need to track fingers on or slightly above a camera lens.

With a piece of soft and clear silicone, it's easy to create a protective barrier for the lens, but the finger will be out of focus and just a skin-colored smudge.

By molding the clear silicone in the shape of a positive lens, it's possible to refract the light in a way that the focus of the camera will always be on the fingertip if it touches the silicone.
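One way to read this, assuming an ideal thin lens and a camera focused at infinity (neither is stated in the post): if the touch surface sits at the focal distance of the silicone lens, light from the fingertip leaves the lens as parallel rays, which is exactly what a camera focused at infinity images sharply. The small sketch below only evaluates the thin-lens equation; the 10 mm focal length is a made-up number.

```python
import math


def image_distance(focal_length_mm: float, object_distance_mm: float) -> float:
    """Solve the thin-lens equation 1/f = 1/d_o + 1/d_i for d_i.

    Returns math.inf when the object sits in the focal plane, i.e. the
    outgoing rays are parallel (collimated). A negative result means a
    virtual image on the object side of the lens.
    """
    if math.isclose(object_distance_mm, focal_length_mm):
        return math.inf
    return 1.0 / (1.0 / focal_length_mm - 1.0 / object_distance_mm)


# Hypothetical silicone lens with f = 10 mm, fingertip exactly at the focal plane:
at_focal_plane = image_distance(10.0, 10.0)

# The same lens with an object at twice the focal length:
at_double_focal = image_distance(10.0, 20.0)
```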

Have you ever felt the urge to touch a lens like a button or a joystick? I built some soft silicone blobs that can transform camera lenses into physical input elements.

I tried to squeeze a paper into the shape of a YouTube video: https://youtu.be/lyz52IzMcnM

Recently I was looking into 1D LIDAR scanning for facade measurements and it's crazy how hard it is to find a sensor that works well and doesn't break the bank. The 6-year-old Garmin Lidar V3 has the best price/range/precision ratio, while all of its successors and competitors perform worse, cost 3x as much, or both. Looks like shitty 2D sensors (1D sensors that spin very fast) captured the whole market...

The hard and labor-intensive part is the UX for the vector lines interface. Yet, they monetize the service by charging $99 for the plastic part.

I guess they could simply allow people to print a pattern on a regular piece of paper (just as they do with their computer vision assisted router) and charge a few cents for the web service. Do people really hate pay-what-you-use so much?

So, Shaper has a new product and it's a plastic computer vision marker frame to digitize hand-drawn lines with a photo in a web app:

https://www.kickstarter.com/projects/shaper/shaper-trace-go-from-sketch-to-vector-in-seconds

The marker pattern looks very much like Pi-Tag by Bergamasco et al., but I guess they don't really need all the fancy tricks of that paper.

I generally very much like what they do (and they are one of the few successful companies that emerged from the field of human-computer interaction). But the pricing is a bit weird on this one.

A while ago I stumbled upon the fact that cameras can reverse the perspective of an image. The only thing you need for that is a lens larger than whatever you want to take a photo of.

Because I enjoy taking the most horrible photos of faces I can, I set out to find the largest lens I could get.

If you're curious how that looks, there is a YouTube video: https://youtu.be/d0Njtko93RQ

In case you went to a wedding recently (or you are old, sorry), you may know disposable cameras. ~27 pictures on film and a lot of plastic waste.

I wondered if it's possible to make something useful with them instead of throwing them away. The answer is: kind of.

Re/Upcycling disposable cameras for toy lenses:

Video: https://www.youtube.com/watch?v=mnvB71b50wY

Blog post: https://volzo.de/thing/recyclinglenses/