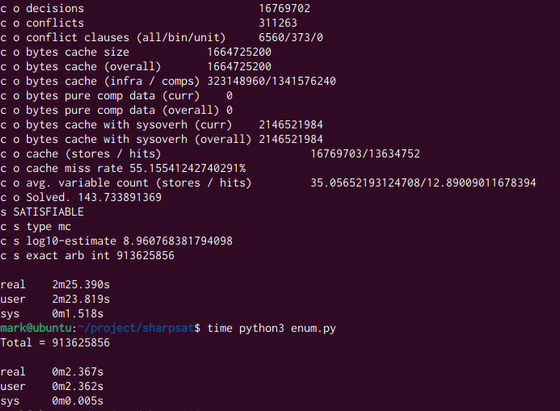

Weekend project: try to solve some #combinatorics #enumeration problems by reduction to #SharpSAT. (Which, to be clear, I thought was unlikely to succeed!)
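To make the idea of the reduction concrete, here is a toy sketch (not the actual problem from this project): counting the independent sets of a 3-vertex path by counting the satisfying assignments of a small CNF. The function name `count_models` and the example graph are illustrative assumptions, and the brute-force loop only works for a handful of variables; a real #SAT solver replaces it.

```python
from itertools import product

def count_models(num_vars, clauses):
    """Count satisfying assignments of a CNF by brute force.

    Clauses use DIMACS-style signed integers: literal k means variable k
    is true, -k means it is false. Only practical for very small num_vars.
    """
    count = 0
    for bits in product([False, True], repeat=num_vars):
        # A clause is satisfied if at least one of its literals is true.
        if all(any(bits[abs(lit) - 1] == (lit > 0) for lit in clause)
               for clause in clauses):
            count += 1
    return count

# Variable i is true when vertex i is in the set.
# Path 1 - 2 - 3: adjacent vertices may not both be chosen.
path_clauses = [[-1, -2], [-2, -3]]
print(count_models(3, path_clauses))  # independent sets of the path
```

The model count equals the number of combinatorial objects only when the encoding is a bijection, which is why encodings without auxiliary variables are the easiest to trust.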

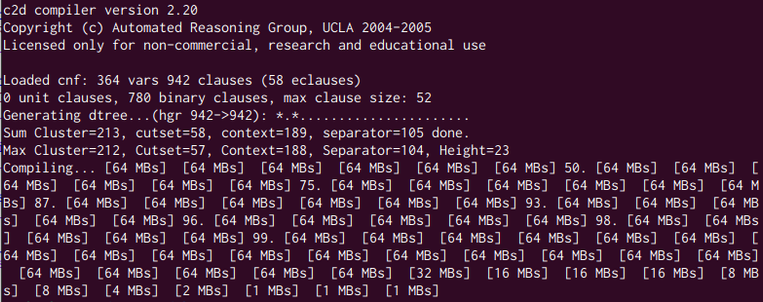

I picked c2d http://reasoning.cs.ucla.edu/c2d/ because it scored highly in the 2020 Model Counting Competition https://arxiv.org/abs/2012.01323 but I am not sure the version I got is the one that competed: mine is dated 2005 and is 32-bit only. It ran out of memory on this 364-variable, 942-clause instance (corresponding to 6 playing cards chosen from a standard 52-card deck).

Looking at the 2023 competition instead, I think I should try SharpSAT-TD https://github.com/Laakeri/sharpsat-td but it is not as well documented. For example, I don't know if it supports the "eclauses" (exactly-one clauses) extension of the DIMACS CNF format.
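If a solver turns out to accept only plain DIMACS CNF, one fallback is to expand each exactly-one constraint into ordinary clauses before writing the file. The sketch below uses the standard pairwise encoding, which adds no auxiliary variables and so leaves the model count unchanged. The helper names `expand_exactly_one` and `to_dimacs` are hypothetical, and nothing here is taken from the c2d or SharpSAT-TD documentation.

```python
from itertools import combinations

def expand_exactly_one(lits):
    """Expand 'exactly one of lits is true' into plain CNF clauses.

    Pairwise encoding: one at-least-one clause plus one binary
    at-most-one clause per pair of literals. No new variables are
    introduced, so the number of models is preserved.
    """
    clauses = [list(lits)]                      # at least one is true
    for a, b in combinations(lits, 2):
        clauses.append([-a, -b])                # no two are both true
    return clauses

def to_dimacs(num_vars, clauses):
    """Render clauses as plain DIMACS CNF text."""
    lines = [f"p cnf {num_vars} {len(clauses)}"]
    for clause in clauses:
        lines.append(" ".join(str(lit) for lit in clause) + " 0")
    return "\n".join(lines) + "\n"

# Exactly one of variables 1, 2, 3 is true.
print(to_dimacs(3, expand_exactly_one([1, 2, 3])))
```

The pairwise encoding grows quadratically with the size of each group, so an instance with large exactly-one groups will have many more clauses after expansion.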

#Satisfiability