@adgaps this is nice, font looks good! just wanted to let you know, you have a typo there in the second bullet: msot common type of church 😌

biased opinions on subjects that scarcely matter

"Emacs is a Gnostic cult. And you know what? That’s fine. In fact, it’s great. It makes you happy, what else is needed? You are allowed to use weird, obscure, inconvenient, obsolescent, undead things if it makes you happy." -- You can choose tools that make you happy

https://borretti.me/article/you-can-choose-tools-that-make-you-happy

@tommorris maybe instead abolish copyright altogether, since it largely benefits big corpos and not authors/artists?

This is cool, I didn't know about FSRS. Maybe that's why Anki kind of never grew on me 🤔 going to try that out

I got access to Gemini Diffusion, Google's first diffusion LLM, and the thing is absurdly fast - it ran at 857 tokens/second and built me a prototype chat interface in just a couple of seconds, video here: https://simonwillison.net/2025/May/21/gemini-diffusion/

@tealeg Same source - another view: https://www.technologyreview.com/2025/05/20/1116274/opinion-ai-energy-use-data-centers-electricity/

By the way - you can read the ALT-Text for more ( ;

@tealeg there are alternative, less bleak estimates. This also doesn't take into account that AI gets cheaper. You can literally run a small model that takes less memory than your browser, which was not possible a year ago. Besides, how much energy is saved by AI doing a task in minutes that previously required hours to complete?

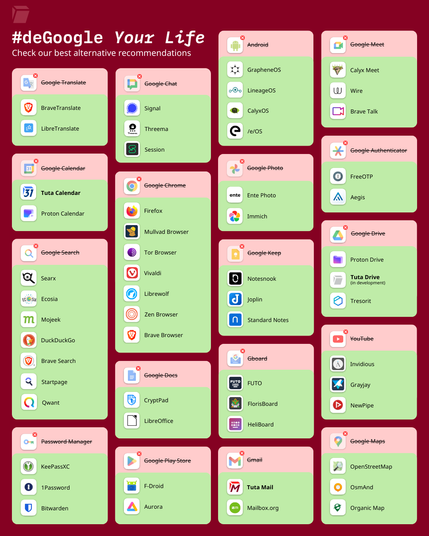

DeGoogling is possible, and it doesn't need to be difficult. 👏

Take a look at our in-depth guide to Google alternatives to learn how you can take back your privacy in 2025. ❤️🔒

👉 https://tuta.com/blog/how-to-leave-google-gmail

Have you already DeGoogled? If so, let us know your favorite Google-free apps.

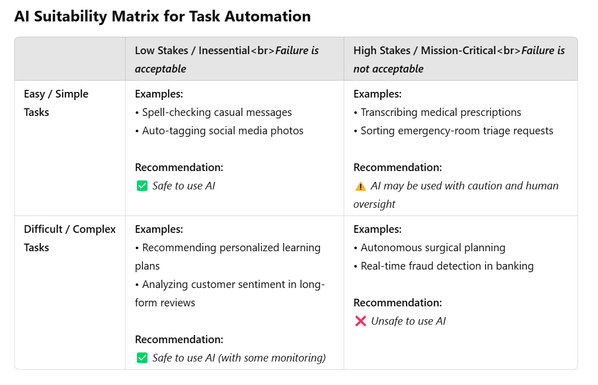

I was planning to write a short text here about how, when considering whether to use AI for a task, one should take into account not only the difficulty/complexity of the task, but also the acceptable failure rate; for instance, using an AI to suggest a recipe for dinner has an acceptable failure rate when just cooking for oneself, but would be inadvisable for a head chef preparing a state banquet for a high-profile diplomatic function, even if the two tasks are essentially of comparable difficulty (per person served, at least). But I realized that since this writing task was itself simple and with a high acceptable failure rate, it made sense to just let an AI summarize this point directly in table form, as enclosed below; it contains minor imperfections, but certainly suffices for the task at hand. [My prompt for this can be found at https://chatgpt.com/share/67e813bc-590c-800e-90a3-79d4115a5053 ]

Hmm just realized I don't see toots from some of the people I follow in my feed. They are on other instances. I thought #mastodon was supposed to pull their toots for me after I follow them? Is this not how it works? If I open up their profile I see their toots that they made after I subscribed to them. #issue #mastodonquestion

My points:

- cost of running LLMs has been and will continue to plunge. We've not yet reached the limit there

- not everyone needs an LLM or "AI" or "chatbots"

- the use-cases boil down to: dealing with mundane, routine, center-of-the-distribution texts. It's your job then to go out of the center into the fringes

(2/2)

Can someone #recommend #blogs / #newsletters / whatever, that are chaotic, broadly-themed and eschew the keywords "rationalist", "bayesian", "effective altruism" and the like? I know this is very unspecific but there's no way I could make that more precise than: think anti-Scott Alexander.

@galdor@emacs.ch @hajovonta Yes! These are the exact words told to newcomers to the #Emacs land, that if they want total control over the software, Emacs is the way to go. Once you already know how to operate it, it becomes evident how to utilize the power it gives to you. But before that, all you see is a rather unfriendly window and a lengthy tutorial, and you ask yourself, is it worth it?

By the way I *did* set up #gnus to read my #RSS feed after all but not sure if I want to continue dabbling in it

Trying out Gnus is a humbling experience that also provides a perspective on why people might not want to deal with Emacs, preferring alternative editors: it is not immediately obvious that overcoming a steep learning curve would bring benefits compared to an easier solution (like using a different news client). I just want to read my RSS feed, presented in a concise, elegant fashion; I don't want to battle with a UI that might've made sense back in the modem era.

Something from ago.

aftrimage — Superconductivity

Join us tonight at #Emacs London meetup (Sept 26th).

Register to attend at https://www.meetup.com/london-emacs-hacking/events/295656446

Add your topics to https://github.com/london-emacs-hacking/london-emacs-hacking.github.io/blob/master/meetup-agenda.org or ping me and I can add them.

Help get the word out and boost 🙏

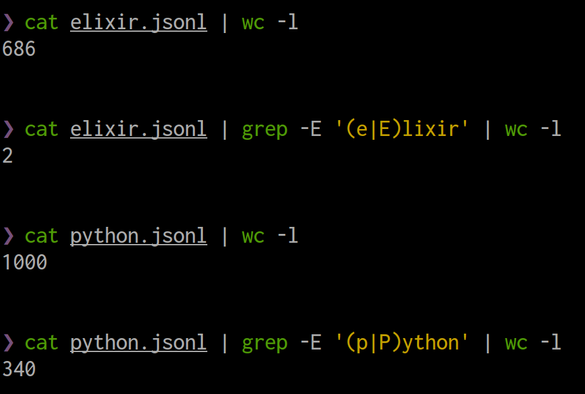

LinkedIn job search has hit absolute rock bottom. If you search for "Elixir" jobs in NL, it returns 686 results. Guess how many of them even have the word "Elixir" in them. Two!

LinkedIn literally ignores what you ask for and instead returns 17 pages of "promoted" irrelevant ads.

For Python, the ratio is about 34%, which is better, but I think that's more of a coincidence: lots of positions today at least mention Python.

@qed@emacs.ch Thanks! I actually learned this today when I was reading up on universal-argument