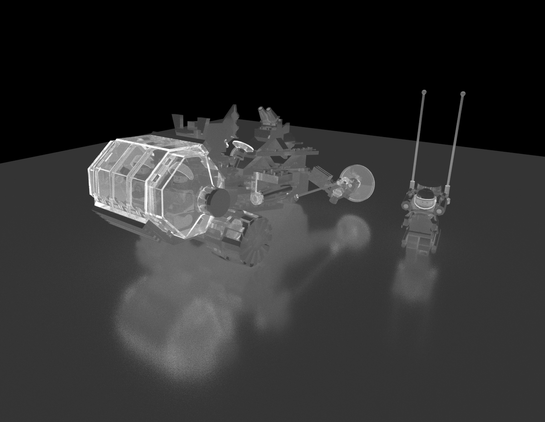

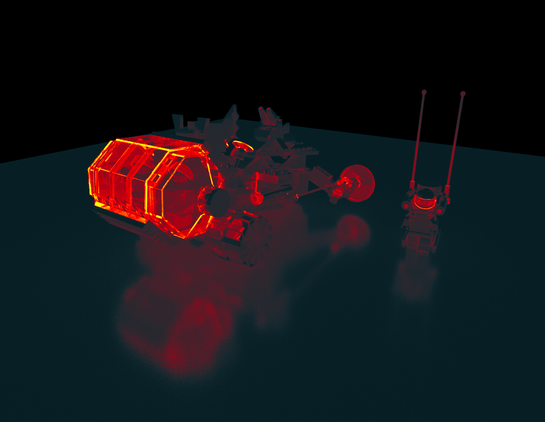

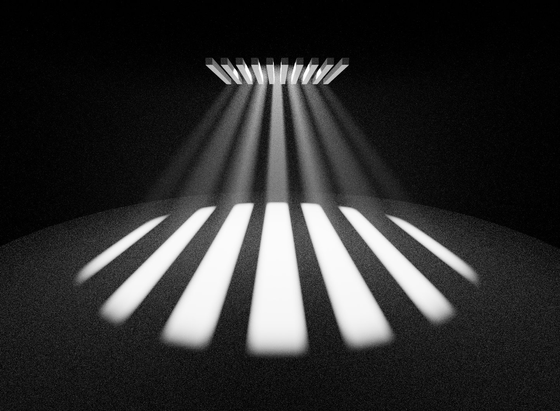

Just added a mode to three-gpu-pathtracer that renders ray depth (how many bounces each path takes) instead of surface color, to help visualize where some of the more expensive surfaces are.

These pictures show the raw debug image and the same image with a post-process color ramp applied.
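The post doesn't say how the color ramp was applied. Here's a minimal sketch of one way to do it, assuming the raw debug output has been read back as one bounce count per pixel and that you pick a `maxDepth` to normalize against. `RAMP`, `sampleRamp`, and `colorizeDepth` are hypothetical names for illustration, not part of three-gpu-pathtracer's API.

```typescript
// Hypothetical post-process: map per-pixel ray depth (bounce count) to RGBA colors.
type RGB = [number, number, number];

// Ramp stops from shallow to deep: blue -> green -> yellow -> red.
const RAMP: RGB[] = [
  [0, 0, 1],
  [0, 1, 0],
  [1, 1, 0],
  [1, 0, 0],
];

function lerp(a: number, b: number, t: number): number {
  return a + (b - a) * t;
}

// Sample the ramp at t in [0, 1] by linearly interpolating between adjacent stops.
function sampleRamp(t: number, ramp: RGB[] = RAMP): RGB {
  const clamped = Math.min(Math.max(t, 0), 1);
  const scaled = clamped * (ramp.length - 1);
  // Keep i in range so t = 1 uses the last segment with f = 1.
  const i = Math.min(Math.floor(scaled), ramp.length - 2);
  const f = scaled - i;
  const a = ramp[i];
  const b = ramp[i + 1];
  return [lerp(a[0], b[0], f), lerp(a[1], b[1], f), lerp(a[2], b[2], f)];
}

// Convert raw depths into RGBA8 pixels; depths above maxDepth clamp to the top of the ramp.
function colorizeDepth(depths: ArrayLike<number>, maxDepth: number): Uint8ClampedArray {
  if (maxDepth <= 0) {
    throw new Error("maxDepth must be positive");
  }
  const out = new Uint8ClampedArray(depths.length * 4);
  for (let p = 0; p < depths.length; p++) {
    const [r, g, b] = sampleRamp(depths[p] / maxDepth);
    out[p * 4 + 0] = Math.round(r * 255);
    out[p * 4 + 1] = Math.round(g * 255);
    out[p * 4 + 2] = Math.round(b * 255);
    out[p * 4 + 3] = 255; // fully opaque
  }
  return out;
}
```

A multi-stop ramp like this tends to separate mid-range depths better than a plain grayscale image would, which makes the costly, many-bounce regions easier to pick out.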