Google introduced its location tracking service Latitude in February 2009. A year later, in March 2010, I bought my first Android phone: the Google Nexus One. A few days later I enabled Google Latitude on it, and my first check-in was on 31 March 2010 at 16:20:48 CEST. Apart from the times when I was without a smartphone, I have never stopped logging my location since.

Back then, this was a godsend for things like matching photos taken with a proper camera to their location, as phone cameras of the time were of pretty bad quality and digital cameras didn’t have GPS built in. Even in 2023 I used this several times to finally assign a location tag to some still untagged photos from over 10 years ago. It’s also great for filling in work timesheets, as you can easily see when you arrived at a site and when you left again.

Google Latitude was sunset in 2013 but the location tracking feature lived on in Google Maps as Location History, or later Timeline.

php-owntracks-recorder

In 2018, I was looking for a way to take more control over that pretty personal data. After a short search, I found and forked tomyvi’s php-owntracks-recorder, a simple PHP GUI that shows a map of where you were at what time and that works with location data reported via the free OwnTracks app. The OwnTracks app was one of the very few that only needed a low single-digit percentage of precious battery juice per day.

I added support for SQLite3 and various OpenStreetMap-based maps, and I was able to import all historic data from Google as well. My ca. 500 MiB Location History.json resulted in a 200 MiB SQLite3 database. Thanks to WAL mode, adding a new entry was still fast enough, and I was pretty happy with the overall result.
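A minimal sketch of what such an import can look like. It assumes the older Takeout layout, with a top-level `locations` array whose entries carry `timestampMs`, `latitudeE7` and `longitudeE7`. The function name and the reduced table layout are illustrative and not the recorder's actual schema. For a file of several hundred MiB, a streaming JSON parser would be kinder to memory than `json.load`.

```python
import json
import sqlite3


def import_location_history(json_path, db_path):
    """Copy points from a Google Takeout export into a SQLite table.

    Assumed input layout (older Takeout exports):
    {"locations": [{"timestampMs": "...", "latitudeE7": ...,
                    "longitudeE7": ..., "accuracy": ...}, ...]}
    The table layout below is illustrative only.
    """
    with open(json_path, encoding="utf-8") as fh:
        records = json.load(fh).get("locations", [])

    con = sqlite3.connect(db_path)
    try:
        # Switch the database file to write-ahead logging.
        con.execute("PRAGMA journal_mode=WAL")
        con.execute(
            "CREATE TABLE IF NOT EXISTS locations ("
            "epoch INTEGER, latitude REAL, longitude REAL, accuracy INTEGER)"
        )
        rows = [
            (
                int(rec["timestampMs"]) // 1000,  # milliseconds -> seconds
                rec["latitudeE7"] / 1e7,          # E7 integers -> degrees
                rec["longitudeE7"] / 1e7,
                rec.get("accuracy"),
            )
            for rec in records
            # Skip entries without coordinates.
            if "latitudeE7" in rec and "longitudeE7" in rec
        ]
        with con:  # one transaction for the whole batch
            con.executemany("INSERT INTO locations VALUES (?, ?, ?, ?)", rows)
        return len(rows)
    finally:
        con.close()
```

Wrapping the whole batch in a single transaction matters far more for bulk import speed than the journal mode; WAL mainly helps the later single-row inserts.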

PhoneTrack

Five years later, I figured there must be a similar app that is still in active development and more of a “standard” than what I was using. My research ended with me choosing PhoneTrack, as I already had a small NextCloud installation on my webspace. PhoneTrack can work with data packets from OwnTracks as well, so apart from changing the URL and adjusting the device ID, nothing else was needed.

As I used NextCloud with SQLite3 as well, moving over the data was as easy as connecting to the NextCloud db, attaching the previous database into the session and running a pretty straightforward INSERT..SELECT command.

    -- Make the old recorder database available under the schema name "ot"
    ATTACH DATABASE '/tmp/owntracks.db3' AS ot;

    -- Copy all points of tracker "mb" to PhoneTrack device 1
    INSERT INTO oc_phonetrack_points
        (deviceid, lat, lon, timestamp, accuracy, altitude, batterylevel, useragent, speed, bearing)
    SELECT
        1, latitude, longitude, epoch, accuracy, altitude, battery_level, 'OwnTracks', velocity, heading
    FROM ot.locations
    WHERE tracker_id = 'mb';
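The same copy can be scripted, for example to repeat it for several trackers. This is only a sketch using Python's built-in `sqlite3` module; the function name `copy_points` and its parameters are made up for illustration, while the table and column names are the ones from the statement above.

```python
import sqlite3

COPY_SQL = """
INSERT INTO oc_phonetrack_points
    (deviceid, lat, lon, timestamp, accuracy, altitude,
     batterylevel, useragent, speed, bearing)
SELECT ?, latitude, longitude, epoch, accuracy, altitude,
       battery_level, 'OwnTracks', velocity, heading
FROM ot.locations
WHERE tracker_id = ?
"""


def copy_points(nextcloud_db, owntracks_db, device_id, tracker_id):
    """Copy one tracker's points from the recorder DB into PhoneTrack's table.

    Returns the number of rows inserted.
    """
    con = sqlite3.connect(nextcloud_db)
    try:
        # ATTACH must run outside a transaction; none is open at this point.
        con.execute("ATTACH DATABASE ? AS ot", (owntracks_db,))
        with con:  # commit on success, roll back on error
            cur = con.execute(COPY_SQL, (device_id, tracker_id))
        return cur.rowcount
    finally:
        con.close()  # closing the connection also detaches "ot"
```

A call like `copy_points("owncloud.db", "/tmp/owntracks.db3", 1, "mb")` would correspond to the SQL shown above.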

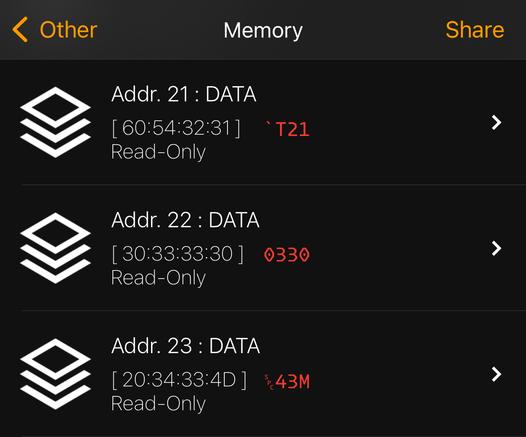

Now, after processing an incoming location record from OwnTracks, PhoneTrack gathers the latest locations of your NextCloud friends and sends them back for OwnTracks to display. However, with my 13 years of location history, the database took several seconds to give a result for my freshest record.

Since I had switched to an iPhone in the meantime, this was often enough to exceed the time iOS allots for background tasks, so the records would pile up in the queue on my phone. I then had to open the app and let them sync to PhoneTrack. This wasn’t ideal, so I disabled the code for returning the friends list in my installation. It worked great after that.

Traccar

Upon reading a recent discussion on Hacker News, I was reminded of Traccar again. As I’m pretty deep in the Apple ecosystem and perfectly happy with their native apps and iCloud, I never used my NextCloud installation. And I figured if I could replace PhoneTrack, I could get rid of my NextCloud installation and thus have one huge do-it-all app less to take care of.

I had dismissed Traccar before because it is a Java application and I usually preferred “traditional” PHP apps I can just plop onto my UberSpace server. Now that I’m using Docker everywhere I can, I welcomed that Traccar can be installed via Docker these days.

So I pulled the default traccar.xml from GitHub, modified it for the desired database and with a simple Docker Compose file like this, Traccar was up and running in seconds:

    version: "3.8"
    services:
      db:
        image: mariadb:latest
        environment:
          - MARIADB_AUTO_UPGRADE=1
          - MYSQL_RANDOM_ROOT_PASSWORD=1
          - MYSQL_DATABASE=traccar
          - MYSQL_USER=traccar
          - MYSQL_PASSWORD=traccar
        volumes:
          - /opt/docker/traccar/mysql:/var/lib/mysql:Z
          - /opt/docker/traccar/mysql-conf:/etc/mysql/conf.d:ro
        restart: unless-stopped
      server:
        image: traccar/traccar:latest
        restart: unless-stopped
        depends_on:
          - db
        volumes:
          - /opt/docker/traccar/conf/traccar.xml:/opt/traccar/conf/traccar.xml:ro
          - /opt/docker/traccar/logs:/opt/traccar/logs:rw
        ports:
          - 8082:8082/tcp
          - 5144:5144/tcp
          #- 5000-5150:5000-5150/tcp
          #- 5000-5150:5000-5150/udp
        labels:
          traefik.enable: "true"
          traefik.http.routers.traccar.rule: Host(`traccar.mydomain.tld`)
          traefik.http.routers.traccar.entrypoints: websecure
          traefik.http.routers.traccar.tls: "true"
          traefik.http.routers.traccar.tls.certresolver: le
          traefik.http.routers.traccar.service: traccar
          traefik.http.services.traccar.loadbalancer.server.port: "8082"
          traefik.http.routers.traccar-ot.rule: Host(`traccar.mydomain.tld`)
          traefik.http.routers.traccar-ot.entrypoints: traccar
          traefik.http.routers.traccar-ot.tls: "true"
          traefik.http.routers.traccar-ot.tls.certresolver: le
          traefik.http.routers.traccar-ot.service: traccar-ot
          traefik.http.services.traccar-ot.loadbalancer.server.port: "5144"

(I had to create the traccar entrypoint in my Traefik setup to allow incoming location reports from OwnTracks to reach port 5144 of the Traccar server. Traefik also handles HTTPS for the incoming OwnTracks connection.)
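To test that entrypoint without waiting for the phone, a hand-built record can be posted to it. The sketch below assumes OwnTracks' HTTP mode, where each report is a small JSON document with fields such as `_type`, `lat`, `lon`, `tst` (Unix time) and `tid`. Both helper functions are hypothetical, and the URL and port to post to depend on how the Traefik entrypoint is exposed, so they are left to the caller.

```python
import json
import urllib.request


def build_owntracks_location(lat, lon, tst, tid, batt=None, acc=None):
    """Build an OwnTracks-style location message as a plain dict."""
    msg = {
        "_type": "location",
        "lat": lat,
        "lon": lon,
        "tst": int(tst),  # Unix timestamp in whole seconds
        "tid": tid,       # short tracker ID, e.g. "mb"
    }
    # Optional fields are only included when a value is known.
    if batt is not None:
        msg["batt"] = batt
    if acc is not None:
        msg["acc"] = acc
    return msg


def post_location(url, msg, timeout=10):
    """POST the message as JSON and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=json.dumps(msg).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Whether the server accepts the record also depends on a matching device identifier existing in Traccar, which this sketch does not check.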

Import PhoneTrack history to Traccar

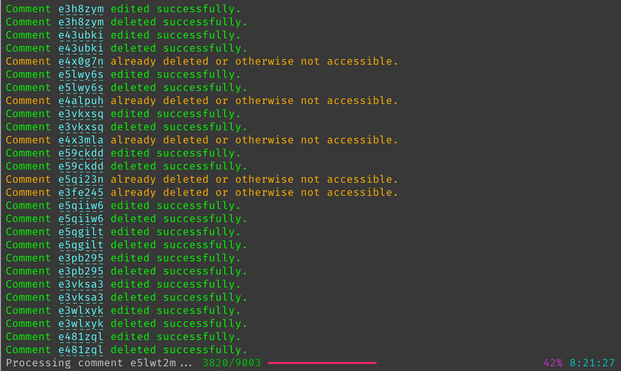

Now that Traccar was happily running, I wanted to import my history from PhoneTrack into it. And luckily, I wasn’t the first with that idea. So it was as easy as exporting all PhoneTrack data to a CSV file, like:

    sqlite3 --csv --header owncloud.db "SELECT * FROM oc_phonetrack_points" > oc_phonetrack_points.csv

Then I wrote a MySQL/MariaDB query to import that CSV into Traccar’s database. As I wanted to keep the metadata, especially the battery level, I had to use some trickery to get a valid JSON structure for Traccar’s attributes column.

    LOAD DATA LOCAL INFILE '/tmp/oc_phonetrack_points.csv'
    INTO TABLE tc_positions
    FIELDS TERMINATED BY ','
    LINES TERMINATED BY '\n'
    IGNORE 1 ROWS
    (
        @id, @deviceid, @lat, @lon, @timestamp, @accuracy, @satellites,
        @altitude, @batterylevel, @useragent, @speed, @bearing
    )
    SET
        protocol="owntracks",
        deviceid=1,
        servertime=FROM_UNIXTIME(@timestamp),
        devicetime=FROM_UNIXTIME(@timestamp),
        fixtime=FROM_UNIXTIME(@timestamp),
        valid=1,
        latitude=@lat,
        longitude=@lon,
        altitude=@altitude,
        speed=@speed,
        course=@bearing,
        accuracy=@accuracy,
        attributes=JSON_COMPACT(
            JSON_MERGE_PATCH(
                IF(LENGTH(@batterylevel)>0, CONCAT("{\"batteryLevel\":", FLOOR(@batterylevel), "}"), "{}"),
                IF(LENGTH(@bearing)>0, "{\"motion\":true}", "{}")
            )
        );
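The JSON trickery in the `attributes` expression is easier to follow when written out procedurally. The following sketch mirrors that logic for a single CSV row: an empty field contributes nothing, a battery level is rounded down, and a present bearing is taken as a sign of motion. The function name is made up for illustration.

```python
import json
import math


def traccar_attributes(batterylevel, bearing):
    """Build the compact attributes JSON from two raw CSV field strings."""
    attrs = {}
    if batterylevel != "":
        # Same as FLOOR(@batterylevel) in the SQL statement.
        attrs["batteryLevel"] = math.floor(float(batterylevel))
    if bearing != "":
        # Any recorded bearing is treated as "device was moving".
        attrs["motion"] = True
    # Compact separators avoid whitespace, like JSON_COMPACT does.
    return json.dumps(attrs, separators=(",", ":"))


print(traccar_attributes("87.5", "120"))  # {"batteryLevel":87,"motion":true}
print(traccar_attributes("", ""))         # {}
```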

And one restart of Traccar later, all my old records were there. Time to decommission my NextCloud installation.

https://blog.mbirth.uk/2024/01/06/tracing-my-steps-logging-where-ive-been.html

#google #gps #latitude #location #tracking