It did resolve after a bit.

Noting for the record.

Recursing a lot on AI, humanity & the optimal | Driven by the goal of preventing catastrophic AI outcomes

X.com (Twitter) says I've hit my daily post limit after just 2 posts & 10 comments. It keeps asking me to add a phone number (which I added months ago).

X.com (Twitter) flashed a message about needing phone number verification when I attempted to repost this. The second attempt worked. Just noting this.

It is perhaps relevant to note that the Dot Com boom and bust reshaped the tech industry, leading to innovation and to companies that survived the crash. Internet technology continued to advance, and it still took over the world.

#aibubble #ai #agi #llms #openai #anthropic #xai #grok #chatgpt #deepmind #gemini #claude #mistral #deepseek #tencent #kimi #qwen #nvidia

@poppyhaze

Damn, what are the bees we have in the Arctic building?!

(Arctic bees build hives underground)

@Los

Uncertainty will set us free, if accepted and not taken for granted.

@jonsnow

And even some Linux refugees trailing off to the BSDs. Seriously, the frequency of critical core-library updates these past weeks...

I suspect this will push many to AI-conducted OSes, or even pure AI OSes (where code gremlins will no longer be jokey dev fantasies).

@0x2ba22e11

And these types of issues could be mitigated with better tool-calling/scaffolding and auto-formalization (which has only started to affect the frontier models). There are also many minimally funded novel architectures with promise, & new hardware could expose latent capabilities.

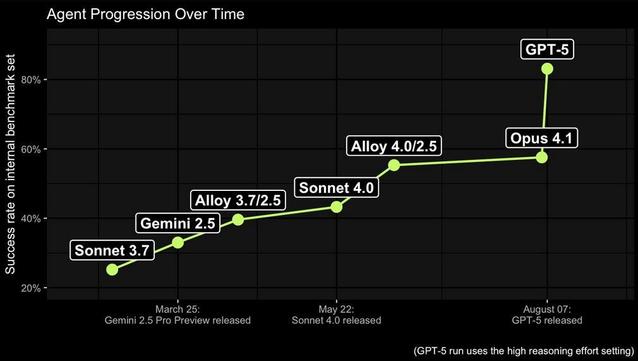

Zooming out, advancement towards AGI||ASI continues (on a trajectory that is likely to lead to poor & catastrophic outcomes).

Of course, I certainly hope you're right!

@0x2ba22e11

Fair. 'AI bubbles' will pop in an 'AI bubbling reaction', which seems like a better model.

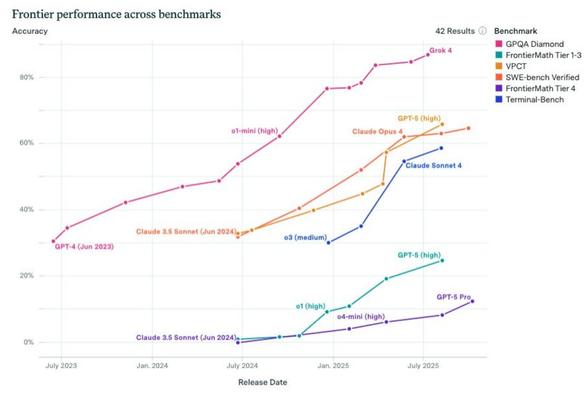

Perhaps this is a general scaling decline, though it is more likely due to regurgitated synthetic training data. A reminder that free models shouldn't be seen as the frontier.

AI progress is differential: local slowing does not necessarily mean general slowing. It is now a trillion-dollar bet.

AgiDefinition.ai

Points to better understand:

- Note specific category weights

- Overall AGI scores alone can be highly misleading

- Goodhart's law

- Gain in one category could multiply, or reduce, performance in another.

- The chart's max is 10%
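To illustrate why an overall score alone can mislead, here is a minimal sketch that assumes the overall AGI score is a plain sum of per-category scores, each capped at 10% (matching the chart's max). The category names and all numbers below are hypothetical placeholders, not values from AgiDefinition.ai.

```python
# Minimal sketch: overall score as a plain sum of per-category scores.
# ASSUMPTION: ten categories, each contributing at most 10 percentage points.
# Category names (cat_0..cat_9) and all scores are hypothetical.

CATEGORY_MAX = 10.0  # assumed per-category cap, in percentage points


def overall_score(per_category: dict[str, float]) -> float:
    """Sum per-category scores after checking each lies in [0, CATEGORY_MAX]."""
    for name, score in per_category.items():
        if not 0.0 <= score <= CATEGORY_MAX:
            raise ValueError(f"{name}: {score} outside [0, {CATEGORY_MAX}]")
    return sum(per_category.values())


# Two hypothetical models with very different capability profiles...
balanced = {f"cat_{i}": 5.0 for i in range(10)}
lopsided = {f"cat_{i}": (10.0 if i < 5 else 0.0) for i in range(10)}

# ...report the same overall score.
print(overall_score(balanced), overall_score(lopsided))  # 50.0 50.0
```

Both hypothetical models report 50%, yet one scores zero in half of the categories, which is exactly what the headline number hides.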

Defining AGI:

"AI that can match or exceed the cognitive versatility and proficiency of a well-educated adult."

AGI scores: 27% (GPT-4) -> 58% (GPT-5)

(base models alone, not AI systems)

Zooming out, advancement towards AGI||ASI continues on a trajectory that is likely to lead to poor & catastrophic outcomes.

Fixating on specific outcomes, on LLMs, or on the non-realization of short timelines offered as speculative examples distracts from the momentum of AI advancement (+ adjacent tech), which is backed by epic global investments.

#ai #artificialintelligence #agi #asi #superintelligence #rsi #stargate #intelligenceexplosion #acc #tech #technology

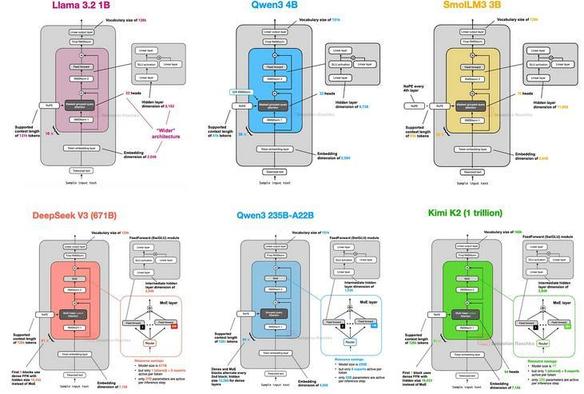

What qualifies as an 'LLM'?

StripedHyena, Large Concept Models, Hierarchical Reasoning Models? Agentic & LLM-based systems?

LLMs used not to mean transformers.

Or merely a quick stepping stone to an AGI/ASI arch.

Given the intensity of the risk, it's a deep mistake to ignore the possibility of shorter AGI/ASI timelines. The risk needs to be taken seriously.

#artificialintelligence #llms #ai #agi #asi #superintelligence

@remixtures

What about Helen Keller or born-blind folk?

Lack or lacking?

Frontier multimodal models employ visual embeddings. Have you seen Gemini 3 or Genie 3?

Regardless, it's nuanced or moot since they can utilize tooling and other models. AI models are better seen as AI systems.

AGI is ill-defined, and it doesn't need to reach 100% across all human domains to hit the point where it can bootstrap RSI (recursive self-improvement) to fill in any gaps on the way to ASI. The blurry, invisible line to AGI will likely not be recognized before it slips beyond the human cognitive horizon.

@kkarhan Thanks for engaging; criticism is very welcome at this time.

@remixtures

Certainly hope LLMs are a flop and AGI is decades away.

Deep learning outcomes were scoffed at for many years by many experts, especially ML researchers, even after transformers took off, and even now.

Regardless, breakthroughs continue to happen, and we have not yet seen where agents, executive CoT, neurosymbolics or autoformalization will take us. There is far too much money riding on bets that have tied up the world as a whole for us to simply dismiss the risk of this AI era.

@remixtures

Yet one can't deny the extreme, rapid progress, nor the mass deployment (US military contracts for one, Stargate Norway another).

Also, humans 'hallucinate', make basic mistakes & often have poor 'world models'. Hybrid models/systems & LLM-based model scaffolding are likely to carry LLMs far. Diminishing returns mean more desperate architectures, like RSI, which is already being toyed with. Further, LLMs could still be a stepping stone to AGI. Their risk must be taken seriously.

@kkarhan

Yes, the nature of blockchain does not lend itself to performance.

FrameworkZero is not DeFi, it doesn't have a tradable coin, is not a public DAO & does not have a free-for-all miner economy.

It's highly ambitious and not convenient, but it does set forth an actionable plan for safety in global frontier AI development. It is not without shortcomings, & it relies on international accords, yet-to-exist specialized compute research & AI tech governance. Nor does it solve inference governance or alignment.