what is softmax #activationfunction

https://www.youtube.com/watch?v=yaH743wXFYo

#ActivationFunction

...

Addendum

Forward-Forward Algorithm

https://medium.com/@Mosbeh_Barhoumi/forward-forward-algorithm-ac24d0d9ffd

The forward-forward algorithm uses a custom loss function that compares the mean square value of the activations for positive and negative samples.

The network optimizes this loss function by performing gradient calculations and optimization steps on the trainable weights of the dense layer.

...

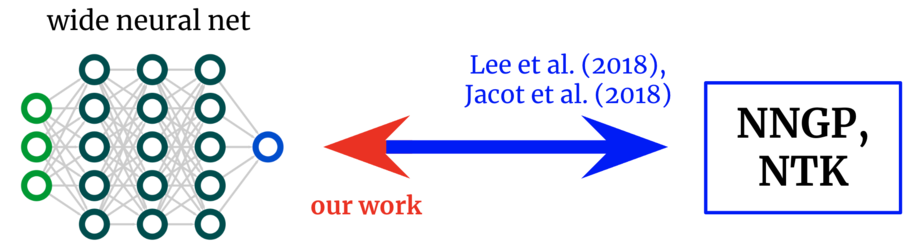

towards first-principles architecture design – The Berkeley Artificial Intelligence Research Blog https://triangleagency.co.uk/towards-first-principles-architecture-design-the-berkeley-artificial-intelligence-research-blog/?utm_source=dlvr.it&utm_medium=mastodon #TheTriangleAgencyNews #activationfunction #architecture #Artificial

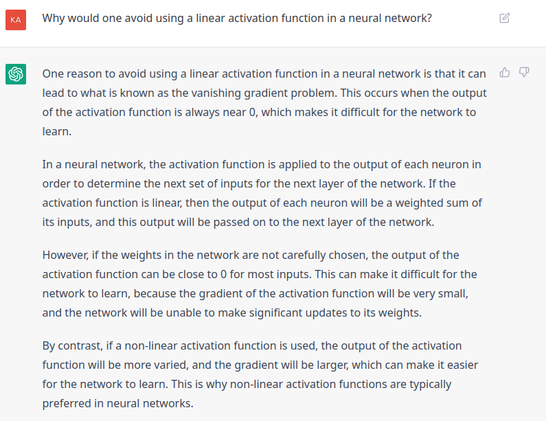

5. "Why would one avoid using a linear #ActivationFunction in a #NeuralNetwork?" #AI

No, #ChatGPT3 #GPT3. The derivative of a linear activation function is *always* positive; it has no vanishing gradient. The problem it has is that you can't backpropagate (constant derivative) and can mathematically reduce a network with linear functions down to a single layer.