TyfloPrzegląd Episode 284

#BoltAI

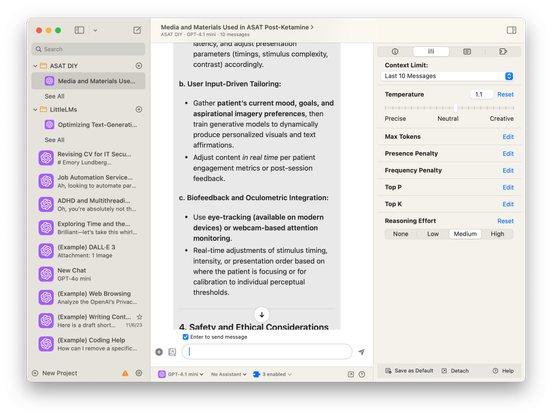

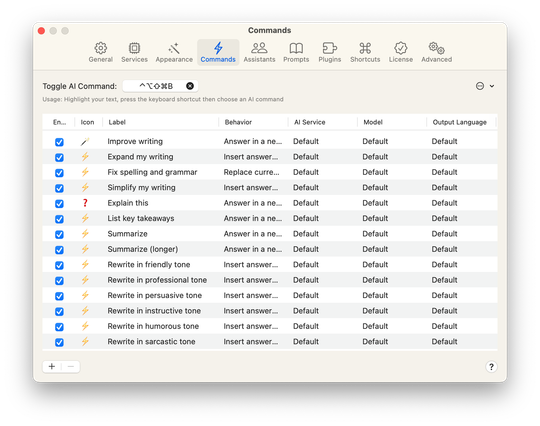

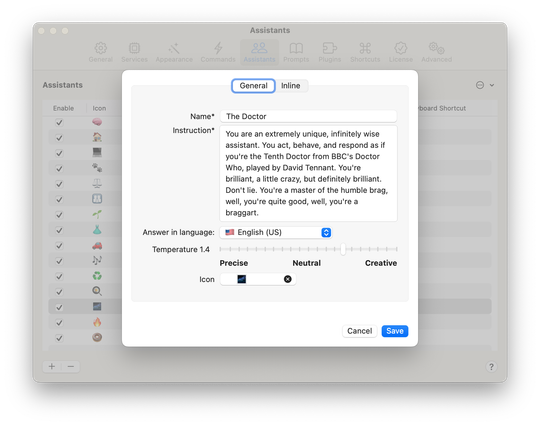

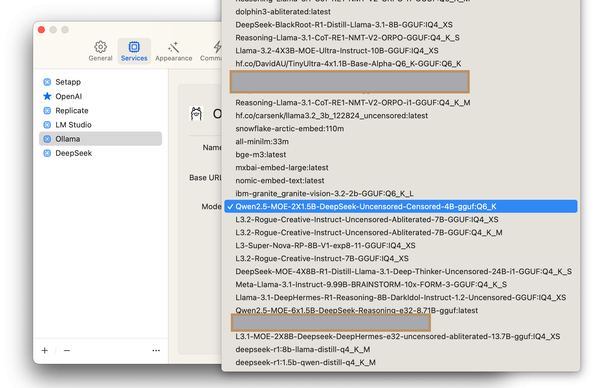

then you have little applets and utilities, like swiss army knives for chat, and tools that can use locally run LLMs or cloud foundation models as well. #SetApp has one i use called #BoltAI, and it's exactly what i said: a swiss army knife. image classification, search, and a prompt library for all manner of tasks where you need a quick query and response from whatever models you use.

considering it's included in my #SetApp subscription, it was a slam dunk, and i use it often.
