Good question. Surely all the bug reports and the interface and workflow improvements contributed immensely, as did the drive to improve the CNNs doing the segmentation. As for the actual training data, I doubt it: the nature of the images is dramatically different.

First, the retina EM volume was stained for extracellular contrast, so synapses and intracellular organelles weren't visible.

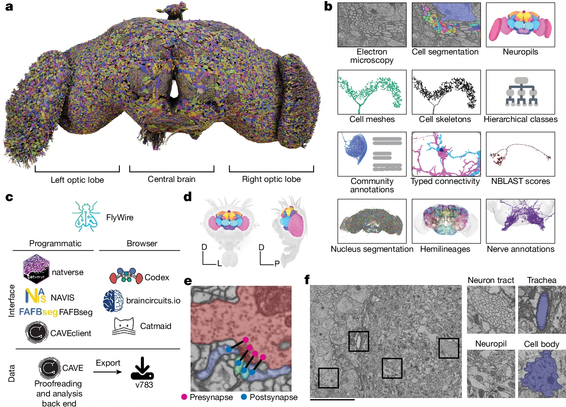

Second, the resolution and imaging modality are vastly different: the retina was imaged with SBEM at about 12x12x25 nm per voxel, whereas the female adult #Drosophila brain (#FAFB) was imaged with ssTEM at 4x4x40 nm per voxel. Both are anisotropic, but very differently so.
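To put rough numbers on "very differently so", here is a back-of-the-envelope sketch using only the voxel sizes quoted above. The helper names are hypothetical, and defining anisotropy as section thickness (z) divided by lateral voxel size (x, y) is my own simplifying choice for illustration.

```python
# Voxel sizes (x, y, z) in nanometres, as quoted in the post.
VOXEL_SIZES_NM = {
    "retina (SBEM)": (12, 12, 25),
    "FAFB (ssTEM)": (4, 4, 40),
}


def anisotropy(voxel):
    """Hypothetical metric: z size over mean lateral size (1.0 = isotropic)."""
    x, y, z = voxel
    return z / ((x + y) / 2)


def voxel_volume(voxel):
    """Volume of a single voxel in cubic nanometres."""
    x, y, z = voxel
    return x * y * z


for name, voxel in VOXEL_SIZES_NM.items():
    print(f"{name}: z/xy = {anisotropy(voxel):.1f}, "
          f"voxel volume = {voxel_volume(voxel)} nm^3")
```

By this crude measure the retina voxels are about 2x longer in z than laterally, while the FAFB voxels are 10x longer, so FAFB is nearly 5 times more anisotropic even though each of its voxels is about 5.6 times smaller in volume (640 vs 3600 nm^3).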