@cragsand@mastodon.social

Discussion of Twitch's moderation AI spread to Reddit, where I clarified some questions that came up:

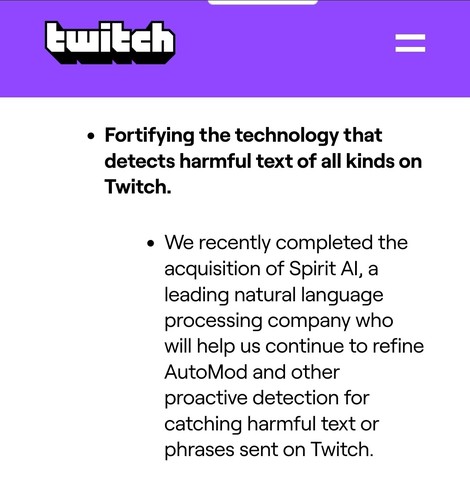

Since this global AI AutoMod has been an undocumented "feature" of Twitch chat for a while now, a lot of the conclusions I've listed in the thread are deduced from watching active chatters get suspended and then tell their stories on Discord and social media.

Luckily, most people can get their account reinstated after appealing, but that relies on an actual human looking at the VOD at the relevant timestamp and taking the time to figure out what actually happened, including the full context of what was going on on stream at that moment. I've seen many apologies from Twitch moderation sent by email after appeals, but whether you get unbanned, get an apology or stay banned seems mostly random.

Being banned like this also makes you much less likely to participate and joke around in chat in the future, which leads to a much worse chat experience.

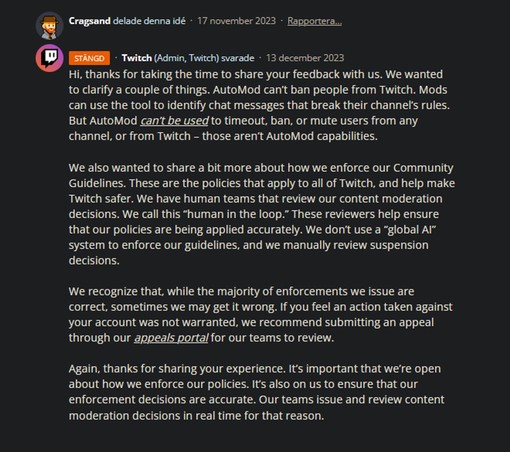

Some discussions argue that all AI-flagged moderation events are actually reviewed by humans (just poorly), and that is a possibility. Because Twitch isn't transparent about how this works, it's very difficult to know for sure how these reviews are done. A manual report combined with an AI flag almost certainly results in a ban. One thing is certain, though: too much power is given to AI to judge these cases.

Seeing as permanent suspensions can be dished out in seconds to accounts that have held active paid subscriptions on Twitch for YEARS, either the reviewers are doing a lousy job or it's mostly done by AI. Even worse, if the reviewers are underpaid workers paid by "number of cases solved per hour", they have little incentive to take the time to gather more context.

If Twitch gets called out for this, they likely have little incentive to admit it, since it may even violate consumer regulations in some countries. Getting a response saying they "Will oversee their internal protocol for reviewing" may be enough of a win if it leads to them actually turning this off. Since there is no transparency, we can't really know for sure.

A similar thing happened on YouTube at the start of 2023, when they went through the speech-to-text transcripts of all old videos and issued strikes retroactively. It made a lot of old channels disappear, especially those with hours of VOD content where something could get picked up and flagged by AI. For the communities I'm engaged in, it meant relying less on YouTube for saving Twitch VODs. MoistCritical brought it up about a year ago, since it also affected monetization of old videos.

#Twitch #Moderation #BadAI #AI #Enshittification #AutoMod #AIAutoMod #ModMeta #ModerationMeta