My book review of "Lexical Variation and Change" by Geeraerts et al. is out on LINGUIST List. Check it out here:

https://linguistlist.org/issues/35-3402/

#linguistics #distributionalSemantics


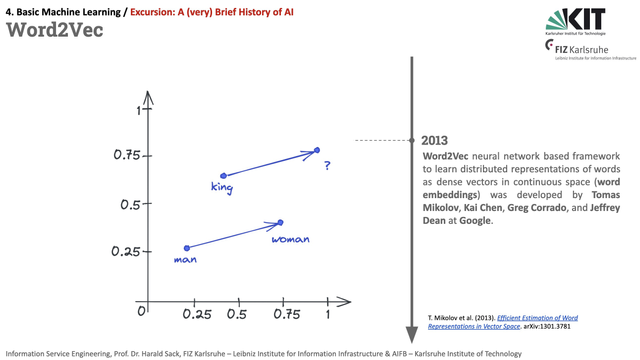

In 2013, Mikolov et al. (from Google) published word2vec, a neural-network-based framework for learning distributed representations of words as dense vectors in a continuous space, also known as word embeddings.

T. Mikolov et al. (2013). Efficient Estimation of Word Representations in Vector Space. arXiv:1301.3781

https://arxiv.org/abs/1301.3781

#HistoryOfAI #AI #ise2024 #lecture #distributionalsemantics #wordembeddings #embeddings @sourisnumerique @enorouzi @fizise
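One of the two architectures in the word2vec paper, skip-gram, is trained to predict the words around a given center word. As a rough illustration of the input side only, the sketch below generates (center, context) training pairs from a token list. The function name `skipgram_pairs` and the `window` parameter are hypothetical names chosen for this example. No neural network is trained here.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for each token and its neighbours.

    `window` is the maximum distance between center and context word.
    Both names are illustrative assumptions, not taken from the paper.
    """
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


tokens = "you shall know a word by the company it keeps".split()
pairs = skipgram_pairs(tokens, window=1)
print(pairs[:3])
```

In an actual skip-gram model, pairs like these would be fed to a small network whose learned weights become the word vectors.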

Besides Wittgenstein, we also quote linguist John Rupert Firth (1890–1960) with "You shall know a word by the company it keeps!" when introducing the principles of distributional semantics as the foundation for word embeddings and large language models.

J.R. Firth (1957), A synopsis of linguistic theory, Studies in linguistic analysis, Blackwell, Oxford: https://cs.brown.edu/courses/csci2952d/readings/lecture1-firth.pdf

#lecture #llm #nlp #distributionalsemantics @fizise @fiz_karlsruhe @enorouzi @sourisnumerique @shufan #wittgenstein
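Firth's dictum can be made concrete with a toy count-based model: represent each word by the words that occur near it, then compare those context vectors. The sketch below is a minimal illustration under stated assumptions. The four-sentence corpus is invented for this example, and `cooccurrence_vectors` and `cosine` are hypothetical helper names, not part of any lecture material.

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            start = max(0, i - window)
            end = min(len(tokens), i + window + 1)
            for j in range(start, end):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Invented toy corpus (an assumption for illustration only).
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the mouse",
    "the dog chases the ball",
]
vecs = cooccurrence_vectors(corpus)
sim_cat_dog = cosine(vecs["cat"], vecs["dog"])
sim_cat_milk = cosine(vecs["cat"], vecs["milk"])
print(sim_cat_dog > sim_cat_milk)
```

In this toy corpus, "cat" and "dog" keep similar company, so their vectors end up closer to each other than "cat" is to "milk". Dense embeddings such as word2vec learn a compressed version of the same kind of signal.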

In lecture 05 of our #ise2024 lecture series, we introduce the concept of distributional semantics, referring (among others) to Ludwig Wittgenstein and his approach to the philosophy of language, and combine it with the idea of word vectors and embeddings.

lecture slides: https://drive.google.com/file/d/1WcVlkcUr33u5JmFcadkwtePpXJrv03n2/view?usp=sharing

#wittgenstein #nlp #wordembeddings #distributionalsemantics #lecture @fiz_karlsruhe @fizise @enorouzi @shufan @sourisnumerique #aiart #generativeai

"You shall know a word by the company it keeps." Distributional semantics as the basis for self-supervised learning, as in today's large language models. This is what my colleague @MahsaVafaie and I are going to talk about in this #kg2023 lecture excursion.

OpenHPI video: https://open.hpi.de/courses/knowledgegraphs2023/items/4fwf0437tRYK49o8Xd18Eg

youtube video: https://www.youtube.com/watch?v=EYAY8nmhpdg&list=PLNXdQl4kBgzubTOfY5cbtxZCgg9UTe-uF&index=61

slides: https://zenodo.org/records/10185262

@fiz_karlsruhe @fizise @tabea @sashabruns @enorouzi #semantics #distributionalsemantics #llm #llms #cooccurrence #wittgenstein

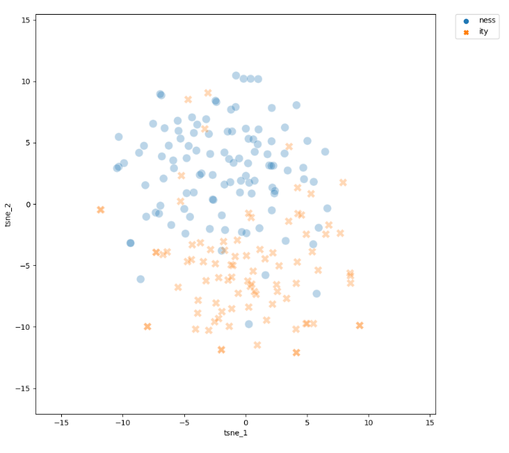

Getting ready to leave for #iclc16 in Düsseldorf, here is a small teaser for my talk on Tuesday at 14:45 on the #affix rivalry between -ity and -ness in #English:

Why do some adjectives take -ity (insular -> insularity), while others take -ness (red -> redness)? Many factors have been considered; I use #distributionalSemantics to explore the role of the adjective's meaning. When the vectors are mapped onto a two-dimensional space with t-SNE, a dimensionality reduction technique, the resulting visualization suggests that adjective meaning might indeed be a highly relevant factor. For example, even among adjectives with the same ending -ive, the bases of those taking -ity (e.g. narrativity) and those taking -ness (e.g. distinctiveness) fall into two clear clusters.

Looking forward to seeing some of you there :)

#wordformation

The last #NLP chapter of our #ISE2023 lecture last week was on distributional semantics and word embeddings. Of course, Wittgenstein had to be mentioned...

#lecture #distributionalsemantics #wittgenstein #stablediffusionart #creativeAI

Today's #ise2023 lecture focused on Naive Bayes classification, POS tagging, and distributional semantics with word embeddings.

https://drive.google.com/drive/folders/11Z3_UGQjGONyHyZbj_kIdgT-LglZH4Ob

#nlp #lecture #classification #wordembeddings #languagemodels #word2vec #hiddenMarkovModel #distributionalsemantics @fizise @KIT_Karlsruhe #stablediffusionart #creativeAI

I somehow just learned about semantic folding

Still trying to learn more about it, but what's really messing with my head is that its word embeddings are matrices. Are there any interesting connections to be made between this approach and things like DisCoCat?

#NLP #DistributionalSemantics #DisCoCat

https://en.wikipedia.org/wiki/Semantic_folding

https://en.wikipedia.org/wiki/DisCoCat