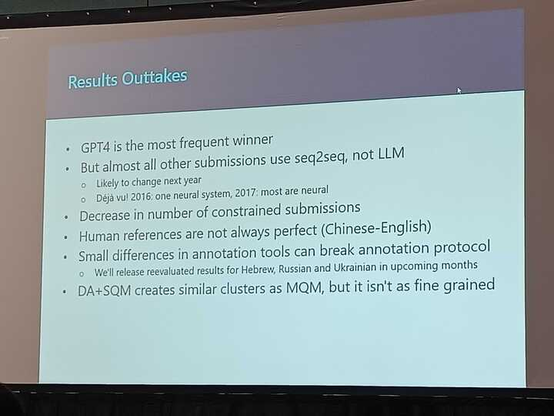

Findings from #WMT23

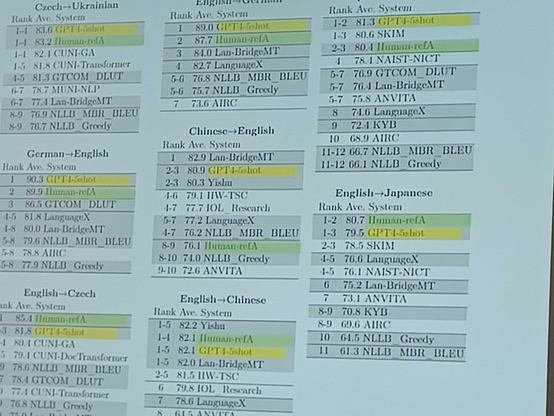

Our Chat4 friend is in the winning group across tasks

Most submissions still train from scratch

Fewer constrained (low-resource) submissions than before

More test suite submissions!

Low-resource results TBD (technical issue)

#EMNLP2023 #WMT #neuralEmpty #LLMs

#neuralEmpty

Hello, class #NLP A1

How many possible causes can you think of for this spectacular failure?

#translation #linguistics

#HumanLevelTranslation #neuralempty #NLProc #NLP

Few-shot learning nearly matches traditional machine translation

https://arxiv.org/abs/2302.01398

#enough2skim #NLProc #neuralEmpty

3 hypothesized causes of hallucinations went in

only 2 prevailed

Having found how networks behave while hallucinating, they filter hallucinations (with great success)

https://arxiv.org/abs/2301.07779

#NLProc #neuralEmpty #NLP #deepRead

Bold statement (need to think about it more), especially when coming from a machine translation person.

I’d claim MT was no less revolutionary once it became pervasive in industry. But @marian_nmt seems to dismiss that now, given ChatGPT.

At #conll / #EMNLP, talk to me about:

ColD Fusion & https://ibm.github.io/model-recycling/

BabyLM shared task

https://www.label-sleuth.org/

Enhancing decoders with syntax

And work I guided (talk to them too):

Estimating #neuralEmpty quality from the source only

Controlling structure in - neuron level

Details: