psa: "TikTokSpider" does not obey robots.txt if you're looking for something to block today (if you haven't blocked it already)

#webadmin

psa: that "Thinkbot" user agent that you may have seen in your server logs does not obey robots.txt and should be blocked. its user agent suggests blocking its ip, but it actually uses multiple ip addresses, so i would instead add a rule in your server config to give it a 403 on anything it tries to access
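for anyone who fronts their site with python instead of a plain web server config, here's a minimal sketch of the same idea as a WSGI wrapper. it's an illustration and not a tested production rule: the name `block_bad_agents` and the pattern list are my own assumptions, and the only thing taken from the posts is the two bot names.

```python
import re

# Bot names taken from the posts above; the pattern itself is an assumption.
BLOCKED_AGENTS = re.compile(r"Thinkbot|TikTokSpider", re.IGNORECASE)


def block_bad_agents(app):
    """Wrap a WSGI app so that blocked user agents get a 403 on every path."""

    def middleware(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "")
        if BLOCKED_AGENTS.search(user_agent):
            # Match on the user agent, not the ip, since the bot rotates ips.
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden\n"]
        # Everyone else is passed through to the real application.
        return app(environ, start_response)

    return middleware
```

matching on the user agent string only works for as long as the bot keeps announcing itself, so treat it as a cheap first filter.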

Hey people with websites:

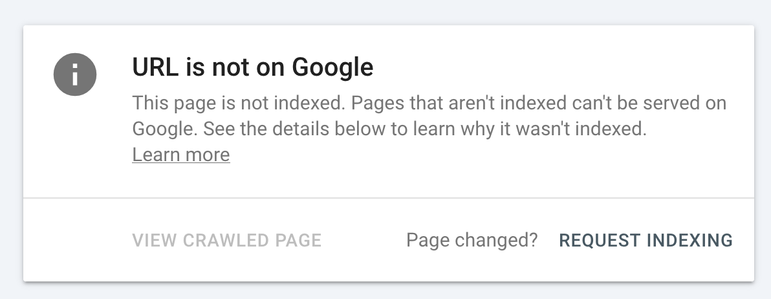

I noticed that the URL Inspection tool in Google Search Console rather consistently claims that a 'URL is not on Google', even though anyone can find that page using Google Search.

Why?!

I actually trusted the (very slow) tool and wasted a lot of time adding pages manually, because Google also refuses to check the sitemap.xml on a regular basis.

The Let's Encrypt API is having trouble. 😄 The current status is orange: it is partly working but still recovering from high traffic. 😄 I want to set up my next Mastodon instance, which will maybe last for ≤ 1 hour lol 😄. If God wills, He will let my instance last for ≥ 1 month. 😄

#LetsEncrypt #Mastodon #Fediverse #WebAdmin #TechHumor #SSL #API #WebHosting #ShortLivedInstance #SysAdminLife #DevOps #WebSecurity #CertificateProblems #HighTraffic #ExperimentalHosting

seems like a lot of the web scanning bots (the ones searching for admin login pages) are switching to a Chrome/121 user agent

* note: the Chrome/129 web bots are still present, and afaict they are not looking for admin login pages or exploits. they seem to be trying to scrape all of the site's content, probably for an llm or something (conjecture), but why they would go to the trouble of setting that up through what appears to be tor is beyond me

i also found out that googlebot (the legit one operating from google's address space) will occasionally use a user agent of Chrome/99, despite using a more up to date ua most of the time

looking at the unusual Chrome/129 traffic again, it's definitely some kind of botnet: it's using hundreds of mostly unique ips, and there are periodic (hourly?) surges of requests. i'm sure there are some real people with outdated browsers getting caught by my apache rule, so i'm glad i didn't just do a straight block and went with a redirect page instead
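the actual apache rule isn't shown here, so as a rough illustration of "redirect instead of block", here's a sketch in python as a WSGI wrapper. the notice page path, the pattern, and the function name are all hypothetical. the one detail worth copying is the check that skips the notice page itself, otherwise a matching browser would loop forever.

```python
import re

# Hypothetical values: the post names neither the notice page nor the exact rule.
NOTICE_PATH = "/browser-check.html"
SUSPECT_AGENT = re.compile(r"Chrome/129\.")


def redirect_suspect_agents(app):
    """Send suspect user agents to a notice page instead of refusing them."""

    def middleware(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "")
        path = environ.get("PATH_INFO", "")
        # Skip the notice page itself so real visitors do not hit a redirect loop.
        if SUSPECT_AGENT.search(user_agent) and path != NOTICE_PATH:
            start_response("302 Found", [("Location", NOTICE_PATH)])
            return [b""]
        return app(environ, start_response)

    return middleware
```

a real person on an outdated browser lands on a page that can explain what happened, while a scraper just gets a useless page.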

additionally, Mozilla/4.0 is only used by Internet Explorer in compatibility mode, so you may want to block that as well, since all versions of IE are deprecated at this point and shouldn't be used for normal web browsing. most versions of IE can't even connect to modern websites anymore: IE11 is the only one with TLSv1.2 enabled by default, so if you have old protocols disabled it's almost guaranteed that any traffic you see with older IE user agents is not real. (i do know there are ways to get older versions of IE working through a local tls proxy, i've done it myself for retrocomputing purposes)

although i'm not sure if edge in IE mode will use that user agent, i haven't played around with it much

you can block user agents that match `^Mozilla` but not `^Mozilla/[45]\.0 \(`

* note: this will block some web crawlers such as bingbot/gptbot/claudebot as well because they put the AppleWebKit/537.36 token in the wrong place (before the first parenthesis), but you should be blocking at least the latter two of those anyway
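to see what the two patterns above do together, here's a small python sketch. the function name and the sample user agent strings are my own examples (the crawler one is shaped like what gptbot is reported to send), so treat this as a way to test the idea before writing a real server rule.

```python
import re

# The two patterns from the rule above, unchanged.
STARTS_WITH_MOZILLA = re.compile(r"^Mozilla")
WELL_FORMED_PREFIX = re.compile(r"^Mozilla/[45]\.0 \(")


def should_block(user_agent: str) -> bool:
    """True if the agent claims to be Mozilla but lacks the usual prefix."""
    claims_mozilla = STARTS_WITH_MOZILLA.match(user_agent) is not None
    well_formed = WELL_FORMED_PREFIX.match(user_agent) is not None
    return claims_mozilla and not well_formed


# Illustrative strings, not taken from any real log.
browser = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
           "(KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36")
crawler = ("Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
           "GPTBot/1.0; +https://openai.com/gptbot)")

print(should_block(browser))       # normal browser prefix, not blocked
print(should_block(crawler))       # token before the first parenthesis, blocked
print(should_block("curl/8.5.0"))  # does not start with Mozilla, rule ignores it
```

note that the rule says nothing about agents that don't start with Mozilla at all, so tools like curl need their own rule if you want them gone.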