Wonderful time in Heidelberg w/ @jekely.biologists.social.ap.brid.gy + our HFSP team (Thomas Kiørboe & Alastair Simpson) discussing the origins of eukaryotes and their excitable behaviours! Can you feel the ⚡⚡⚡ in the room?? (funded by @uniheidelberg.bsky.social International Guest Professorship)

Professor at the Centre for Organismal Studies (COS), University of Heidelberg, Germany, and at the Living Systems Institute (LSI), University of Exeter, UK.

#neuroscience of marine larvae, #connectomics, #cilia, ciliary swimming, cell and nervous system #evolution, #GPCR, #neuropeptides, #Platynereis, #Trichoplax, #Nematostella, #Schistosoma, #coral #neuroscience #rstat #evolution #fedi22

Very interesting read about the AI hype in scientific research:

"Instead of identifying a problem and then trying to find a solution, we start by assuming that AI will be the solution and then looking for problems to solve."

https://www.understandingai.org/p/i-got-fooled-by-ai-for-science-hypeheres

💸 Germany sends €204.5M to Microsoft annually while European open-source projects need investment.

Our Account Manager's analysis shows how redirecting these funds could:

✔️End vendor lock-in cycles

✔️Strengthen data sovereignty

✔️Create local tech jobs

✔️Support FLOSS innovation

XWiki, CryptPad and other open-source solutions prove alternatives exist; they just need proper funding.

Read the full analysis here: https://xwiki.com/en/Blog/why-governments-should-invest-in-open-source/

From Elizabeth Marin at Zoology Dept., Cambridge University:

"Together with Greg Jefferis (MRC LMB, Cambridge), Wei-Chung Allen Lee (Harvard Medical School), and Meg Younger (Boston University), I have secured a £4.8M Wellcome Discovery Award to generate a mosquito brain connectome and investigate chemosensory circuits involved in human host-seeking."

"We are currently recruiting for two research assistant positions based in the Zoology department at Cambridge University. Please share this post with any likely candidates :)."

This is so exciting! The German National Library of Medicine @ZBMED just had its first open meeting on the plan for an open and independent PubMed safety net. Here's my write-up @PLOS on the meeting & how institutions & others can help: https://absolutelymaybe.plos.org/2025/05/14/germanys-plan-for-an-open-and-independent-pubmed-safety-net/

@SecurityWriter This has been my hypothesis for the last few years, but more on the cloud side.

Cloud’s fundamental problem is that compute requirements scale in human terms, maybe growing by 10-20% a year for a successful business. Compute and storage availability doubles every year or two.

This means that, roughly speaking, the dollar value of the cloud requirements for most companies halves every couple of years. For a lot of medium-sized companies, their entire cloud requirements could be met with a £50 Raspberry Pi, a couple of disks for redundancy, and a reliable Internet connection.
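The arithmetic behind that claim can be sketched in a few lines. This is only a rough illustration, assuming demand compounds at a fixed annual rate and that "availability doubles" means the price per unit of compute halves over the same period; the function name `relative_cost` and its parameters are made up for this example.

```python
def relative_cost(years, demand_growth, doubling_period):
    """Cost of a workload relative to today (1.0 = unchanged).

    Assumptions (not from the original post): demand compounds at
    `demand_growth` per year, and the price per unit of compute halves
    every `doubling_period` years.
    """
    demand = (1 + demand_growth) ** years
    price_per_unit = 0.5 ** (years / doubling_period)
    return demand * price_per_unit


# Sweep the ranges quoted in the post: 10-20% growth, doubling every 1-2 years.
for growth in (0.10, 0.20):
    for period in (1, 2):
        cost = relative_cost(2, growth, period)
        print(f"growth {growth:.0%}/yr, doubling every {period} yr: "
              f"cost after 2 years = {cost:.2f}x today's")
```

Under these assumptions the two-year cost lands somewhere between roughly 0.3x and 0.7x of today's bill, which is consistent with "halves every couple of years" as a rough figure.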

Most of the cloud growth was from bringing in new customers, not from existing customers growing.

Worse, the customers whose requirements do grow are starting to realise that they have such economies of scale that outsourcing doesn’t win them much: Microsoft or Amazon’s economies of scale don’t give them much bigger savings and those savings are eaten by profit.

They really need something where the compute requirement is so big that no one really wants to do it on prem. And something where the requirements grow each year.

AI training is perfect. You want infinite GPUs, for as short a time as possible. You don’t do it continuously (you may fine tune, but that’s less compute intensive), so buying the GPUs would involve having them sit idle most of the time. Renting, even with a significant markup, is cheaper. Especially when you factor in the infrastructure required to make thousands of GPUs usable together. And each model wants to be bigger than the last so needs more compute. Yay!

Coincidentally, the biggest AI boosters are the world’s second and third largest cloud providers.

My review of an article suggesting to pay reviewers extra:

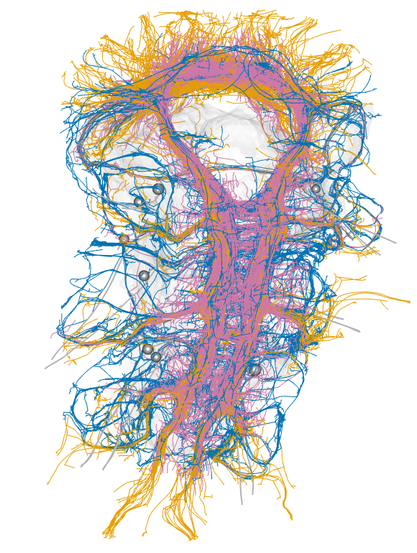

Arrived at the Volume Electron Microscopy Gordon Research Conference near Barcelona:

https://www.grc.org/volume-electron-microscopy-conference/2025/

I will speak about Volume EM and #connectomics in marine zooplankton.

Hundreds of thousands of computers won't be able to upgrade to Windows 11, but that shouldn't make them e-waste.

Kudos to the @kde team for this amazing initiative!

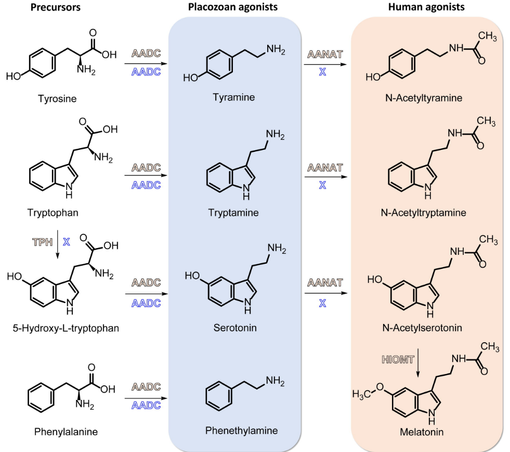

New insights into neurotransmitter evolution from a GPCR screen in Trichoplax, a neuron-less animal.

with Yanez-Guerra et al.

https://www.biorxiv.org/content/10.1101/2025.04.18.649542v1

Trichoplax has tryptamine, tyramine, and phenethylamine receptors that are homologous to human GPCRs.

However, Trichoplax GPCRs are activated by monoamines that lack the acetylation present in ligands such as melatonin in humans.

Trichoplax thus uses monoamines without the final step of the human biosynthesis pathway.

@albertcardona Thanks, nice. Here is an R implementation: https://acclab.github.io/dabestr/reference/dabest_plot.html #rstats

I am under a DDoS attack. Not my server, not my service. Me.

And like everything these days, it has to do with #AI.

This is going to be a thread because I'm annoyed and have much to say. It should be called something like "How I wasted a good part of the last 7 years having to react to a dubious technology instead of doing my job". Or perhaps "How a science disappeared". You can decide. /0

I love it when Adam gets angry.

“Dire wolves remain very extinct”

https://arutherford.substack.com/p/dire-wolves-remain-very-extinct

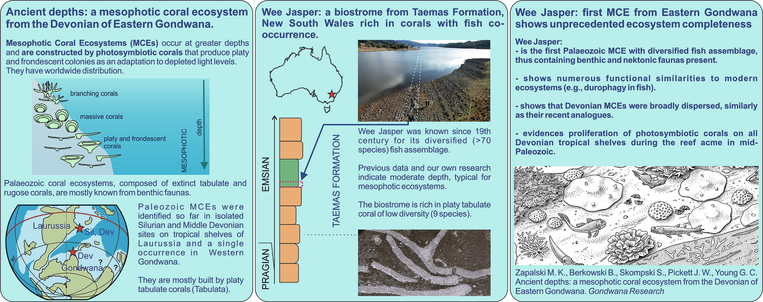

More evidence for the photosymbiosis of tabulate corals:

"as early as the Early Devonian, evidencing proliferation of photosymbiotic, habitat-forming tabulate corals during the reef acme in the middle Palaeozoic."

https://www.sciencedirect.com/science/article/pii/S1342937X25000887

Public service announcement: this is *not* a dire wolf.

It is a genetically modified grey wolf made to resemble an extinct species, so a bullshit company can raise more money for their bullshit projects.

#Deextinction is a scam on so many levels I don't even know where to begin. It will *not* help us save endangered species. Instead, we should spend our money on preserving their habitats *before* they go extinct.

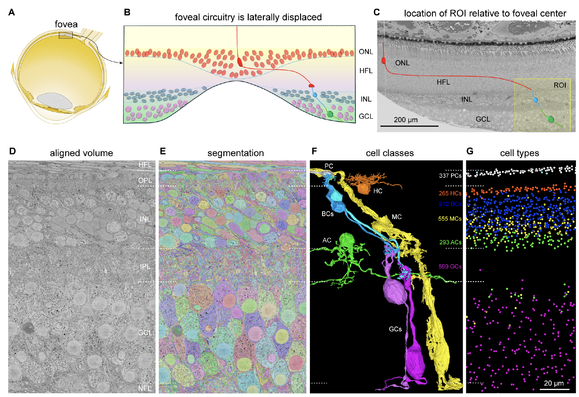

1/ We are excited to share our new manuscript. Here, we provide a nanoscale connectome of the human foveal retina. Our dataset represents the first connectome of any complete neural structure in the human nervous system.

Visual pathway origins: an electron microscopic volume for connectomic analysis of the human foveal retina https://www.biorxiv.org/content/10.1101/2025.04.05.647403v1?med=mas

We are looking to hire a Senior Scientist or #Postdoc to study brains, biophysics, and behaviour using #Drosophila as a model. Please spread the word!

The title of my email newsletter today is "Decimation": https://buttondown.com/carlzimmer/archive/fridays-elk-decimation/

#Bluesky now redirects links through "go.bsky.app".

This is a kludge similar to Twitter's "t.co" to pass on referer information (already available on the open web) from mobile apps, to make Bluesky look better in analytics reports.

Intercepting clicks is always problematic from a privacy/security perspective; in addition, it introduces a technical layer that can fall over (the redirection service).
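For readers unfamiliar with click redirection, here is a minimal sketch of the general pattern. It does not reproduce the real "go.bsky.app" or "t.co" link formats: the host `redirect.example`, the query parameter `u`, and the helper names `wrap` and `unwrap` are all hypothetical.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical redirect endpoint; not the actual Bluesky or Twitter service.
REDIRECT_BASE = "https://redirect.example/go"


def wrap(target_url):
    """Rewrite a link so the click passes through the redirect service."""
    return f"{REDIRECT_BASE}?{urlencode({'u': target_url})}"


def unwrap(link):
    """Recover the original target, or return the link unchanged if it is not wrapped."""
    parsed = urlparse(link)
    if f"{parsed.scheme}://{parsed.netloc}{parsed.path}" != REDIRECT_BASE:
        return link
    targets = parse_qs(parsed.query).get("u")
    return targets[0] if targets else link


wrapped = wrap("https://example.org/article?id=42")
print(wrapped)          # every click now reaches redirect.example first
print(unwrap(wrapped))  # the destination the reader actually wanted
```

The sketch makes both concerns concrete: the redirect host sees every click before the destination does, and if that host is unreachable the wrapped link stops working even though the target is fine.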