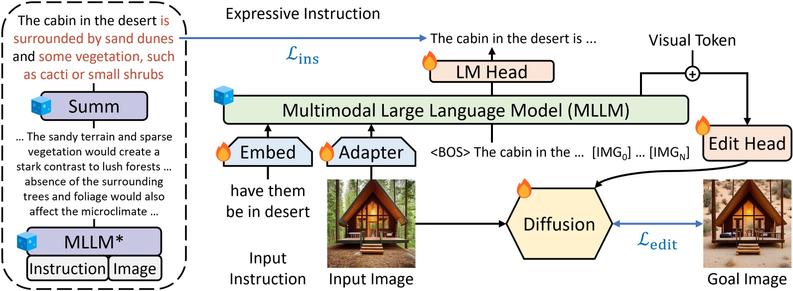

If you would like to learn more about how it works, check out the code repository for the ICLR'24 Spotlight paper "Guiding Instruction-based Image Editing via Multimodal Large Language Models" by Tsu-Jui Fu, Wenze Hu, Xianzhi Du, William Yang Wang, Yinfei Yang, and Zhe Gan.

https://github.com/apple/ml-mgie

#ICLR24 #ImageEditing #MLLMs #AIResearch

#ICLR24

Happy to share our paper:

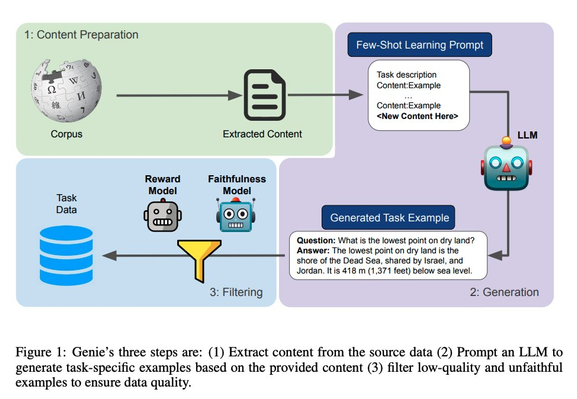

Genie🧞: Achieving Human Parity in Content-Grounded Datasets Generation

was accepted to #ICLR24

From your content, Genie creates content-grounded data of magical quality ✨, rivaling human-based datasets!

https://arxiv.org/abs/2401.14367

#data #NLP #nlproc #ML #machinelearning #llm #RAG
