Research in mechanistic interpretability and neuroscience often relies on interpreting internal representations to understand systems, or on manipulating representations to improve models. I gave a talk at the UniReps workshop at NeurIPS on a few challenges for this area. Summary thread: 1/12

#ai #ml #neuroscience #computationalneuroscience #interpretability #NeuralRepresentations #neurips2023


How to measure (dis)similarity between #NeuralRepresentations? This work by Harvey et al. (2023) (@ahwilliams lab) illuminates the relationships among #CanonicalCorrelationsAnalysis (#CCA), shape distances, #RepresentationalSimilarityAnalysis (#RSA), #CenteredKernelAlignment (#CKA), and #NormalizedBuresSimilarity (#NBS):
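For readers new to these measures, here is a minimal sketch of one of them, the linear variant of CKA, assuming NumPy and two activation matrices whose rows are the same n stimuli. The function name `linear_cka` is hypothetical and this is not code from Harvey et al.; it only illustrates why CKA ignores rotations and isotropic rescaling of a representation, a property it shares with the shape distances the paper discusses.

```python
import numpy as np

def linear_cka(X: np.ndarray, Y: np.ndarray) -> float:
    """Linear CKA between two representations of the same n stimuli.

    Hypothetical helper for illustration. X: (n, p1), Y: (n, p2);
    row i of X and row i of Y are responses to the same stimulus.
    """
    if X.shape[0] != Y.shape[0]:
        raise ValueError("X and Y must have the same number of rows (stimuli)")
    # Center each feature (column) across stimuli
    Xc = X - X.mean(axis=0, keepdims=True)
    Yc = Y - Y.mean(axis=0, keepdims=True)
    # Cross term: squared Frobenius norm of the cross-covariance Yc^T Xc
    cross = np.linalg.norm(Yc.T @ Xc, ord="fro") ** 2
    # Self terms normalize the score, making it invariant to isotropic scaling
    norm_x = np.linalg.norm(Xc.T @ Xc, ord="fro")
    norm_y = np.linalg.norm(Yc.T @ Yc, ord="fro")
    return float(cross / (norm_x * norm_y))

# Usage: a rotated and rescaled copy scores (numerically) 1.0
rng = np.random.default_rng(0)
X = rng.standard_normal((100, 20))
Q, _ = np.linalg.qr(rng.standard_normal((20, 20)))  # random orthogonal matrix
print(linear_cka(X, 3.0 * X @ Q))                    # close to 1.0
print(linear_cka(X, rng.standard_normal((100, 20)))) # much lower for unrelated data
```

The score always lies in [0, 1] and is symmetric in X and Y; unlike RSA, this linear form compares Gram matrices directly rather than correlating dissimilarity matrices.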

Very much looking forward to the upcoming #iBehave seminar with Carsen Stringer (@computingnature) on “Unsupervised pretraining of #NeuralRepresentations for #TaskLearning” 👌

⏰ October 06, 2023, at 12 pm

📍 online

🌏 https://ibehave.nrw/news-and-events/ibehave-seminar-series-talk-by-dr-carsen-stringer/

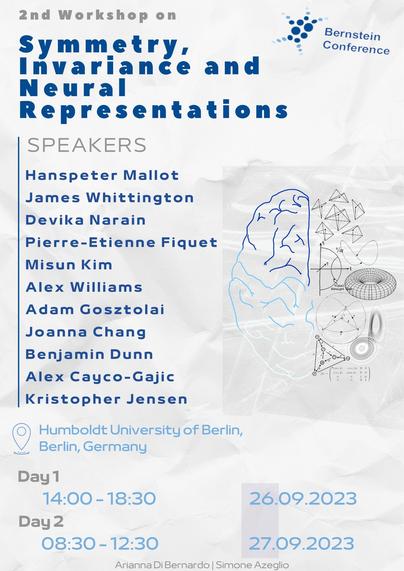

Looking forward to tomorrow’s 2nd workshop on #symmetry, invariance and #NeuralRepresentations at the #BernsteinConference: #GroupTheory, #manifolds, and #Euclidean vs #nonEuclidean #geometry #perception … I’m pretty excited 🤟😊

#CompNeuro #computationalneuroscience