Display Product and Price Book Entry Fields in the Same Flow Data Table

The Salesforce Flow Data Table component is a powerful screen element that allows users to view and interact with records in a structured, spreadsheet-like format within a Flow. It supports features like record selection, sorting, and filtering, making it ideal for building guided user experiences. For example, in a product selection use case, a sales rep can launch a Flow that displays a list of products retrieved from the Product2 or PriceBookEntry objects. Using the data table, the rep can easily compare options and select multiple products to add to an opportunity, all within a single, streamlined Flow screen.

Salesforce added the data table component to Flow following the success of Eric Smith’s open-source data table component published on UnofficialSF. The out-of-the-box component is still not as powerful as its UnofficialSF sibling.

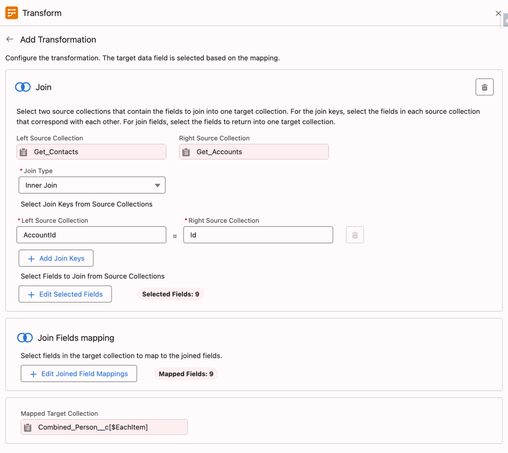

In this post, I will show you how I leveraged the inner join functionality of the Transform element to bring together Product2 and PriceBookEntry field values, which I then displayed in the UnofficialSF data table component.

The inner join functionality is powerful, but it falls short of its full potential because Flow Builder does not offer a way to create custom data types to hold the combined information.

I created a placeholder Apex-defined data type and used it on the output side of the Transform element. The UnofficialSF data table supports the display of Apex-defined collection data. Leveraging this functionality, I brought together the field values of both the Product and Price Book Entry objects so the user can make an informed product selection.

🚨 Use case 👇🏼 The user will select products and add them to the opportunity record. When making the selection, the user should be able to see product information and price book entry information from the selected price book on the same row: product name, code, family, description, and unit price.

Apex-Defined Data Types in Flow

Apex-Defined Data Types allow developers to create custom, structured objects in Apex that can be used as inputs and outputs within Flow. These types enable more complex data handling than standard Flow variables, supporting multiple fields, including nested data, within a single variable. For example, you might define an Apex class that bundles together a product’s name, price, discount, and inventory status, then use it in a Flow to display custom pricing logic or pass structured data between Flow and Apex actions. This approach enhances flexibility and scalability when building advanced automation.

The key to making an Apex-defined data type available to Flow is the @AuraEnabled annotation on the fields of the Apex class. Once you write an Apex class that defines the fields of the Apex-defined object and deploy it to production, you don’t need to do anything in Flow Builder to make this data type available. Wherever Flow Builder lets you select an Apex-defined resource, the new data type will be accessible.
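To make the pricing example above concrete, here is a minimal sketch of such a type. The class name ProductPricingInfo and its fields are hypothetical, chosen only to mirror that example. The @AuraEnabled properties are what Flow Builder exposes; the netPrice() helper is ordinary Apex that Flow cannot call directly, so treat it as something an Apex action might use.

```apex
/**
 * ProductPricingInfo - hypothetical Apex-defined data type for Flow.
 * Only the @AuraEnabled properties are visible in Flow Builder.
 */
public class ProductPricingInfo {
    @AuraEnabled public String productName { get; set; }
    @AuraEnabled public Decimal price { get; set; }
    @AuraEnabled public Decimal discountPercent { get; set; }
    @AuraEnabled public String inventoryStatus { get; set; }

    // Plain Apex helper (not exposed to Flow): price after the percentage discount
    public Decimal netPrice() {
        if (price == null) {
            return null;
        }
        Decimal pct = discountPercent;
        if (pct == null) {
            pct = 0;
        }
        return price - (price * pct / 100);
    }
}
```

Once a class like this is deployed, it shows up in Flow Builder wherever an Apex-defined variable can be created, with no further registration step.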

I decided to create an Apex-defined data type with multiple generic fields of different types that I can reuse in Flow Builder. The fields I created are:

- 4 strings

- 2 numbers

- 2 currency fields

- 1 boolean (checkbox)

Here is the simple (the name says complex, but it is simple) Apex code that does the trick:

```apex
/**
 * ComplexDataCollection - Apex-defined data type for Salesforce Flow
 */
public class ComplexDataCollection {
    @AuraEnabled public String string1 { get; set; }
    @AuraEnabled public String string2 { get; set; }
    @AuraEnabled public String string3 { get; set; }
    @AuraEnabled public String string4 { get; set; }
    @AuraEnabled public Decimal number1 { get; set; }
    @AuraEnabled public Decimal number2 { get; set; }
    @AuraEnabled public Decimal currency1 { get; set; }
    @AuraEnabled public Decimal currency2 { get; set; }
    @AuraEnabled public Boolean boolean1 { get; set; }
}
```

You will need a test class to deploy this code to production. That should be easy, especially with the help of AI, but let me know if you need me to post the test class.
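Since a test class is required for deployment, here is a minimal sketch of one. The class name ComplexDataCollectionTest and the sample values are my own; it assumes the data type is deployed as ComplexDataCollection and simply assigns and reads back every property.

```apex
/**
 * ComplexDataCollectionTest - hypothetical test class sketch for ComplexDataCollection.
 * Sets and reads every @AuraEnabled property so the class is exercised for deployment.
 */
@IsTest
private class ComplexDataCollectionTest {

    @IsTest
    static void propertiesHoldAssignedValues() {
        ComplexDataCollection row = new ComplexDataCollection();
        row.string1 = 'Widget';
        row.string2 = 'WID-001';
        row.string3 = 'Sample description';
        row.string4 = 'Hardware';
        row.number1 = 1;
        row.number2 = 2;
        row.currency1 = 19.99;
        row.currency2 = 5;
        row.boolean1 = true;

        System.assertEquals('Widget', row.string1);
        System.assertEquals('WID-001', row.string2);
        System.assertEquals('Sample description', row.string3);
        System.assertEquals('Hardware', row.string4);
        System.assert(row.number1 == 1);
        System.assert(row.number2 == 2);
        System.assert(row.currency1 == 19.99);
        System.assert(row.currency2 == 5);
        System.assertEquals(true, row.boolean1);
    }
}
```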

Transform and Join Product and Price Book Entry Field Values to Populate the Apex-Defined Data Type

Follow these steps to prepare your data for the data table component:

- Get all the Price Book Entries for one Price Book.

- Get all the Products in the org (limit the Get Records element to 2,000 records for good measure).

- Join the two collections in the transform element using the Product2 Id.

- Map the fields from source collections to the Apex-defined data type.
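If it helps to see the two Get Records elements as queries, the sketch below is a rough Apex/SOQL equivalent of the first two steps. The class and method names are hypothetical, and the flow itself needs no code for these steps; the sketch only makes the price book filter and the 2,000-record cap explicit.

```apex
/**
 * PriceBookDataSketch - hypothetical Apex equivalent of the two Get Records elements.
 */
public with sharing class PriceBookDataSketch {

    // Step 1: all price book entries for one price book
    public static List<PricebookEntry> getEntries(Id pricebookId) {
        return [
            SELECT Id, Product2Id, UnitPrice, IsActive
            FROM PricebookEntry
            WHERE Pricebook2Id = :pricebookId
        ];
    }

    // Step 2: products in the org, capped at 2,000 records
    public static List<Product2> getProducts() {
        return [
            SELECT Id, Name, ProductCode, Family, Description, IsActive
            FROM Product2
            LIMIT 2000
        ];
    }
}
```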

Here is more detail about the transform element configuration:

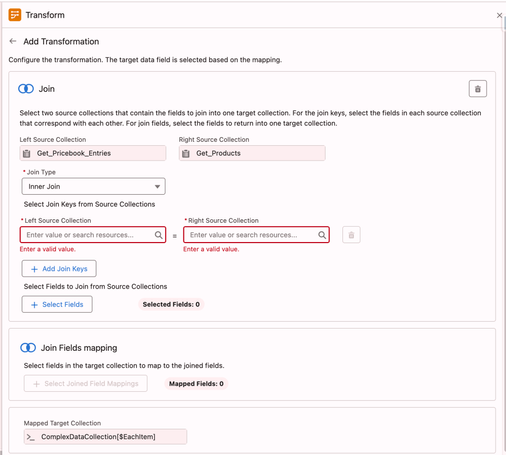

- Add the transform element.

- Add the price book entries collection from the get element on the left side.

- Add the product collection on the left side.

- Add an Apex-defined collection on the right side. In my case, this is called “ComplexDataCollection”. Search by name, and make sure you check the collection checkbox.

- Click on the first collection on the left side at the top collection level (not next to the individual fields). Connect it to the collection on the right side. You will see instructions for the inner join.

- Click on the second collection on the left side. You should see a join configuration screen. Configure your join. More instructions will follow.

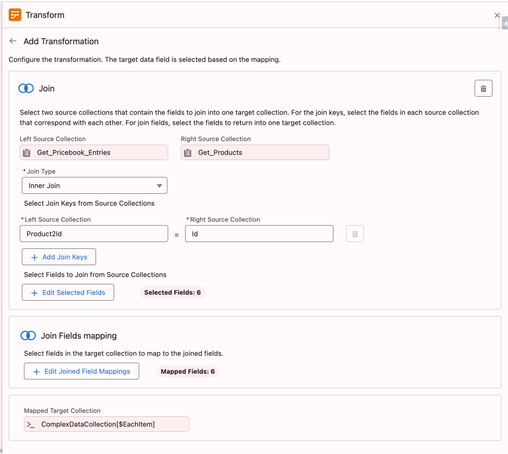

Configure your join:

- Left source and right source order does not matter for inner join. Select both collections on the left side.

- The join key will be Product2 on the PriceBookEntry and Id on the Product2.

- Select the fields you want in the output. For me, these are Name, ProductCode, UnitPrice, Family, and Description. I also added IsActive, which I did not end up using in the data table.

- Map these to your Apex-defined object fields: string1 through string4, currency1 and boolean1 (if you want isActive).

Your configured transform join should look like the screen image below.

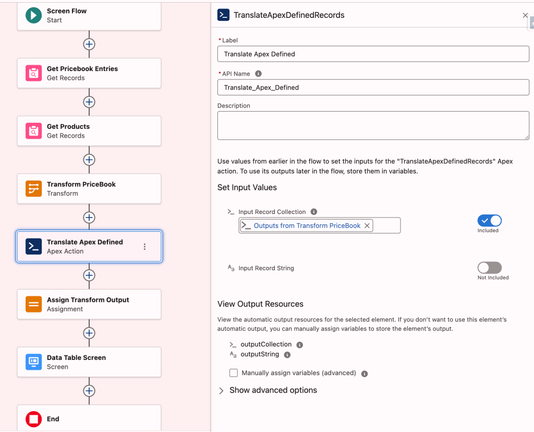
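The inner join configured above can be sketched in Apex as follows. This is only an illustration of the logic, not what Salesforce runs inside the Transform element; the class name ProductPriceJoinSketch is hypothetical, and I assume IsActive is taken from the product record (Product2 and PricebookEntry both have an IsActive field). The field mapping matches the one used in this post: string1 = Name, string2 = ProductCode, string3 = Description, string4 = Family, currency1 = UnitPrice.

```apex
/**
 * ProductPriceJoinSketch - hypothetical Apex equivalent of the Transform inner join.
 * Join key: PricebookEntry.Product2Id = Product2.Id.
 */
public with sharing class ProductPriceJoinSketch {

    public static List<ComplexDataCollection> innerJoin(List<PricebookEntry> entries, List<Product2> products) {
        // Index products by Id so each entry can look up its product without a nested loop
        Map<Id, Product2> productsById = new Map<Id, Product2>(products);

        List<ComplexDataCollection> rows = new List<ComplexDataCollection>();
        for (PricebookEntry pbe : entries) {
            Product2 prod = productsById.get(pbe.Product2Id);
            if (prod == null) {
                continue; // inner join: skip entries whose product is not in the list
            }
            ComplexDataCollection row = new ComplexDataCollection();
            row.string1 = prod.Name;
            row.string2 = prod.ProductCode;
            row.string3 = prod.Description;
            row.string4 = prod.Family;
            row.currency1 = pbe.UnitPrice;
            row.boolean1 = prod.IsActive; // assumption: product-level IsActive
            rows.add(row);
        }
        return rows;
    }
}
```

Products without an entry in the selected price book never appear either, because the loop only visits price book entries. That is why the order of the two sources does not matter for an inner join.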

Prepare the Apex-Defined Object Data for the Data Table

The UnofficialSF data table supports Apex-defined objects but requires the input to be serialized: it cannot process an Apex-defined collection variable directly and instead expects a JSON string. Eric Smith’s post on UnofficialSF covers this in more detail.

To achieve this, you can either use Apex or do the processing in Flow. I tried both, and both methods work; the Flow method requires looping.
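To see what the serialized input looks like, the anonymous Apex sketch below builds one sample ComplexDataCollection row (the values are made up) and serializes it with JSON.serialize, the same call the invocable action relies on. It assumes the ComplexDataCollection class from earlier is deployed; you could run it from Execute Anonymous in the Developer Console.

```apex
// Hypothetical anonymous Apex: build one sample row and serialize it
ComplexDataCollection row = new ComplexDataCollection();
row.string1 = 'Widget';     // Name
row.string2 = 'WID-001';    // Product code
row.string4 = 'Hardware';   // Family
row.currency1 = 25;         // Unit price

List<ComplexDataCollection> rows = new List<ComplexDataCollection>{ row };

// JSON.serialize writes a compact JSON array with one object per row.
// Properties that were never set (string3, number1, ...) are written as null.
String datatableInput = JSON.serialize(rows);
System.debug(datatableInput);

// The reverse direction: turn the JSON string back into the typed collection
List<ComplexDataCollection> roundTrip =
    (List<ComplexDataCollection>) JSON.deserialize(datatableInput, List<ComplexDataCollection>.class);
System.debug(roundTrip.size());
```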

Here is the Apex code for the invocable action that serializes the data:

```apex
/**
 *
 * Sample Apex Class Template to get data from a Flow,
 * Process the data, and Send data back to the Flow
 * This example translates an Apex-Defined Variable
 * between a Collection of Object Records and a Serialized String
 * Eric Smith - May 2020
 *
 **/
public with sharing class TranslateApexDefinedRecords {    // *** Apex Class Name ***

    // Attributes passed in from the Flow
    public class Requests {
        @InvocableVariable(label='Input Record String')
        public String inputString;

        @InvocableVariable(label='Input Record Collection')
        public List<ComplexDataCollection> inputCollection;    // *** Apex-Defined Class Descriptor Name ***
    }

    // Attributes passed back to the Flow
    public class Results {
        @InvocableVariable
        public String outputString;

        @InvocableVariable
        public List<ComplexDataCollection> outputCollection;    // *** Apex-Defined Class Descriptor Name ***
    }

    // Expose this Action to the Flow
    @InvocableMethod
    public static List<Results> translateADR(List<Requests> requestList) {

        // Prepare the responses to send back to the Flow
        List<Results> responseWrapper = new List<Results>();

        // Bulkify processing of multiple requests
        for (Requests req : requestList) {

            // One response per request so earlier results are not overwritten
            Results response = new Results();

            // Get Input Value(s)
            String inputString = req.inputString;
            List<ComplexDataCollection> tcdList = req.inputCollection;    // *** Apex-Defined Class Descriptor Name ***

            // BEGIN APEX ACTION PROCESSING LOGIC

            // Convert Serialized String to Record Collection
            List<ComplexDataCollection> collectionOutput = new List<ComplexDataCollection>();    // *** Apex-Defined Class Descriptor Name ***
            if (inputString != null && inputString.length() > 0) {
                collectionOutput = (List<ComplexDataCollection>) System.JSON.deserialize(
                    inputString, List<ComplexDataCollection>.class);    // *** Apex-Defined Class Descriptor Name ***
            }

            // Convert Record Collection to Serialized String
            String stringOutput = JSON.serialize(tcdList);

            // END APEX ACTION PROCESSING LOGIC

            // Set Output Values
            response.outputString = stringOutput;
            response.outputCollection = collectionOutput;
            responseWrapper.add(response);
        }

        // Return values back to the Flow
        return responseWrapper;
    }
}
```

Please note that this code references the name of the first Apex class (ComplexDataCollection). If you rename that class, you will need to replace the references here as well. Source: Eric Smith’s Blog.
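This action also needs a test class before it can be deployed to production. Here is a minimal sketch; it assumes the class names used in this post and calls the invocable method directly, once in each direction, the way Flow would. The test class name, helper method, and sample values are my own.

```apex
/**
 * TranslateApexDefinedRecordsTest - hypothetical test class sketch.
 * Calls the invocable method directly, once per translation direction.
 */
@IsTest
private class TranslateApexDefinedRecordsTest {

    // Builds one sample row; the values are made up
    private static ComplexDataCollection sampleRow() {
        ComplexDataCollection row = new ComplexDataCollection();
        row.string1 = 'Widget';
        row.string2 = 'WID-001';
        row.currency1 = 25;
        return row;
    }

    @IsTest
    static void serializesCollectionToString() {
        TranslateApexDefinedRecords.Requests req = new TranslateApexDefinedRecords.Requests();
        req.inputCollection = new List<ComplexDataCollection>{ sampleRow() };

        List<TranslateApexDefinedRecords.Results> results =
            TranslateApexDefinedRecords.translateADR(new List<TranslateApexDefinedRecords.Requests>{ req });

        System.assertEquals(1, results.size());
        System.assert(results[0].outputString.contains('"string2":"WID-001"'));
    }

    @IsTest
    static void deserializesStringToCollection() {
        TranslateApexDefinedRecords.Requests req = new TranslateApexDefinedRecords.Requests();
        req.inputString = JSON.serialize(new List<ComplexDataCollection>{ sampleRow() });

        List<TranslateApexDefinedRecords.Results> results =
            TranslateApexDefinedRecords.translateADR(new List<TranslateApexDefinedRecords.Requests>{ req });

        System.assertEquals(1, results[0].outputCollection.size());
        System.assertEquals('Widget', results[0].outputCollection[0].string1);
    }
}
```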

See how the action will be used and configured in the image below.

Data Table Configuration

Here is how you configure the data table for this data:

- Give your data table an API name.

- Scroll down to the Advanced section and check the checkbox titled Input data is Apex-Defined.

- For Datatable Record String, add the string variable to which you assigned the output of the translate action.

- For the required unique Key Field input, use the string field that holds the product code. For me, this is string2.

- For Column Fields, enter string1,string2,string3,string4,currency1.

- For Column Labels, enter 1:Name,2:Code,3:Description,4:Family,5:Price.

- For Column Types, enter 1:text,2:text,3:text,4:text,5:currency.

Once completed, you should see a similar output to this image below.

Conclusion

While this example illustrates how Apex can boost the capabilities of Flow, setting up this solution to use Apex-defined data types in Flow Builder and in the data table is cumbersome.

This was more of an experiment than a solution I will use frequently.

If you don’t want to write code, you can easily create a custom placeholder object to achieve a similar result with the out-of-the-box data table component.

I look forward to having this functionality built into Flow Builder in coming releases, and I hope the Salesforce product teams will prioritize it.
