Secure Python Password Generator, ver. 2

https://theblackchamber552383191.wordpress.com/2025/01/20/secure-python-password-generator-ver-2/

#python #symmetricCryptography


Key Transparency and the Right to be Forgotten

This post is the first in a new series covering some of the reasoning behind decisions made in my project to build end-to-end encryption for direct messages on the Fediverse.

(Collectively, Fedi-E2EE.)

Although the reasons for specific design decisions should be immediately obvious from reading the relevant specification (and if not, I consider that a bug in the specification), I believe writing about it less formally will improve the clarity behind the specific design decisions taken.

In the inaugural post for this series, I’d like to focus on how the Fedi-E2EE Public Key Directory specification aims to provide Key Transparency and an Authority-free PKI for the Fediverse without making GDPR compliance logically impossible.

Background

Key Transparency

For a clearer background, I recommend reading my blog post announcing the focused effort on a Public Key Directory, and then my update from August 2024.

If you’re in a hurry, I’ll be brief:

The goal of Key Transparency is to ensure everyone in a network sees the same view of who has which public key.

How it accomplishes this is a little complicated: It involves Merkle trees, digital signatures, and a higher-level protocol of distinct actions that affect the state machine.

If you’re thinking “blockchain”, you’re in the right ballpark, but we aren’t propping up a cryptocurrency. Instead, we’re using a centralized publisher model (per Public Key Directory instance) with decentralized verification.

Add a bit of cross-signing and replication, and you can stitch together a robust network of Public Key Directories that can be queried to obtain the currently-trusted list of public keys (or other auxiliary data) for a given Fediverse user. This can then be used to build application-layer protocols (e.g., end-to-end encryption with an identity key more robust than “trust on first use” due to the built-in audit trail to Merkle trees).

I’m handwaving a lot of details here. The Architecture and Specification documents are both worth a read if you’re curious to learn more.

Right To Be Forgotten

I am not a lawyer, nor do I play one on TV. This is not legal advice. Other standard disclaimers go here.

Okay, now that we’ve got that out of the way, Article 17 of the GDPR establishes a “Right to erasure” for Personal Data.

What this actually means in practice has not been consistently decided by the courts yet. However, a publicly readable, immutable ledger that maps public keys (which may be considered Personal Data) to Actor IDs (which include usernames, which are definitely Personal Data) goes against the grain when it comes to GDPR.

It remains an open question whether there is public interest in this data persisting in a read-only ledger ad infinitum, which could override the right to be forgotten. If there is, that’s for the courts to decide, not furry tech bloggers.

I know it can be tempting, especially as an American with no presence in the European Union, to shrug and say, “That seems like a them problem.” However, if other folks want to be able to use my designs within the EU, I would be remiss not to at least consider this potential pitfall and try to mitigate it in my designs.

So that’s exactly what I did.

Almost Contradictory

At first glance, the privacy goals of both Key Transparency and the GDPR’s Right To Erasure are at odds.

- One creates an immutable, append-only history.

- The other establishes a right for EU citizens’ history to be selectively censored, which means history has to be mutable.

However, they’re not totally impossible to reconcile.

An untested legal theory circulating around large American tech companies is that “crypto shredding” is legally equivalent to erasure.

Crypto shredding is the act of storing encrypted data, and then when given a legal takedown request from an EU citizen, deleting the key instead of the data.

This works from a purely technical perspective: If the data is encrypted, and you don’t know the key, to you it’s indistinguishable from someone who encrypted the same number of NUL bytes.

In fact, many security proofs for encryption schemes are satisfied by reaching this conclusion, so this isn’t a crazy notion.

Is Crypto Shredding Plausible?

In 2019, the European Parliamentary Research Service published a lengthy report titled Blockchain and the General Data Protection Regulation which states the following:

Before any examination of whether blockchain technology is capable of complying with Article 17 GDPR; it must be underscored that the precise meaning of the term ‘erasure’ remains unclear.

Article 17 GDPR does not define erasure, and the Regulation’s recitals are equally mum on how this term should be understood. It might be assumed that a common-sense understanding of this terminology ought to be embraced. According to the Oxford English Dictionary, erasure means ‘the removal or writing, recorded material, or data’ or ‘the removal of all traces of something: obliteration’.

From this perspective, erasure could be taken to equal destruction. It has, however, already been stressed that the destruction of data on blockchains, particularly these of a public and permissionless nature, is far from straightforward.

There are, however, indications that the obligation inherent to Article 17 GDPR does not have to be interpreted as requiring the outright destruction of data. In Google Spain, the delisting of information from research results was considered to amount to erasure. It is important to note, however, that in this case, this is all that was requested of Google by the claimant, who did not have control over the original data source (an online newspaper publication). Had the claimant wished to obtain the outright destruction of the relevant data it would have had to address the newspaper, not Google. This may be taken as an indication that what the GDPR requires is that the obligation resting on data controllers is to do all they can to secure a result as close as possible to the destruction of their data within the limits of [their] own factual possibilities.

Dr Michèle Finck, Blockchain and the General Data Protection Regulation, pp. 75-76

From this, we can kind of intuit that the courts aren’t pedantic: The cited Google Spain case was satisfied by merely delisting the content, not the erasure of the newspaper’s archives.

The report goes on to say:

As awareness regarding the tricky reconciliation between Article 17 GDPR and distributed ledgers grows, a number of technical alternatives to the outright destruction of data have been considered by various actors. An often-mentioned solution is that of the destruction of the private key, which would have the effect of making data encrypted with a public key inaccessible. This is indeed the solution that has been put forward by the French data protection authority CNIL in its guidance on blockchains and the GDPR. The CNIL has suggested that erasure could be obtained where the keyed hash function’s secret key is deleted together with information from other systems where it was stored for processing.

Dr Michèle Finck, Blockchain and the General Data Protection Regulation, pp. 76-77

That said, I cannot locate a specific court decision that affirms that crypto erasure is legally sufficient for complying with data erasure requests (nor any that affirm that it’s necessary).

I don’t have a crystal ball that can read the future on what government compliance will decide, nor am I an expert in legal matters.

Given the absence of a clear legal framework, I do think it’s totally reasonable to consider crypto-shredding equivalent to data erasure. Most experts would probably agree with this. But it’s also possible that the courts could rule totally stupidly on this one day.

Therefore, I must caution anyone that follows a similar path: Do not claim GDPR compliance just because you implement crypto-shredding in a distributed ledger. All you can realistically promise is that you’re not going out of your way to make compliance logically impossible. All we have to go by are untested legal hypotheses, and very little clarity (even if the technologists are near-unanimous on the topic!).

Towards A Solution

With all that in mind, let’s start with “crypto shredding” as the answer to the GDPR + transparency log conundrum.

This is only the start of our complications.

Protocol Risks Introduced by Crypto Shredding

Before the introduction of crypto shredding, the job of the Public Key Directory was simple:

- Receive a protocol message.

- Validate the protocol message.

- Commit the protocol message to a transparency log (in this case, Sigsum).

- Retrieve the protocol message whenever someone requests it to independently verify its inclusion.

- Miscellaneous other protocol things (cross-directory checkpoint commitment, replication, etc.).

Point being: there was very little that the directory could do to be dishonest. If they lied about the contents of a record, it would invalidate the inclusion proofs of every successive record in the ledger.

In order to make a given record crypto-shreddable without breaking the inclusion proofs for every record that follows, we need to commit to the ciphertext, not the plaintext. (And then, when a takedown request comes in, wipe the key.)
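To make the ciphertext-commitment idea concrete, here is a minimal sketch, not the Sigsum API. It assumes RFC 6962-style leaf and node hashing, and the `Directory` class with its `publish` and `shred` methods is a hypothetical name invented for illustration. Random bytes stand in for real ciphertext and keys. The point it shows: wiping a key leaves the Merkle root untouched, while changing a stored record does not.

```python
import hashlib
import secrets


def leaf_hash(data: bytes) -> bytes:
    # RFC 6962-style domain separation (assumed here): 0x00 prefix for leaves.
    return hashlib.sha256(b"\x00" + data).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    # 0x01 prefix for interior nodes.
    return hashlib.sha256(b"\x01" + left + right).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    n = len(leaves)
    if n == 0:
        return hashlib.sha256(b"").digest()
    if n == 1:
        return leaf_hash(leaves[0])
    # Split at the largest power of two strictly smaller than n.
    k = 1
    while k * 2 < n:
        k *= 2
    return node_hash(merkle_root(leaves[:k]), merkle_root(leaves[k:]))


class Directory:
    """Hypothetical directory: the log commits to ciphertext only."""

    def __init__(self) -> None:
        self.log: list[bytes] = []        # append-only ciphertexts
        self.keys: dict[int, bytes] = {}  # record index -> encryption key

    def publish(self, ciphertext: bytes, key: bytes) -> int:
        self.log.append(ciphertext)
        index = len(self.log) - 1
        self.keys[index] = key
        return index

    def shred(self, index: int) -> None:
        # Crypto-shredding: forget the key, leave the log untouched.
        self.keys.pop(index, None)

    def root(self) -> bytes:
        return merkle_root(self.log)


directory = Directory()
for _ in range(5):
    directory.publish(secrets.token_bytes(64), secrets.token_bytes(32))

root_before = directory.root()
directory.shred(2)
root_after = directory.root()  # identical to root_before
```

Had the log committed to plaintext instead, erasing record 2 would have changed its leaf and therefore every root computed after it.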

Now things get quite a bit more interesting.

Do you…

- …Distribute the encryption key alongside the ciphertext and let independent third parties decrypt it on demand?

…OR…

- Decrypt the ciphertext and serve plaintext through the public API, keeping the encryption key private so that it may be shredded later?

The first option seems simple, but runs into governance issues: How do you claim the data was crypto-shredded if countless individuals have a copy of the encryption key, and can therefore recover the plaintext from the ciphertext?

I don’t think that would stand up in court.

Clearly, your best option is the second one.

Okay, so how does an end user know that the ciphertext that was committed to the transparency ledger decrypts to the specific plaintext value served by the Public Key Directory? How do users know it’s not lying?

Quick aside: This question is also relevant if you went with the first option and used a non-committing AEAD mode for the actual encryption scheme.

In that scenario, a hostile nation state adversary could pressure a Public Key Directory to selectively give one decryption key to targeted users, and another to the rest of the Internet, in order to perform a targeted attack against citizens they’d rather didn’t have civil rights.

My entire goal with introducing key transparency to my end-to-end encryption proposal is to prevent these sorts of attacks, not enable them.

There are a lot of avenues we could explore here, but it’s always worth outlining the specific assumptions and security goals of any design before you start perusing the literature.

Assumptions

This is just a list of things we assume are true, and do not need to prove for the sake of our discussion here today. The first two are legal assumptions; the remainder are cryptographic.

Ask your lawyer if you want advice about the first two assumptions. Ask your cryptographer if you suspect any of the remaining assumptions are false.

- Crypto-shredding is a legally valid way to provide data erasure (as discussed above).

- EU courts will consider public keys to be Personal Data.

- The SHA-2 family of hash functions is secure (ignoring length-extension attacks, which won’t matter for how we’re using them).

- HMAC is a secure way to build a MAC algorithm out of a secure hash function.

- HKDF is a secure KDF if used correctly.

- AES is a secure 128-bit block cipher.

- Counter Mode (CTR) is a secure way to turn a block cipher into a stream cipher.

- AES-CTR + HMAC-SHA2 can be turned into a secure AEAD mode, if done carefully.

- Ed25519 is a digital signature algorithm that provides strong existential unforgeability under a chosen-message attack (SUF-CMA).

- Argon2id is a secure, memory-hard password KDF, when used with reasonable parameters. (You’ll see why in a moment.)

- Sigsum is a secure mechanism for building a transparency log.

This list isn’t exhaustive or formal, but should be sufficient for our purposes.

Security Goals

- The protocol messages stored in the Public Key Directory are accompanied by a Merkle tree proof of inclusion. This makes it append-only with an immutable history.

- The Public Key Directory cannot behave dishonestly about the decrypted plaintext for a given ciphertext without clients detecting the deception.

- Whatever strategy we use to solve this should make precomputation and brute-force attacks economically infeasible.

Can We Use Zero-Knowledge Proofs?

At first, this seems like an ideal situation for a succinct, non-interactive zero-knowledge proof.

After all, you’ve got some secret data that you hold, and you want to prove that a calculation is correct without revealing the data to the end user. This seems like the ideal setup for Schnorr’s identification protocol.

Unfortunately, the second assumption (public keys being considered Personal Data by courts, even though they’re derived from random secret keys) makes implementing a Zero-Knowledge Proof here very challenging.

First, if you look at Ed25519 carefully, you’ll realize that it’s just a digital signature algorithm built atop a Schnorr proof, which requires some sort of public key (even an ephemeral one) to be managed.

Worse, if you try to derive this value solely from public inputs (rather than creating a key management catch-22), the secret scalar your system arrives at will have been calculated from the user’s Personal Data–which only strengthens a court’s argument that the public key is therefore personally identifiable.

There may be a more exotic zero-knowledge proof scheme that might be appropriate for our needs, but I’m generally wary of fancy new cryptography.

Here are two rules I live by in this context:

- If I can’t get the algorithms out of the crypto module for whatever programming language I find myself working with, it may as well not even exist.

- Corollary: If libsodium bindings are available, that counts as “the crypto module” too.

- If a developer needs to reach for a generic Big Integer library (e.g., GMP) for any reason in the course of implementing a protocol, I do not trust their implementation.

Unfortunately, a lot of zero-knowledge proof designs fail one or both of these rules in practice.

(Sorry not sorry, homomorphic encryption enthusiasts! The real world hasn’t caught up to your ideas yet.)

What About Verifiable Random Functions (VRFs)?

It may be tempting to use VRFs (i.e., RFC 9381), but this runs into the same problem as zero-knowledge proofs: we’re assuming that an EU court would deem public keys Personal Data.

But even if that assumption turns out false, the lifecycle of a protocol message looks like this:

- User wants to perform an action (e.g., AddKey).

- Their client software creates a plaintext protocol message.

- Their client software generates a random 256-bit key for each potentially-sensitive attribute, so it can be shredded later.

- Their client software encrypts each attribute of the protocol message.

- The ciphertext and keys are sent to the Public Key Directory.

- For each attribute, the Public Key Directory decrypts the ciphertext with the key, verifies the contents, and then stores both. The ciphertext is used to generate a commitment on Sigsum (signed by the Public Key Directory’s keypair).

- The Public Key Directory serves plaintext to requestors, but does not disclose the key.

- In the future, the end user can demand a legal takedown, which just wipes the key.

Let’s assume I wanted to build a VRF out of Ed25519 (similar to what Signal does with VXEdDSA). Now I have a key management problem, which is pretty much what this project was meant to address in the first place.

VRFs are really cool, and more projects should use them, but I don’t think they will help me.

Soatok’s Proposed Solution

If you want to fully understand the nitty-gritty implementation details, I encourage you to read the current draft specification, plus the section describing the encryption algorithm, and finally the plaintext commitment algorithm.

Now that we’ve established all that, I can begin to describe my approach to solving this problem.

First, we will encrypt each attribute of a protocol message, as follows:

- For subkey derivation, we use HKDF-HMAC-SHA512.

- For encrypting the actual plaintext, we use AES-256-CTR.

- For message authentication, we use HMAC-SHA512.

- Additional associated data (AAD) is accepted and handled securely; i.e., we don’t use YOLO as a hash construction.

This prevents an Invisible Salamander attack from being possible.
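As a rough illustration of the key-splitting and authentication steps listed above, here is a minimal sketch using only the Python standard library. It is not the specification's wire format: the HKDF `info` labels, the little-endian length encoding, and the function names are all assumptions made for this example. The AES-256-CTR step is left out because the standard library has no AES, so random bytes stand in for the ciphertext.

```python
import hashlib
import hmac
import secrets
import struct


def hkdf_sha512(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) instantiated with HMAC-SHA512."""
    prk = hmac.new(salt, ikm, hashlib.sha512).digest()  # extract step
    okm, block, counter = b"", b"", 1
    while len(okm) < length:  # expand step
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha512).digest()
        okm += block
        counter += 1
    return okm[:length]


def split_keys(attribute_key: bytes, kdf_salt: bytes) -> tuple[bytes, bytes]:
    # Hypothetical info labels; the specification defines its own constants.
    enc_key = hkdf_sha512(attribute_key, kdf_salt, b"example-encryption-key")
    mac_key = hkdf_sha512(attribute_key, kdf_salt, b"example-authentication-key")
    return enc_key, mac_key


def length_prefixed(piece: bytes) -> bytes:
    # Assumed encoding: 64-bit little-endian length, then the bytes.
    return struct.pack("<Q", len(piece)) + piece


def auth_tag(mac_key: bytes, aad: bytes, ciphertext: bytes) -> bytes:
    # Length prefixes keep the AAD/ciphertext boundary unambiguous.
    message = length_prefixed(aad) + length_prefixed(ciphertext)
    return hmac.new(mac_key, message, hashlib.sha512).digest()


attribute_key = secrets.token_bytes(32)  # chosen by the client, per attribute
kdf_salt = secrets.token_bytes(32)       # random value stored in the ciphertext blob
enc_key, mac_key = split_keys(attribute_key, kdf_salt)

ciphertext = secrets.token_bytes(48)     # stand-in for AES-256-CTR output
tag = auth_tag(mac_key, b"attribute-name", ciphertext)

# Re-checking the tag under the same key succeeds; under another key it fails.
_, other_mac_key = split_keys(secrets.token_bytes(32), kdf_salt)
same_key_ok = hmac.compare_digest(tag, auth_tag(mac_key, b"attribute-name", ciphertext))
other_key_ok = hmac.compare_digest(tag, auth_tag(other_mac_key, b"attribute-name", ciphertext))
```

Unlike a polynomial MAC, making one HMAC-SHA512 tag verify under two different keys would require defeating the hash function itself, which is what makes this construction a reasonable fit when more than one key could be in play.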

This encryption is performed client-side, by each user, and the symmetric key for each attribute is shared with the Public Key Directory when publishing protocol messages.

If they later issue a legal request for erasure, they can be sure that the key used to encrypt the data they previously published isn’t secretly the same key used by every other user’s records.

They always know this because they selected the key, not the server. Furthermore, everyone can verify that the hash published to the Merkle tree matches a locally generated hash of the ciphertext they just emitted.

This provides a mechanism to keep everyone honest. If anything goes wrong, it will be detected.

Next, to prevent the server from being dishonest, we include a plaintext commitment hash, which is included as part of the AAD (alongside the attribute name).

(Implementing crypto-shredding is straightforward: simply wipe the encryption keys for the attributes of the records in scope for the request.)

If you’ve read this far, you’re probably wondering, “What exactly do you mean by plaintext commitment?”

Plaintext Commitments

The security of a plaintext commitment is attained by the Argon2id password hashing function.

By using the Argon2id KDF, you can make an effective trapdoor that is easy to calculate if you know the plaintext, but economically infeasible to brute-force attack if you do not.

However, you need to do a little more work to make it safe.

The details here matter a lot, so this section is unavoidably going to be a little dense.

Pass the Salt?

Argon2id expects both a password and a salt.

If you eschew the salt (i.e., zero it out), you open the door to precomputation attacks (see also: rainbow tables) that would greatly weaken the security of this plaintext commitment scheme.

You need a salt.

If you generate the salt randomly, this commitment property isn’t guaranteed by the algorithm. It would be difficult, but probably not impossible, to find two salts ($s_1$, $s_2$) such that $\mathrm{Argon2id}(m_1, s_1) = \mathrm{Argon2id}(m_2, s_2)$.

Deriving the salt from public inputs eliminates this flexibility.

By itself, this reintroduces the risk of making salts totally deterministic, which reintroduces the risk of precomputation attacks (which motivated the salt in the first place).

If you include the plaintext in this calculation, it could also create a crib that gives attackers a shortcut for bypassing the cost of password hashing.

Furthermore, any two encryption operations that act over the same plaintext would, without any additional design considerations, produce an identical value for the plaintext commitment.

Public Inputs for Salt Derivation

The initial proposal included the plaintext value for Argon2 salt derivation, and published the salt and Argon2 output next to each other.

Hacker News user comex pointed out a flaw with this technique, so I’ve since revised how salts are selected to make them independent of the plaintext.

The public inputs for the Argon2 salt are now:

- The version identifier prefix for the ciphertext blob.

- The 256-bit random value used as a KDF salt (also stored in the ciphertext blob).

- A recent Merkle tree root.

- The attribute name (prefixed by its length).

These values are all hashed together with SHA-512, and then truncated to 128 bits (the length required by libsodium for Argon2 salts).

This salt is not stored, but can deterministically be calculated from public information.

Crisis Averted?

This sure sounds like we’ve arrived at a solution, but let’s also consider another situation before we declare our job done.

High-traffic Public Key Directories may have multiple users push a protocol message with the same recent Merkle root.

This may happen if two or more users query the directory to obtain the latest Merkle root before either of them publish their updates.

Later, if both of these users issue a legal takedown, someone might observe that the recent-merkle-root is the same for two messages, but their commitments differ.

Is this enough leakage to distinguish plaintext records?

In my earlier design, we needed to truncate the salt and rely on understanding the birthday bound to reason about its security. This is no longer the case, since each salt is randomized by the same random value used in key derivation.

Choosing Other Parameters

As mentioned a second ago, we set the output length of the Argon2id KDF to 32 bytes (256 bits). We expect the security of this KDF to exceed $2^{128}$, which to most users might as well be infinity.

The other Argon2id parameters are a bit hand-wavey. Although the general recommendation for Argon2id is to use as much memory as possible, this code will inevitably run in some low-memory environments, so asking for several gigabytes isn’t reasonable.

For the first draft, I settled on 16 MiB of memory, 3 iterations, and a parallelism degree of 1 (for widespread platform support).

Plaintext Commitment Algorithm

With all that figured out, our plaintext commitment algorithm looks something like this:

- Calculate the SHA512 hash of:

- A domain separation constant

- The header prefix (stored in the ciphertext)

- The randomness used for key-splitting in encryption (stored in the ciphertext)

- Recent Merkle Root

- Attribute Name Length (64-bit unsigned integer)

- Attribute Name

- Truncate this hash to the rightmost 16 bytes (128 bits). This is the salt.

- Calculate Argon2id over the following inputs concatenated in this order, with an output length of 32 bytes (256 bits), using the salt from step 2:

- Recent Merkle Root Length (64-bit unsigned integer)

- Recent Merkle Root

- Attribute Name Length (64-bit unsigned integer)

- Attribute Name

- Plaintext Length (64-bit unsigned integer)

- Plaintext

The output (step 3) is included as the AAD in the attribute encryption step, so the authentication tag is calculated over both the randomness and the commitment.

To verify a commitment (which is extractable from the ciphertext), simply recalculate the commitment you expect (using the recent Merkle root specified by the record), and compare the two in constant-time.

If they match, then you know the plaintext you’re seeing is the correct value for the ciphertext value that was committed to the Merkle tree.
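The steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not the reference implementation: the domain separation constant, the little-endian length encoding, and the example header and attribute values are made up. Most importantly, Python's standard library has no Argon2id, so `slow_kdf` uses scrypt purely as a stand-in memory-hard function; a real implementation would call Argon2id (for example through libsodium bindings) with the parameters chosen earlier.

```python
import hashlib
import hmac
import secrets
import struct

DOMAIN_SEPARATOR = b"example-plaintext-commitment-v1"  # hypothetical constant


def u64(n: int) -> bytes:
    # Assumption: 64-bit unsigned lengths are encoded little-endian.
    return struct.pack("<Q", n)


def derive_salt(header: bytes, randomness: bytes, merkle_root: bytes,
                attribute: bytes) -> bytes:
    digest = hashlib.sha512(
        DOMAIN_SEPARATOR + header + randomness + merkle_root
        + u64(len(attribute)) + attribute
    ).digest()
    return digest[-16:]  # rightmost 16 bytes (128 bits)


def slow_kdf(password: bytes, salt: bytes) -> bytes:
    # STAND-IN ONLY: scrypt replaces Argon2id so the sketch runs on the stdlib.
    return hashlib.scrypt(password, salt=salt, n=2**14, r=8, p=1,
                          maxmem=64 * 1024 * 1024, dklen=32)


def commit(header: bytes, randomness: bytes, merkle_root: bytes,
           attribute: bytes, plaintext: bytes) -> bytes:
    salt = derive_salt(header, randomness, merkle_root, attribute)
    password = (
        u64(len(merkle_root)) + merkle_root
        + u64(len(attribute)) + attribute
        + u64(len(plaintext)) + plaintext
    )
    return slow_kdf(password, salt)


def verify(expected: bytes, header: bytes, randomness: bytes, merkle_root: bytes,
           attribute: bytes, plaintext: bytes) -> bool:
    recalculated = commit(header, randomness, merkle_root, attribute, plaintext)
    return hmac.compare_digest(expected, recalculated)  # constant-time compare


header = b"example-header-v1"           # hypothetical version prefix
randomness = secrets.token_bytes(32)    # same random value used for key-splitting
merkle_root = secrets.token_bytes(32)   # stand-in for a recent Merkle root
attribute = b"actor-id"                 # hypothetical attribute name
plaintext = b"https://example.social/users/alice"

commitment = commit(header, randomness, merkle_root, attribute, plaintext)
is_valid = verify(commitment, header, randomness, merkle_root, attribute, plaintext)
```

Because the salt depends on the per-message randomness, two records with the same plaintext and the same recent Merkle root still produce different commitments.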

If the encryption key is shredded in the future, an attacker without knowledge of the plaintext will have an enormous uphill battle recovering it from the KDF output (and the salt will prove to be somewhat useless as a crib).

Caveats and Limitations

Although this design does satisfy the specific criteria we’ve established, an attacker that already knows the correct plaintext can confirm that a specific record matches it via the plaintext commitment.

This cannot be avoided: If we are to publish a commitment of the plaintext, someone with the plaintext can always confirm the commitment after the fact.

Whether this matters at all to the courts is a question for which I cannot offer any insight.

Remember, we don’t even know if any of this is actually necessary, or if “moderation and platform safety” is a sufficient reason to sidestep the right to erasure.

If the courts ever clarify this adequately, we can simply publish the mapping of Actor IDs to public keys and auxiliary data without any crypto-shredding at all.

Trying to attack it from the other direction (download a crypto-shredded record and try to recover the plaintext without knowing it ahead of time) is the attack angle we’re interested in.

Herd Immunity for the Forgotten

Another interesting implication that might not be obvious: The more Fediverse servers and users publish to a single Public Key Directory, the greater the anonymity pool available to each of them.

Consider the case where a user has erased their previous Fediverse account and used the GDPR to also crypto-shred the Public Key Directory entries containing their old Actor ID.

To guess the correct plaintext, you must not only brute-force possible usernames, but also permute your guesses across all of the instances in scope.

The more instances there are, the higher the cost of the attack.
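A quick back-of-the-envelope calculation shows how this scales. Every number below is hypothetical, picked only to make the multiplication visible: the size of the attacker's username dictionary and the time per KDF evaluation are assumptions, not measurements.

```python
# All figures are hypothetical and exist only to illustrate the scaling.
CANDIDATE_USERNAMES = 10_000_000  # attacker's username dictionary
SECONDS_PER_GUESS = 0.05          # assumed cost of one Argon2id evaluation
SECONDS_PER_DAY = 86_400


def attack_cpu_days(instances: int) -> float:
    # Each guess is one (instance, username) pair, i.e. one candidate Actor ID.
    guesses = CANDIDATE_USERNAMES * instances
    return guesses * SECONDS_PER_GUESS / SECONDS_PER_DAY


for instances in (1, 100, 10_000):
    print(f"{instances:>6} instances: {attack_cpu_days(instances):,.1f} CPU-days per record")
```

The cost grows linearly with the number of instances, and because each record's salt includes its own random value, the work spent on one shredded record cannot be reused against another.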

Recap

I tasked myself with designing a Key Transparency solution that doesn’t make complying with Article 17 of the GDPR nigh-impossible. To that end, crypto-shredding seemed like the only viable way forward.

A serialized record containing ciphertext for each sensitive attribute would be committed to the Merkle tree. The directory would store the key locally and serve plaintext until a legal takedown was requested by the user who owns the data. Afterwards, the stored ciphertext committed to the Merkle tree is indistinguishable from random for any party that doesn’t already know the plaintext value.

I didn’t want to allow Public Key Directories to lie about the plaintext for a given ciphertext, given that they know the key and the requestor doesn’t.

After considering zero-knowledge proofs and finding them to not be a perfect fit, I settled on designing a plaintext commitment scheme based on the Argon2id password KDF. The KDF salts can be calculated from public inputs.

Altogether, this meets the requirements of enabling crypto-shredding while keeping the Public Key Directory honest. All known attacks for this design are prohibitively expensive for any terrestrial threat actors.

As an added bonus, I didn’t introduce anything fancy. You can build all of this with the cryptography available to your favorite programming language today.

Closing Thoughts

If you’ve made it this far without being horribly confused, you’ve successfully followed my thought process for developing message attribute shreddability in my Public Key Directory specification.

This is just one component of the overall design proposal, but one that I thought my readers would enjoy exploring in greater detail than the specification needed to capture.

(This post was updated on 2024-11-22 to replace the incorrect term “PII” with “personal data”. Apologies for the confusion!)

#Argon2 #crypto #cryptography #E2EE #encryption #FederatedPKI #fediverse #passwordHashing #symmetricCryptography

Ever since the Invisible Salamanders paper was published, there has been a quiet renaissance among my friends and colleagues in applied cryptography for studying systems that use Authenticated Encryption with Associated Data (AEAD) constructions, understanding what implicit assumptions these systems make about the guarantees of the AEAD mode they chose to build upon, and the consequences of those assumptions being false.

I’ve discussed Invisible Salamanders several times throughout this blog, from my criticisms of AES-GCM and XMPP + OMEMO to my vulnerability disclosures in Threema.

Five years after Invisible Salamanders, it’s become clear to me that many software developers do not fully appreciate the underlying problem discussed in the Invisible Salamanders paper, even when I share trivial proof-of-concept exploits.

Background

Fast AEAD constructions based on polynomial MACs, such as AES-GCM and ChaCha20-Poly1305, were designed to provide confidentiality and integrity for the plaintext data, and optionally integrity for some additional associated data, in systems where both parties already negotiated one shared symmetric key.

The integrity goals of the systems that adopted these AEAD constructions were often accompanied by performance goals–usually to prevent Denial of Service (DoS) attacks in networking protocols. Verification needed to be very fast and consume minimal resources.

In this sense, AEAD constructions were an incredible success. So successful, in fact, that most cryptographers urge application developers to use one of the fast AEAD modes as the default suggestion without looking deeper at the problem being solved. This is a good thing, because most developers will choose something stupid like ECB mode in the absence of guidance from cryptographers, and AEAD modes are much, much safer than any hand-rolled block cipher modes.

The problem is that one tiny little assumption: that both parties (sender, recipient) to a communication have agreed on exactly one symmetric key for use in the protocol.

Fast MACs Are Not Key-Committing

Cryptographers have concluded that AEAD constructions based on polynomial MACs–while great for performance and rejection of malformed packets without creating DoS risks–tend to make the same assumption. This is even true of misuse-resistant modes like AES-GCM-SIV and extended-nonce constructions like XSalsa20-Poly1305.

When discussing this implicit assumption of only one valid key in the systems that use these AEAD modes, we say that the modes are not key-committing. This terminology is based on what happens when this assumption is false.

Consequently, you can take a single, specially crafted ciphertext (with an authentication tag) and decrypt it under multiple different keys. The authentication tags will be valid for all keys, and the plaintext will be different.

What does this look like in practice?

Consider my GCM exploit, which was written to generate puzzle ciphertexts for the DEFCON Furs badge challenge a few years ago. How it works is conceptually simple (although the actual mechanics behind step 4 are a bit technical):

- Generate two keys.

There’s nothing special about these keys or their relationship to each other; they can be totally random. They just can’t be identical, or the exploit is kind of pointless.

- Encrypt some blocks of plaintext with key1.

- Encrypt some more blocks of plaintext with key2.

- Calculate a collision block from the ciphertext in the previous two steps, which is just a bit of polynomial arithmetic in GF(2^128).

- Return the ciphertext (steps 2, 3, 4) and authentication tag calculated over them (which will collide for both keys).

A system that decrypts the output of this exploit under key1 will see some plaintext, followed by some garbage, followed by 1 final block of garbage.

If the same system decrypts under key2, it will see some garbage, followed by some plaintext, followed by 1 final block of garbage.
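The collision step is easier to see in a toy setting. The sketch below is not the GCM exploit and not real Poly1305: it uses a simplified polynomial MAC over the prime field 2^130 - 5, with no clamping, no block encoding, and no tag truncation, and the names `toy_tag` and `collision_block` are invented for this example. In a real AEAD the `(r, s)` pair would be derived from the key and nonce. What it does show is why one extra block is enough to make a single tag verify under two unrelated keys.

```python
import secrets

P = 2**130 - 5  # prime borrowed from Poly1305; everything else here is a toy


def accumulate(r: int, blocks: list[int]) -> int:
    # Horner's rule: evaluates sum(block_i * r^(n - i + 1)) mod P.
    acc = 0
    for block in blocks:
        acc = (acc + block) * r % P
    return acc


def toy_tag(key: tuple[int, int], blocks: list[int]) -> int:
    r, s = key
    return (accumulate(r, blocks) + s) % P


def collision_block(key1: tuple[int, int], key2: tuple[int, int],
                    blocks: list[int]) -> int:
    """Solve for a final block x that makes both keys produce the same tag."""
    (r1, s1), (r2, s2) = key1, key2
    a1, a2 = accumulate(r1, blocks), accumulate(r2, blocks)
    # (a1 + x) * r1 + s1 == (a2 + x) * r2 + s2  (mod P), solved for x.
    numerator = (a2 * r2 + s2 - a1 * r1 - s1) % P
    return numerator * pow((r1 - r2) % P, -1, P) % P


def random_key() -> tuple[int, int]:
    return secrets.randbelow(P - 1) + 1, secrets.randbelow(P)


key1 = random_key()
key2 = random_key()
while key2[0] == key1[0]:  # the two r values must differ
    key2 = random_key()

# Blocks shared by both "decryptions" (plaintext under one key, garbage under the other).
shared_ciphertext = [secrets.randbelow(P) for _ in range(4)]
forged = shared_ciphertext + [collision_block(key1, key2, shared_ciphertext)]

tags_match = toy_tag(key1, forged) == toy_tag(key2, forged)
```

The attacker needs nothing beyond one modular inversion, which is why the extra block costs them a single block of garbage in each decryption and little else.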

For many file formats, this garbage isn’t really a problem. Additionally, a bit more precomputation allows you to choose garbage that will be more advantageous to ensuring both outputs are accepted as “valid” by the target system.

For example, choosing two keys and a targeted nonce may allow both the valid plaintext and garbage blocks to begin with a PDF file header.

If you’re familiar with the file polyglot work of Ange Albertini, you can use this to turn the Invisible Salamanders problem into an artform.

And this is just the simple attack!

The Invisible Salamanders paper outlined a more advanced variant (with a proof of concept) in Section 3.2, which doesn’t suffer from nearly as much garbage data as the simple attack.

As Bruce Schneier often says, “Attacks only get better, they never get worse.”

Why is it called Invisible Salamanders?

The proof-of-concept used in the paper involved sending one picture (of a salamander) over an end-to-end encrypted messaging app, but when the recipient flagged it as abusive, the moderator saw a different picture.

https://www.youtube.com/watch?v=3M1jIO-jLHI

Thus, the salamander was invisible to the moderators of the encrypted messaging app.

As for the choice of a “salamander”, I’ve been told by friends familiar with the research that it was inspired by the original name of the Signal Protocol being “Axolotl”.

But, like, who cares about these details besides me? It’s a cute and memorable name.

What are the consequences of violating the “one key” assumption?

That depends entirely on what your system does!

In Database Cryptography Fur the Rest of Us, I discussed the use of AEAD modes to prevent confused deputy attacks. This works great, but if you’re building an application that supports multi-tenancy, you suddenly have to care about this issue again.

An earlier design for OPAQUE, a password authenticated key exchange algorithm, was broken by a partitioning oracle attack due to building atop AEAD modes that are not key-committing. The same class of attack let an attacker recover passwords from Shadowsocks proxy servers with a complexity similar to a binary search algorithm.

These are two very different impacts from the same weakness, which I believe is a significant factor for why the Invisible Salamanders issue isn’t more widely understood.

Sometimes violating the “one key” assumption that went into fast AEAD modes based on Polynomial MACs completely destroys the security of your system.

Other times, it opens the door for a high-complexity but low-impact behavior that simply violates the principle of least astonishment but doesn’t buy the attacker anything useful.

They Just Don’t Get It

The Invisible Salamanders issue is relevant in any system that uses symmetric-key encryption where more than one key can be valid.

This includes, but is not limited to:

- Multi-tenant data warehouses

- Group messaging protocols

- It’s sometimes tempting to discount group messaging as a relevant consideration if your experience is “emulated groups atop 1-to-1 messaging”, but there are protocols that establish a Group Key (e.g., RFC 9420) and then use that for all group messages.

- Envelope encryption schemes with multiple wrapping keys

- Bearer tokens (such as JSON Web Tokens) in systems that utilize Key IDs

Systems can mitigate this issue by introducing an explicit key commitment scheme (based on a cryptographic hash rather than a polynomial MAC) or by using a committing cipher mode (such as AES + HMAC, if done carefully).

However, most of the time, this advice falls on deaf ears when a cryptography engineer who’s aware of the issue brings it up.

“Abuse reporting? We don’t have no stinking abuse reporting!”

The most common misunderstanding is, “We don’t have a report abuse feature, so this issue doesn’t affect us.”

This is because the Invisible Salamanders talk and paper focused on how it could be leveraged to defeat abuse reporting tools and bypass content moderation.

In my experience, many security teams would read the paper and conclude that it only impacts abuse reporting features and not potentially all systems that allow multiple symmetric keys in a given context.

Another Exploit Scenario

Imagine you’re building a Data Loss Prevention product that integrates with corporate file-sharing and collaboration software (e.g. ownCloud) for small and medium businesses.

One day, someone decides to ship an end-to-end encryption feature to the file-sharing software that uses AES-GCM to encrypt files, and then encrypts the keys to each recipient’s public key. This is basically the envelope encryption use-case above.

So, you dutifully update your integration to act as another “user”, whose public key must be included in all E2EE transfers, and which will block the download of any ciphertext that it cannot decrypt OR that contains sensitive information.

And this works, until an insider threat clever enough to abuse the Invisible Salamanders issue comes along.

In order for said insider threat (e.g., a senior business analyst) to leak sensitive data (e.g., anything that would be useful for illegal insider trading) to another person that shouldn’t have access to it (e.g., a store clerk that’s talking to the press), they just have to do this:

- Encrypt the data they want to exfiltrate using key1.

- Encrypt some innocuous data that won’t trigger your DLP product, using key2.

- Ensure that both messages encrypt to the same ciphertext and authentication tag.

- Give their recipient key1, give everyone else (including your DLP software) key2.

Bam! File leaked, and everyone’s none the wiser, until it’s too late. Let’s actually imagine what happens next:

A random store clerk has leaked sensitive data to the press that only a few analysts had access to.

The only communication between the analyst and the store clerk is a file that was shared to all employees, using the E2EE protocol. No emails or anything else were identified.

Your DLP product didn’t identify any other communications between these two, but somehow the store clerk has the data on their desktop.

A detailed forensics analysis may eventually figure out what happened, but by then, the damage is done and your product’s reputation is irrecoverably damaged.

All because the hypothetical E2EE protocol didn’t include a key-commitment mechanism, and nobody identified this deficit in their designs.

This isn’t to endorse DLP solutions at all, but rather, to highlight one of the many ways that the Invisible Salamanders issue can be used creatively by clever attackers.

Art: AJ

“Couldn’t you do the same with steganography?”

No, the attack is very different from stego.

Stego is about hiding a message in plain sight, so that only the person that knows where/how to look can find it.

The Invisible Salamanders attack lets you send one ciphertext through a network then selectively decrypt it to one of two plaintexts, depending on which key you reveal to each participant.

In the Invisible Salamanders paper and talk, they used this to send “abusive” messages to a recipient that the moderator would not see. Thus, invisible.

In one, the message is always emitted to anyone who knows how to find it. In the other, the attacker selects which you see, even if you have mechanisms to ensure you’re seeing the same ciphertext. It’s not a subtle difference.

Mitigation Techniques

There are multiple ways to mitigate the risk of Invisible Salamanders in a cryptosystem.

- Use HMAC, or (failing that) something built atop cryptographic hash functions, rather than a Polynomial MAC.

- Use an AEAD cipher designed with multi-recipient integrity as a security goal.

- Compute a non-invertible, one-way commitment of the encryption key.

A trivial mitigation looks like this:

```php
class SoatokExampleEncryptor
{
    const NEW_ENCRYPT_KEY = 'myProtocol$encryptKey';
    const NEW_COMMITMENT = 'myProtocol$commitment';

    public function __construct(#[SensitiveParameter] private string $key) {}

    /**
     * Let's assume we're starting with a simple AES-GCM wrapper
     */
    public function legacyEncrypt(string $plaintext, string $assocData = ''): string
    {
        $nonce = random_bytes(12);
        $tag = '';
        $ciphertext = openssl_encrypt(
            $plaintext,
            'aes-256-gcm',
            $this->key,
            OPENSSL_RAW_DATA,
            $nonce,
            $tag,
            $assocData
        );
        return $nonce . $ciphertext . $tag;
    }

    /**
     * An improved function looks something like this
     */
    public function newEncrypt(string $plaintext, string $assocData = ''): string
    {
        // Avoid birthday bound issues with 256-bits of randomness
        $longerNonce = random_bytes(32);
        // Derive a subkey and synthetic nonce
        $tmp = hash_hkdf('sha512', $this->key, 44, self::NEW_ENCRYPT_KEY . $longerNonce);
        $encKey = substr($tmp, 0, 32);
        $nonce = substr($tmp, 32);
        // New: Key commitment
        $commitment = hash_hkdf('sha512', $this->key, 32, self::NEW_COMMITMENT . $longerNonce);
        // Most of this is unchanged
        $tag = '';
        $ciphertext = openssl_encrypt(
            $plaintext,
            'aes-256-gcm',
            $encKey,
            OPENSSL_RAW_DATA,
            $nonce,
            $tag,
            $assocData
        );
        return $longerNonce . $commitment . $ciphertext . $tag;
    }
}
```

And then the decryption logic would recalculate the commitment, and compare it with the stored value, in constant-time.

It’s important that the commitment be stored with the ciphertext, rather than bundling it with the key.

(It may be worthwhile to also include the commitment in the associated data, to add a mechanism against downgrade attacks.)
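The decryption side described above can be sketched roughly as follows. This is a loose JavaScript (Node.js) port rather than the author's code: the function names `deriveParts`, `encrypt`, and `decrypt` are hypothetical, the labels simply mirror the PHP constants, and the layout (32-byte nonce, 32-byte commitment, ciphertext, 16-byte tag) is an assumption taken from the return value of `newEncrypt`.

```javascript
const crypto = require('crypto');

// Hypothetical domain-separation labels, mirroring the PHP constants.
const ENCRYPT_LABEL = Buffer.from('myProtocol$encryptKey');
const COMMIT_LABEL = Buffer.from('myProtocol$commitment');
const NO_SALT = Buffer.alloc(0);

// Derive the AES key, the 96-bit GCM nonce, and the 256-bit key commitment.
function deriveParts(key, longerNonce) {
  const okm = Buffer.from(crypto.hkdfSync(
    'sha512', key, NO_SALT, Buffer.concat([ENCRYPT_LABEL, longerNonce]), 44
  ));
  const commitment = Buffer.from(crypto.hkdfSync(
    'sha512', key, NO_SALT, Buffer.concat([COMMIT_LABEL, longerNonce]), 32
  ));
  return { encKey: okm.subarray(0, 32), nonce: okm.subarray(32), commitment };
}

function encrypt(key, plaintext, assocData = Buffer.alloc(0)) {
  const longerNonce = crypto.randomBytes(32);
  const { encKey, nonce, commitment } = deriveParts(key, longerNonce);
  const cipher = crypto.createCipheriv('aes-256-gcm', encKey, nonce);
  cipher.setAAD(assocData);
  const ciphertext = Buffer.concat([cipher.update(plaintext), cipher.final()]);
  return Buffer.concat([longerNonce, commitment, ciphertext, cipher.getAuthTag()]);
}

function decrypt(key, message, assocData = Buffer.alloc(0)) {
  if (message.length < 80) {
    throw new Error('Message too short');
  }
  const longerNonce = message.subarray(0, 32);
  const stored = message.subarray(32, 64);
  const ciphertext = message.subarray(64, message.length - 16);
  const tag = message.subarray(message.length - 16);
  const { encKey, nonce, commitment } = deriveParts(key, longerNonce);
  // Recalculate the commitment and compare it in constant time
  // before touching the ciphertext.
  if (!crypto.timingSafeEqual(stored, commitment)) {
    throw new Error('Key commitment mismatch');
  }
  const decipher = crypto.createDecipheriv('aes-256-gcm', encKey, nonce);
  decipher.setAuthTag(tag);
  decipher.setAAD(assocData);
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]);
}
```

With this shape, a message encrypted under one key is rejected under any other key at the commitment check, before GCM's polynomial MAC is ever consulted.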

The Lesson to Learn

If you’re building a network protocol that uses AEAD to encrypt data over an insecure network (e.g., WireGuard), keep up the good work.

If you’re doing anything more involved than that, at the application layer, pause for a moment and consider whether your system will ever need multiple valid symmetric keys at once.

And, if the answer is “yes”, then you should always explicitly add a key-commitment mechanism to your system design.

(Hire a cryptographer if you’re not sure how to proceed.)

In my opinion, hemming and hawing over whether there’s a significant impact to the Invisible Salamanders issue is a worse use of your time than just solving it directly.

Eventually, I expect a new generation of AEAD modes will be standardized that explicitly provide key-commitment.

When these new designs are standardized, widely supported, and sufficiently trusted by experts, feel free to update my advice to “prefer using those modes” instead.

Header art: Harubaki, CMYKat, and a photo by Brian Gratwicke. Poorly photoshopped by myself.

https://soatok.blog/2024/09/10/invisible-salamanders-are-not-what-you-think/

#AEAD #AESGCM #InvisibleSalamanders #randomKeyRobustness #symmetricCryptography

Federated Key Transparency Project Update

Earlier this year, I wrote about a planned effort to design a federated Key Transparency proposal.

The end goal for this work was constrained to building end-to-end encryption into a new type of Direct Message on the Fediverse, with other protocols and services being a stretch goal rather than its primary purpose.

The ideal situation is to enable developers to write code that looks as simple as this:

```javascript
async function initialize(message, recipient) {
  const bundle = await fediverse.getSignedPreKeyBundle(recipient);
  // This also checks the inclusion proof and witness cosigs:
  const pubKey = await directory.fetch(recipient, bundle.keyId);
  if (!await pubKey.verify(bundle)) {
    throw new Error('Invalid signature or bundle');
  }
  const session = await e2ee.beginSession(bundle);
  return session.send(message);
}

initialize("OwO what's this?", "soatok@furry.engineer")
  .then(async (session) => { /* ... */ });
```

And then have secure end-to-end encryption such that only a trusted public key for the intended recipient can decrypt.

Work on the specification for the Public Key Directory component has recently started. A few things have changed since my last blog post on the topic. I’ve also gotten a lot of similar questions that wouldn’t be appropriate to try to answer within the specification itself.

Original art: CMYKat, poorly edited by myself

The Big Picture

This section is written mostly for anyone who hasn’t paid attention to my other writing on this project.

This is how I believe this project will develop in the immediate future.

- Public Key Directory (PKD)

- Specification (WIP)

- Reference Implementation (Not Started)

- Client-Side SDKs (Not Started)

- Go

- Ruby

- PHP

- TypeScript

- End-to-End Encryption for the Fediverse (FediE2EE)

- Specification (WIP)

- Client-Side Secret Key Management

- Federated Public Key Infrastructure (See: PKD)

- Asynchronous Forward-Secure Ratcheting Protocol + Group Key Agreement

- Symmetric-Key Authenticated Encryption

- Reference Implementations (Not Started)

- Go

- Ruby

- PHP

- TypeScript

- Specification (WIP)

- Fediverse Instance Patches to Support E2EE

- Mastodon

- ?????

- Client-Side Software

- ?????

- PKD Extensions

- age v1 public keys

Once the PKD is complete, there’s nothing stopping other people from defining their own PKD extensions and building on top of our design to add Key Transparency to their own protocols.

My focus, once we have a solid specification and reference implementation, is going to shift towards building FediE2EE.

I will not, however, be working on client-side software unless no one else expresses interest.

The reason for my tentative recusal is simple: I absolutely suck at user interface design, and you’ll probably hate whatever I can cobble together. I am many things, but an artist is not one of them.

You don’t want me designing UIs. Art: CMYKat

To that end, my final deliverable in this project will be open source libraries (and accompanying guidance for using said libraries) that user experience experts can glue into their own clients.

That said, the only client-side software that should exist are browser extensions, desktop clients, and mobile apps.

I strongly discourage anyone from trying to deploy any code that touches secret keys to a traditional web application, or JavaScript running inside of a WebView.

I’m ambivalent on Electron. It’s better than redownloading the code from a server and running it blindly every page load, but it’s not highly regarded by security professionals.

Decisions Made

The most important topic to cover is design decisions I’ve made with my specification that will shape the evolution of this project.

Account Recovery

The current draft of the specification includes two Protocol Message types, BurnDown and Fireproof, which warrant further examination.

BurnDown is simple in concept: It revokes all of a particular user’s public keys and auxiliary data records. If you have no currently-trusted public keys, you are permitted to push a self-signed AddKey message.

A not-so-subtle detail of BurnDown that everyone should pay attention to is that the instance admin can issue them on behalf of other users hosted on that server.

Art: CMYKat

If you aren’t comfortable with your admin being able to issue a BurnDown at any time, that’s where Fireproof comes in: It allows you to opt out of this capability entirely.

Fireproof is a double-edged sword. It protects you from malicious admins, but it prevents you from ever recovering your account if you lose access to all of your secret keys.

The most important decision I made here is: Fireproof is an opt-in protection, which (as of the current draft) has no “undo”. (I’m considering allowing an “undo” if it makes sense to ever do so. Tell me what you think!)
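The interplay between these rules can be sketched as a toy state machine. Everything here is hypothetical: the class and method names are made up for illustration, signature verification is reduced to comparing key identifiers, and the rule that a non-self-signed AddKey must be signed by an already-trusted key is an assumption rather than something stated above. It is not the specification's message format.

```javascript
// Toy model of the BurnDown / Fireproof rules; not the real protocol.
class ToyDirectory {
  constructor() {
    // actorId -> { keys: Set of key identifiers, fireproof: boolean }
    this.actors = new Map();
  }

  actor(id) {
    if (!this.actors.has(id)) {
      this.actors.set(id, { keys: new Set(), fireproof: false });
    }
    return this.actors.get(id);
  }

  // AddKey: self-signed is only permitted when no key is currently trusted.
  // Otherwise (assumption) an already-trusted key must sign the new one.
  addKey(id, newKey, signedBy) {
    const a = this.actor(id);
    const allowed = a.keys.size === 0 ? signedBy === newKey : a.keys.has(signedBy);
    if (!allowed) {
      throw new Error('AddKey rejected');
    }
    a.keys.add(newKey);
  }

  // Fireproof: opt out of BurnDown. There is no "undo" in this model.
  fireproof(id) {
    this.actor(id).fireproof = true;
  }

  // BurnDown issued by the instance admin on behalf of a user:
  // revokes every key unless the user has opted out.
  burnDown(id) {
    const a = this.actor(id);
    if (a.fireproof) {
      throw new Error('BurnDown rejected: actor is Fireproof');
    }
    a.keys.clear();
  }
}
```

In this model, a user who loses every secret key can be rescued by an admin-issued `burnDown` followed by a self-signed `addKey`, but only if they never called `fireproof`.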

It’s often said that security at the expense of usability comes at the expense of security. Account recovery mechanisms are, necessarily, always some kind of a backdoor.

Conversely, defaults matter to security. Allowing BurnDown messages to be issued by default, from a specification perspective, implies most users will not issue a Fireproof message. (Client software may counteract this by prompting users with a choice when they first enroll, without a default setting, but I digress.)

I believe this choice is the best of all possible options, but you’re certainly welcome to disagree. It’s important to me that I be very loudly transparent about this decision.

No ECDSA Support

I had floated the idea of supporting NIST P-384 in my initial blog post.

Ultimately, there is no real incentive to do so, considering Ed25519 is now in FIPS 186-5 (which has been a standard for 18 months now).

And since we’re already using Ed25519, that satisfies any hypothetical FIPS use-case, should any governments choose to use my design for anything.

Thus, there will be no NIST P-384 support in the Public Key Directory project.

Art: AJ

Right To Be Forgotten

Key Transparency involves creating a global, immutable history. The Right To Be Forgotten enshrined in the EU’s GDPR law is fundamentally incompatible with the security goals of key transparency.

What this means is that, if I just shrugged and plugged Actor IDs and Public Keys into a hash function and committed that hash to a Merkle tree, and then, years later, a malicious troll demanded their data be removed in accordance with the GDPR, it would immediately become a catch-22.

Do you comply with the asserted right and break the history and provable security of your transparency ledger? Or do you risk legal peril for noncompliance?

When I first noodled over this, a few people said, “But you’re not in the EU. Why do you care?”

And, like, some of the people that will want to use this design one day are in the EU. Some of them may want to run their own Public Key Directory instances. I want them to have a good time with it. Is that so strange?

There is a way to get both properties without sacrificing the universal consistency of a single Merkle tree, but it relies on untested legal theory.

Art: MarleyTanuki

In short, what you need to do is:

- Client-side: Encrypt the sensitive fields, then send the ciphertext and the ephemeral key to the Directory.

- The Directory will commit to the ciphertext, not the plaintext, and hold onto the keys in order to decrypt records on-the-fly.

- When a data erasure request comes in by an EU citizen asserting their right to be forgotten, erase the key to render the data irrecoverable.

This constitutes a forceful BurnDown with amnesia.

Does This Introduce Any Specific Risks?

This works in principle, but a couple of things need to hold true in order to maintain the integrity of the transparency log.

- You need to use a committing authenticated encryption mode.

Without this property, it’s possible to swap one key for another (rather than simply erasing it) and get a valid plaintext for the (ciphertext, tag) committed in the ledger.

This protects the Directory from a malicious user that later gets privileged access and manipulates stored keys.

- You need a plaintext commitment that is independent of the key. In addition to key-independence, it needs to be difficult to brute force, and you can’t have additional randomness (i.e., salts) added that could be changed after-the-fact to produce a “valid” commitment for another plaintext.

This protects users from a Directory that lies about which plaintext a particular ciphertext decrypts to.

This is currently specified as follows:

- Encryption is AES-256-CTR then HMAC-SHA512, Encrypt-then-MAC.

- The authentication tag covers a random value used for subkey derivation, as well as a Plaintext Commitment (Q).

- The Plaintext Commitment (Q) is derived from Argon2id and HMAC of the plaintext. There are some subtle details with how the Argon2id salt is derived, and why specific parameters were chosen the way they are, but this is covered in the specification document.
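As a rough illustration of how the encrypt, commit, and erase steps fit together, here is a minimal sketch in JavaScript (Node.js). It is not the specification's construction: the names `seal`, `open`, and `ToyLedger` are hypothetical, the HKDF label and the message layout are assumptions, a flat list of SHA-256 hashes stands in for the Merkle tree, and the Argon2id-based Plaintext Commitment (Q) is omitted entirely.

```javascript
const crypto = require('crypto');

// Derive per-record subkeys from the record key and a random value.
// The info label is a hypothetical placeholder.
function subkeys(recordKey, random) {
  const okm = Buffer.from(
    crypto.hkdfSync('sha512', recordKey, random, 'toy-pkd-subkeys', 80)
  );
  return {
    encKey: okm.subarray(0, 32),
    iv: okm.subarray(32, 48),
    macKey: okm.subarray(48, 80),
  };
}

// AES-256-CTR then HMAC-SHA512 (Encrypt-then-MAC). The tag covers the
// random value used for subkey derivation as well as the ciphertext.
function seal(recordKey, plaintext) {
  const random = crypto.randomBytes(32);
  const { encKey, iv, macKey } = subkeys(recordKey, random);
  const cipher = crypto.createCipheriv('aes-256-ctr', encKey, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext), cipher.final()]);
  const tag = crypto.createHmac('sha512', macKey)
    .update(random).update(ciphertext).digest();
  return Buffer.concat([random, ciphertext, tag]);
}

function open(recordKey, sealed) {
  if (sealed.length < 96) {
    throw new Error('Record too short');
  }
  const random = sealed.subarray(0, 32);
  const ciphertext = sealed.subarray(32, sealed.length - 64);
  const tag = sealed.subarray(sealed.length - 64);
  const { encKey, iv, macKey } = subkeys(recordKey, random);
  const expected = crypto.createHmac('sha512', macKey)
    .update(random).update(ciphertext).digest();
  if (!crypto.timingSafeEqual(tag, expected)) {
    throw new Error('Invalid authentication tag');
  }
  const decipher = crypto.createDecipheriv('aes-256-ctr', encKey, iv);
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]);
}

class ToyLedger {
  constructor() {
    this.leaves = [];         // append-only commitments to ciphertexts
    this.records = new Map(); // leaf -> { sealed, recordKey }, stored off-ledger
  }
  append(sealed, recordKey) {
    const leaf = crypto.createHash('sha256').update(sealed).digest('hex');
    this.leaves.push(leaf);
    this.records.set(leaf, { sealed, recordKey });
    return leaf;
  }
  read(leaf) {
    const { sealed, recordKey } = this.records.get(leaf);
    return recordKey === null ? null : open(recordKey, sealed);
  }
  // Crypto-shredding: forget the key, leave the committed history untouched.
  erase(leaf) {
    this.records.get(leaf).recordKey = null;
  }
}
```

The point of the sketch is the last method: erasing a key makes the record unreadable without changing any leaf that third parties have already verified.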

I had considered using Zero Knowledge Proofs here, but the current HMAC + Argon2 approach solves the problem securely without needing to study ZKDocs (and its supporting material) for hours.

Does This Give Us Compliance?

If we assume that “crypto shredding” is a valid technique for complying with data erasure demands, this lets us honor those requests while ensuring independent third parties can maintain a consistent view of the state of the transparency log.

It is worth repeating: This is not based on a tested legal theory. It is not legal advice. It is a best effort, good faith attempt to engineer a solution that would adhere to the “spirit of the law” as interpreted by an American furry with no academic or legal credentials from any country.

That being said, page 75 of this report about distributed ledgers and GDPR implies it’s not an entirely unfounded hypothesis.

Frequently Asked Questions

I’ve been asked a lot of similar questions since I started this project. This is as good a place as any to answer some of them.

Have you talked with ____?

Short answer: No, I haven’t.

Longer answer: My goal is simply to build a specification, then an implementation, that allows end-to-end encryption on the Fediverse.

No part of that sentence implies getting anyone else’s permission, or compromising on my security decisions in order to meet a competing concern.

For example, there’s always pressure from the open source community to support RSA keys, or to interoperate with other software (e.g., Matrix).

Those are non-goals of mine.

Should the ActivityPub authors or Mastodon developers decide differently from me, I wouldn’t want to sign off on their protocol design just because it appeases someone else.

I also don’t have any sort of requirement that what I specify and build becomes “standardized” in any meaningful way.

So, no, I haven’t talked with any of them yet. I also don’t plan to until the specifications and reference implementations are closer to maturity.

And even then, the message I have in mind for when that time comes looks something like this:

Hiya,

I’m building my own end-to-end encryption design for the Fediverse. Here’s the specification, here’s a reference implementation. (Links go here.)

[If applicable: I see you accepted a grant to build something similar.]

Please feel free to reuse whatever you deem useful (if anything) of my work in your own designs. I’m not interested in changing mine.

If you’d like to just adopt what I’ve already built, that’s fine too.

Soatok

I don’t want a deep involvement in anyone else’s political or social mess. I don’t want any of their grant money either, for that matter.

I just want to make security and privacy possible, to help queer people decide when, where, and how they selectively reveal themselves to others.

That said, if the W3C grant recipients want to look at the work I’m doing, they can consider it licensed under public domain, ISC, CC0, WTFPL, or whatever license is easiest for their lawyers to digest. I literally do not give a shit about intellectual property with this project. Go wild.

What if no one steps up to build client software?

Then, as a last resort, I will build something myself. Most likely, a browser extension.

It will probably be ugly, but lightweight, as I am deathly allergic to React Native, NextJS, and other front-end development frameworks.

How can I contribute?

The GitHub repository for the Public Key Directory spec is located here, if you’d like to read and/or suggest improvements to the specification.

As mentioned in my previous blog post on this topic, there is a Signal group for meta-discussion. If you are interested in writing code, that would be the best place to hang out.

What about money? Although my Ko-Fi isn’t difficult to locate, nor hard to guess, I’m not soliciting any financial contributions for this project. It isn’t costing me anything to design or build, presently.

If you represent a company that focuses on cryptography development or software assurance consulting, I may be interested in talking at some point about getting the designs reviewed and implementations audited by professionals. However, we’re a long way from that right now.

Do you have a timeline in mind?

Somewhat, yeah.

I’d like to have version 0.1 of the specification tagged by the end of September 2024.

If I have the time to stick to that timeline, I intend to start working on the reference implementation and client SDKs in a few languages. This is when software developers’ contributions will begin to be the most welcomed.

I can’t really project a timeline beyond that, today.

In addition to building a reference implementation, I would like to pursue formal verification for my protocol design. This allows us to be confident in the correctness and security of the protocol as specified. I cannot provide even a rough estimate for how long that will take to complete.

Once this Public Key Directory project is in a good place, however, my focus will be shifting back towards specifying end-to-end encryption for the Fediverse. Because that’s why I’m doing all this in the first place.

Art: AJ

Four years ago, I wrote a (surprisingly popular) blog post about the notion of wear-out for symmetric encryption schemes.

Two years ago, I wrote a thing about extending the nonce used by AES-GCM without introducing foot-guns. This was very recently referenced in one of Filippo Valsorda’s Cryptography Dispatches, which referenced alternative designs in the same vein.

As I was reading Filippo’s newsletter, it occurred to me that a dedicated treatment to how cryptographers discuss the Birthday Bound for symmetric encryption would be beneficial.

Birthday Bound?

The Birthday Bound is rooted in the so-called Birthday Paradox in probability theory. It goes something like this:

How many people (chosen from a uniform random distribution) would you need to have in the same room in order for there to be a 50% or greater chance that two of them have the same birthday?

Even though there are 366 possible calendar days in the year (365 if you ignore leap years), the answer is only 23.

This observation can tell us something interesting about the collision risk in discrete uniformly random samples.

For example, consider the random number (called an IV in this case) used to encrypt a message with AES-CBC, which is a 128-bit random number. This means that there are $2^{128}$ possible values. We can simply describe this situation for any distribution of $2^n$ possible values; in this case, $n = 128$.

For any random distribution (e.g., random nonces or tweaks for an AEAD scheme) of $2^n$ possible values, you expect to have a 50% chance of collision after about $2^{n/2}$ queries. This is a loose, order-of-magnitude estimate.

From our earlier AES-CBC example, this means that after $2^{64}$ blocks of data, we’d expect a 50% chance that two IVs collide.
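A quick numeric check of both the classic paradox and the order-of-magnitude estimate, as a minimal sketch. The function names are made up for illustration, and the estimate deliberately ignores constant factors, in keeping with the loose approximation used here.

```javascript
// Exact probability that at least two of `q` uniform samples
// drawn from `N` possible values collide.
function collisionProbability(q, N) {
  let noCollision = 1;
  for (let i = 0; i < q; i++) {
    noCollision *= (N - i) / N;
  }
  return 1 - noCollision;
}

// Order-of-magnitude estimate, in log2: p is roughly q^2 / 2^n, capped at 1.
// Takes log2(q) and n so that huge values like 2^128 never need representing.
function log2CollisionEstimate(log2q, n) {
  return Math.min(0, 2 * log2q - n);
}

console.log(collisionProbability(23, 365)); // just over 0.5
console.log(log2CollisionEstimate(64, 128)); // 0: the risk is on the order of 1
console.log(log2CollisionEstimate(32, 96)); // -32
```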

My symmetric wear-out blog post went in-depth about the particulars for specific designs, if you’d like to know how the numbers play out.

Is 50/50 Good Enough?

A security policy that cuts off at a 50% chance of a nonce reuse is not fit for the real world. We would call that a YOLO security policy.

AES-GCM, which accepts a 96-bit nonce, is considered unsafe to use for more than $2^{32}$ random nonces. At this point, the probability of a collision for each subsequent message is greater than or equal to $2^{-32}$.

Consequently, many people consider $2^{-32}$ the point at which the risk exceeds the Birthday Bound for a block cipher mode; after which, a new encryption key must be used.

I certainly don’t fault any security policy that keeps the risk of a bad outcome below the $2^{-32}$ mark, but I think there’s another way to interpret what NIST did with AES-GCM. Namely, the fact that GCM’s risk of $2^{-32}$ is exceeded after $2^{32}$ samples (for some fixed value $n$) offers a nice symmetry to the risk analysis.

Three Birthday Bounds

(I was originally trying to find a wordplay that references the children’s story Six-Dinner Sid for this section, but ran out of steam and pressed publish before finding an appropriate parody.)

Soatok Editorial Note

This gives rise to three different possible values for a given random distribution that can be considered whenever someone says the phrase “birthday bound”.

- 50% collision risk after $2^{n/2}$ samples, which I like to think of as the Hard Birthday Bound.

- $2^{-32}$ collision risk after $2^{(n-32)/2}$ samples, which I like to call the Soft Birthday Bound.

- $2^{-n/3}$ collision risk after $2^{n/3}$ samples, which I like to call the Optimal Birthday Bound.

(These labels are not official; a better naming convention may be worth considering, should this framework for discussion prove useful at all.)
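All three bounds fall out of the same loose approximation. As a sketch, write $q$ for the number of samples and $p$ for the collision probability (both symbols are introduced here for illustration), and ignore constant factors:

$$
p \approx \frac{q^2}{2^n}
$$

$$
\begin{aligned}
\text{Hard:}\quad & p \approx 1 && \Rightarrow\; q^2 \approx 2^{n} && \Rightarrow\; q \approx 2^{n/2} \\
\text{Soft:}\quad & p = 2^{-32} && \Rightarrow\; q^2 = 2^{\,n-32} && \Rightarrow\; q = 2^{(n-32)/2} \\
\text{Optimal:}\quad & p = \tfrac{1}{q} && \Rightarrow\; q^3 = 2^{n} && \Rightarrow\; q = 2^{n/3},\quad p = 2^{-n/3}
\end{aligned}
$$

For a 96-bit nonce ($n = 96$), the Soft exponent is $(96 - 32)/2 = 32$ and the Optimal exponent is $96/3 = 32$, which is why the two coincide for AES-GCM.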

Since we’re still dealing with approximations, a useful technique for calculating $2^{n/3}$ is to just take the cube-root of $2^n$ (a.k.a., divide the exponent by 3).

In the case of AES-GCM, the Soft and Optimal values are equivalent.

In the case of Filippo’s XAES-256-GCM design? They differ quite a bit.

- Soft: $2^{80}$ messages for $2^{-32}$ collision probability.

- Optimal: $2^{64}$ messages for $2^{-64}$ collision probability.

Whereas the Soft and Optimal limits for a 96-bit nonce are the same, with a 192-bit nonce, they differ a lot: Soft is about 65,536 times the size of Optimal.

Does Any Of This Matter?

If your accepted risk is hard-coded at $2^{-32}$, then you don’t need to pass Go or collect $200, because your security policy saves you the headache of having to care about math nerd stuff.

However, I do think that the Optimal Birthday Bound is more useful for risk analysis for one simple reason: This is the point at which the probability of a collision is inversely proportional to the number of samples.

Being able to say, “Encrypting $2^{64}$ messages under the same key incurs a probability of a nonce collision of approximately 1 in $2^{64}$,” is much more deeply satisfying than focusing on $2^{-32}$ as an arbitrary safety limit.

Of course, to most people, $2^{-32}$ and $2^{-64}$ are both such ridiculously small numbers that they “might as well be zero”.

Whenever designing extended-nonce constructions atop AEAD block cipher modes, it may be worthwhile to discuss both the Soft and Optimal limits for nonce collisions.

So long as the people designing extended-nonce constructions at least consider doing this, my work here is done.

CMYKat made this

Can we get a useful table?

Of course!

Generally, you don’t want to consider a probability space smaller than $2^{96}$ (which is the inflection point where Optimal becomes larger than Soft).

Probability Space | Hard Bound | Soft Bound | Optimal Bound

The collision probability for any Optimal Bound is 1 divided by the number of messages.

To use this table, select a probability space from the left column. Then, on the same row, find the cell corresponding to the type of bound you are interested in.
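Rows for such a table can be computed directly from the three definitions. The sketch below is an illustration only: `birthdayBounds` is a hypothetical helper, and the list of probability spaces it prints is an assumed selection, not necessarily the rows of the original table.

```javascript
// Exponents (log2 of the number of messages) for each bound,
// given a probability space of 2^n possible values.
function birthdayBounds(n) {
  return {
    hard: n / 2,        // roughly 50% collision risk
    soft: (n - 32) / 2, // 2^-32 collision risk
    optimal: n / 3,     // 2^(-n/3) collision risk
  };
}

// Format an exponent as a power of two, rounding non-integers to one decimal.
const fmt = (x) => `2^${Number.isInteger(x) ? x : x.toFixed(1)}`;

// Assumed selection of probability spaces, for illustration.
for (const n of [96, 128, 192, 256]) {
  const b = birthdayBounds(n);
  console.log([`2^${n}`, fmt(b.hard), fmt(b.soft), fmt(b.optimal)].join('\t'));
}
```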

https://soatok.blog/2024/07/01/blowing-out-the-candles-on-the-birthday-bound/

#birthdayAttack #birthdayBound #blockCipherModes #symmetricCryptography

If you’ve never heard of NIST SP 800-108 before, or NIST Special Publications in general, here’s a quick primer:

Special Publications are a type of publication issued by NIST. Specifically, the SP 800-series reports on the Information Technology Laboratory’s research, guidelines, and outreach efforts in computer security, and its collaborative activities with industry, government, and academic organizations. These documents often support FIPS (Federal Information Processing Standards).

Via NIST.gov

One of the NIST 800-series documents concerned with Key Derivation using Pseudorandom Functions is NIST SP 800-108, first published in 2009.

In October 2021, NIST published a draft update to NIST SP 800-108 and opened a comment period until January 2022. This update mostly included Keccak-based Message Authentication Codes (KMAC) in addition to the incumbent standardized designs (HMAC and CMAC).

Upon reviewing a proposal for NIST SP 800-108 revision 1 after its comment period opened, Amazon’s cryptographers discovered a novel security issue with the standard.

I was a co-author of the public comment that disclosed this issue, along with Matthew Campagna, Panos Kampanakis, and Adam Petcher, but take no credit for its discovery.

Consequently, Section 6.7 was added to the final revision 1 of the standard to address Key Control Security.

This post examines the attack against the initial SP 800-108 design when AES-CMAC is used as the PRF in KDF Counter mode.

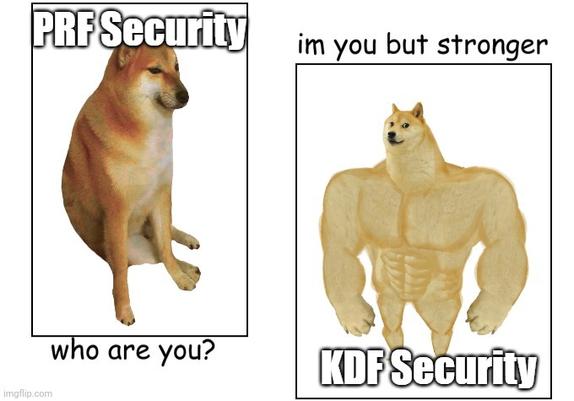

This meme is the TL;DR of this blog post

Preliminaries

(If you’re in a hurry, feel free to skip to the attack.)

NIST SP 800-108 specifies a “KDF in Counter Mode” that can be used with several PRFs, including AES-CMAC. It’s worth noting that this family of KDFs can be defined to use any arbitrary PRF, but only the PRFs approved by NIST for this use are recommended.

AES-CMAC is a one-key CBC-MAC construction. Some cryptographers, such as Matt Green, are famously not fond of CBC-MAC.

KDF Security and PRF Security

Yes, I will take any excuse to turn cryptography knowledge into wholesome memes.

KDF stands for “Key Derivation Function”.

PRF stands for “Pseudo-Random Function”.

The security notion for KDF Security is stronger than PRF Security.

PRFs require a uniformly-distributed secret key, while KDFs can tolerate a key that is not uniformly random.

This matters if you’re, say, trying to derive symmetric encryption keys from a Diffie-Hellman shared secret of some sort, where the output of your DH() function has some algebraic structure.

Realistically, the difference between the two security notions matters a lot less in scenarios where you’re deriving sub-keys from a primary uniformly random cryptographic secret.

However, it does make your proofs nicer to achieve KDF security instead of merely PRF security.
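As a rough illustration of the Diffie-Hellman scenario above, here is a minimal sketch of the extract-then-expand approach from HKDF (RFC 5869), using only Python's standard library. HKDF is my choice for the example and is not part of SP 800-108; the `shared_secret` bytes and the `info` labels are placeholders, not values from any real protocol.

```python
import hashlib
import hmac

HASH_LEN = 32  # SHA-256 output size in bytes


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """Concentrate possibly non-uniform input keying material into a PRK."""
    return hmac.new(salt or bytes(HASH_LEN), ikm, hashlib.sha256).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    """Stretch the PRK into `length` bytes bound to the `info` label."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested output is too long")
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


# Placeholder standing in for a structured DH() output; not a real key exchange.
shared_secret = bytes.fromhex("04" + "ab" * 32)

prk = hkdf_extract(b"", shared_secret)
encryption_key = hkdf_expand(prk, b"example: encryption", 32)
mac_key = hkdf_expand(prk, b"example: authentication", 32)
```

The extract step is what absorbs the algebraic structure of the input; the expand step then behaves like an ordinary PRF keyed by a uniformly random value.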

Key Control Security

Let’s pretend, for simplicity, we have a generic KDF() function that offers KDF Security. We don’t need to know how it works just yet.

Because KDFs are thought of as PRFs, but stronger, it seems perfectly reasonable that you could use KDF() in a setup where multiple inputs are provided, each from a different party, and the output would always be uniformly random.

Further, even if all other parties’ inputs are known, it should remain computationally infeasible for one of the parties to influence the output of KDF() to produce a specific value; e.g. a key with all bits zeroed.

The assumption that this result is computationally infeasible when working with KDF() is referred to as “Key Control Security”.

Loss of Key Control Security in NIST SP 800-108

You already know where this is going…

I’m going to explain the attack by way of example.

If you want a more formal treatment, I believe Appendix B of NIST SP 800-108 rev 1 has what you’re looking for.

Imagine that you’re designing an online two-party private messaging app. To ensure forward secrecy, you implement a forward-secure KDF ratchet, loosely inspired by Signal’s design.

For your KDF, you choose AES-CMAC in Counter Mode, because you’re designing for hardware that has accelerated AES instructions and want to avoid the overhead of hash functions.

(Aside: I guess this would also imply you’re most likely selecting AES-CCM for your actual message encryption.)

With each message, the sender commits some random bytes by encrypting them with their message. The recipient, after verifying the authentication tag and decrypting the message, possesses knowledge of the same random bytes.

Both parties then use the random bytes and the current symmetric key to ratchet forward to a new 128-bit symmetric key.

The million dollar question is: Is this ratcheting protocol secure?

In the case of KDF in Counter Mode with AES-CMAC, if you have more than 16 bytes of input material, the answer is simply: No.

How The Attack Works

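A two-block implementation of this KDF is normally computed as follows, where $E_K$ is AES encryption under the input key $K$ and $K_1$ is the CMAC subkey derived from $K$:

- $C_1 = E_K(M_1)$

- $C_2 = E_K(C_1 \oplus M_2 \oplus K_1)$

- Return $C_2$

Don’t get intimidated by the notation. This is just AES encryption and XOR.

The messages $M_1$ and $M_2$ are defined in the KDF specification. In the scenario we sketched above, we assume the attacker can choose these arbitrarily.

To coerce a recipient to use an arbitrary 128-bit value (i.e., $C_2 = T$), all an attacker needs to do is:

- Calculate $C_1 = E_K(M_1)$

- Let some value $X = D_K(T)$, where $D_K$ is AES decryption under $K$

  - Here, $T$ is the target value.

- Force $C_1 \oplus M_2 \oplus K_1 = X$; that is, set $M_2 = X \oplus C_1 \oplus K_1$

Notice that $C_1$ is the result of encrypting $M_1$, and our attacker’s goal in step 3 can be achieved solely by manipulating $M_2$ (which exists independent of $M_1$)?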

That’s the vulnerability.
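To make the chaining concrete, here is a small Python sketch of the two-block computation and the forged second block. Python's standard library has no AES, so a toy Feistel permutation (`toy_encrypt` / `toy_decrypt`, both hypothetical and not secure) stands in for it; the CMAC subkey step follows the usual CMAC definition for a message made of complete blocks. The attacker is assumed to know the key, as a participant in the protocol would. This shows the structure of the problem only, not the exact byte layout from the standard.

```python
import hashlib

BLOCK = 16  # block size in bytes, as with AES


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def _round(key: bytes, i: int, half: bytes) -> bytes:
    return hashlib.sha256(key + bytes([i]) + half).digest()[: BLOCK // 2]


def toy_encrypt(key: bytes, block: bytes) -> bytes:
    """Toy 4-round Feistel permutation. A stand-in for AES; NOT secure."""
    left, right = block[:8], block[8:]
    for i in range(4):
        left, right = right, xor(left, _round(key, i, right))
    return left + right


def toy_decrypt(key: bytes, block: bytes) -> bytes:
    """Inverse of toy_encrypt."""
    left, right = block[:8], block[8:]
    for i in reversed(range(4)):
        left, right = xor(right, _round(key, i, left)), left
    return left + right


def cmac_subkey_k1(key: bytes) -> bytes:
    """CMAC subkey K1: double E_K(0) in GF(2^128)."""
    l = int.from_bytes(toy_encrypt(key, bytes(BLOCK)), "big")
    k1 = (l << 1) & ((1 << 128) - 1)
    if l >> 127:
        k1 ^= 0x87
    return k1.to_bytes(BLOCK, "big")


def two_block_kdf(key: bytes, m1: bytes, m2: bytes) -> bytes:
    """CBC-MAC style chaining over exactly two complete blocks."""
    c1 = toy_encrypt(key, m1)
    return toy_encrypt(key, xor(xor(c1, m2), cmac_subkey_k1(key)))


def forge_m2(key: bytes, m1: bytes, target: bytes) -> bytes:
    """Pick the second block so that the output equals `target`."""
    c1 = toy_encrypt(key, m1)
    x = toy_decrypt(key, target)
    return xor(xor(x, c1), cmac_subkey_k1(key))


key = bytes(range(16))        # demo key, known to the attacker
m1 = b"first block 0001"      # arbitrary 16-byte first block
target = bytes(16)            # attacker wants an all-zero output key
m2 = forge_m2(key, m1, target)
forced_output = two_block_kdf(key, m1, m2)  # equals `target`
```

Whatever the first block is, one XOR and one decryption are enough to choose the output, which is exactly the loss of key control described above.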

The public comments and Appendix B of the NIST document describe the actual steps of computing the second message block ($M_2$) to force a chosen output ($T$), which involve manipulating the structure of the KDF input to achieve this result.

Feel free to check out both documents if you’re interested in the finer details.

What Can An Attacker Actually Do With This?

If an attacker controls both message blocks, $M_1$ and $M_2$…

Or if an attacker knows some $M_1$ and can control $M_2$…

…then they can force the final KDF output to equal whatever 128-bit value they want you to use.

The most straightforward application of the loss of key control security is to introduce a backdoor into an application.

If the Underhanded Crypto Contest were still running this year, NIST SP 800-108 using AES-CMAC in Counter Mode would be an excellent basis for a contestant.

Does Anyone Actually Use NIST SP 800-108 This Way?

I’m not aware of any specific products or services that use this KDF in this way. I will update this section if someone finds any.

Is This A Deliberate Backdoor in a NIST Standard?

No.

I understand that, in the wake of Dual_EC_DRBG, there is a lot of distrust for NIST’s work on standardized cryptography.

However, I have no specific knowledge to indicate this was placed deliberately in the standard.

It is inaccurate to describe the loss of key control security in this context as a backdoor. Instead, it’s an unexpected property of the algorithms that can be used to create a clever backdoor. These are wildly different propositions.

At least, that was the case until it was disclosed to NIST in January 2022. 🙂

(I’m including an answer to this question, preemptively, in case someone overreacts when I publish this blog post. I hope it proves unnecessary, but I figured some caution was warranted.)

Mitigation Options

If you care about Key Control Security and use NIST SP 800-108, you should use HMAC or KMAC instead of CMAC. Only CMAC is impacted.

Revision 1 of NIST SP 800-108 also outlines another mitigation that involves changing the inputs to include an additional (but reusable) PRF output for every block.

This tweak does make the KDF behave more like our intuition for PRFs, but in my opinion it’s better to avoid using CMAC entirely for KDFs.
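For reference, a minimal sketch of KDF in Counter Mode with HMAC-SHA-256 as the PRF might look like the following. It assumes a 32-bit big-endian encoding for both the counter and the output length, with the counter placed before the fixed input (Label, a zero byte, Context, length in bits); the function name, label, and inputs are placeholders for illustration.

```python
import hashlib
import hmac
import struct


def kdf_counter_hmac_sha256(key_in: bytes, label: bytes, context: bytes, out_len: int) -> bytes:
    """Derive `out_len` bytes as PRF(K_IN, [i] || Label || 0x00 || Context || [L])."""
    fixed_input = label + b"\x00" + context + struct.pack(">I", out_len * 8)
    blocks_needed = -(-out_len // 32)  # ceiling division by the HMAC output size
    output = b""
    for i in range(1, blocks_needed + 1):
        output += hmac.new(key_in, struct.pack(">I", i) + fixed_input, hashlib.sha256).digest()
    return output[:out_len]


# Ratchet step from the earlier example: current key plus the sender's random bytes.
current_key = bytes(16)                 # placeholder key
random_bytes = b"\x01" * 32             # placeholder for the committed random bytes
next_key = kdf_counter_hmac_sha256(current_key, b"example ratchet", random_bytes, 16)
```

Because HMAC hashes the whole input rather than chaining an invertible block cipher, a party who knows the key cannot simply solve for an input that produces a chosen output.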

Why Wasn’t This Widely Publicized?

As interesting and surprising as the loss of Key Control Security in a NIST standard is to cryptography nerds, it’s not exactly like Heartbleed or Log4Shell.

That said, regardless of your personal feelings on NIST, if you’re interested in not having findings like this slip through the cracks in the future, it’s generally worthwhile to pay attention to what NIST is up to.

https://scottarc.blog/2024/06/04/attacking-nist-sp-800-108/

#cybersecurity #framework #KDF #KDFSecurity #KeyDerivationFunctions #NIST #NISTSP800108 #PRFSecurity #security #standards #symmetricCryptography

One of the lessons I learned during my time at AWS Cryptography (and particularly as an AWS Crypto Bar Raiser) is that the threat model for Encryption At Rest is often undefined.

Prior to consulting cryptography experts, most software developers do not have a clear and concise understanding of the risks they’re facing, let alone how or why encrypting data at rest would help protect their customers.

Unsurprisingly, I’ve heard a few infosec thought leader types insist that encryption-at-rest is security theater over the years. I disagree with this assessment in absolute terms, but there is a nugget of truth in that assertion.

The million dollar question.

Let’s explore this subject in a little more detail.

Why should we listen to you about this topic?

(If you don’t need any convincing, feel free to skip this section.)

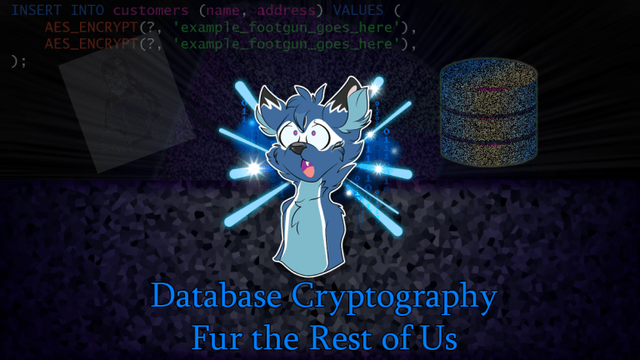

Encryption at rest is a particular hobby horse of mine. I previously wrote on this blog about the under-celebrated design decisions in the AWS Database Encryption SDK and the need for key-committing AEAD modes in multi-tenant data lakes.

Before my time at Amazon, I had also designed a PHP library called CipherSweet that offers a limited type of Searchable Encryption. The goal of CipherSweet was to improve the cryptography used by SuiteCRM. (The library name is, of course, a pun.)

I’ve also contributed a ton of time making cryptography easy-to-use and hard to misuse outside of the narrow use-case that is at-rest data encryption. To that end, I designed PASETO as a secure-by-default alternative to JSON Web Tokens.

I also have a lot of skin in the game when it comes to developer comprehension: I was the first Stack Overflow user with a gold badge for both [security] and [encryption], largely due to the effort I put into cleaning up the bad cryptography advice for the PHP ecosystem.

I have spent the past decade or so trying to help teams avoid security disasters in one form or another.

Why should we not listen to you about this topic?

If you happen to know a cryptography expert you trust more than some Internet stranger with a blog, I implore you to listen to them if we disagree on any point. They may know something I don’t. (That said, I’m always happy to learn something new!)

I also do not have a college degree in Cryptography, nor have I published any papers in prestigious academic journals. If you care very much about this sort of pedigree, you will likely find my words easily discarded. If this describes your situation, no hard feelings.

Why and How to use Encryption At Rest to Protect Sensitive Data

Important: I’m chiefly interested in discussing one use-case, and not focusing on other use cases. Namely, I’m focusing on encryption-at-rest in the narrow context of web applications and/or cloud services.

This is not a comprehensive blog post covering every possible use case or threat model relating to encryption at rest. Those other use cases are certainly interesting, but this post is already long enough with a narrower focus.

If you’re only interested in compliance requirements, you can probably just enable Full Disk Encryption and call it a day. Then, if your server’s hard drive grows legs and walks out of the data center, your users’ most sensitive data will remain confidential.

Unfortunately, for the server-side encryption at rest use case, that’s basically all that Disk Encryption protects against.

If your application or database software is online and an attacker gains access to it (e.g., through SQL injection), with full disk encryption, it might as well be plaintext to the attacker.

It do be like that.

Therefore, if you find yourself reaching for Encryption At Rest to mitigate the impact of the kind of vulnerability that would leak the contents of your database or filesystem to an attacker, you’re probably unwittingly engaging in security theater.

Disk Encryption is important for disk disposal and mitigating hardware theft, not preventing data leakage to online attackers.

So the next logical thing to do is draw a box around the system or component that stores a lot of data and never let plaintext cross that boundary.

Client-Side Encryption

Note: The naming here is a little imprecise. It is client-side encryption with respect to your data warehouse (i.e. SQL database), but not with respect to the user experience of a web application. In those cases, client-side would mean on the actual end user’s device.

Instead, client-side encryption is the generic buzz-word to mean that you’re encrypting data outside of the box you drew in your system architecture. Generally, this means that you have an application server that’s acting as the “client” for the purpose of bulk data encryption.

There are a lot of software projects that aim to provide client-side encryption for data stored in a database or filesystems; e.g., in Amazon S3 buckets.

This is a step in the right direction, but implementation details matter a lot.

Quick aside: For the remainder of this blog post, I’m going to assume an architecture that looks like a traditional web application, for simplicity.

The assumed architecture looks vaguely like this:

- User Agents (e.g., web browsers) that communicate with the application server.

- Application Server(s) that respond to HTTP requests from user agents, manage key material using KMS, and encrypt / decrypt records stored in the database.

- Database Server(s) which store ciphertext on behalf of the application server.

This is an abstract design, so the actual implementation details you encounter in the real world may be simpler or more complex in different respects.

There are other interesting design considerations for OS-level end-user device encryption that I’m not going to explore today. For example: Adiantum is extremely cool.

I’m also not going to dive deep into laptop theft or the importance of Full Disk Encryption as a mechanism for ensuring data is erased from solid state hard drives, or the activities of hostile nation states. That’s a separate discussion entirely.

Security Considerations for Client-Side Encryption

The first question to answer when data is being encrypted is, “How are the keys being managed?” This is a very deep rabbit hole of complexity, but one good answer for a centralized service is, “Cloud-based key management service with audit logging”; i.e. AWS KMS, Google CloudKMS, etc.

Next, you have to understand how the data is being encrypted in the first place.

Bad answer: AES in CBC mode without HMAC.

Worse answer: AES in ECB mode.

Generally, you’re going to want to use an AEAD construction, such as AES-GCM or XChaCha20-Poly1305.

You’ll also want key-commitment if you’re storing data for multiple customers on the same hardware. You can get this property by stapling HKDF onto your protocol (once for key derivation, again for commitment). See also: PASETO v3 and v4.
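A minimal sketch of the "HKDF twice" idea, assuming a random per-record salt that is stored next to the ciphertext. The labels and function names are made up for illustration, and the AEAD encryption step itself is left out because Python's standard library does not include one.

```python
import hashlib
import hmac
import os


def hkdf_sha256(key: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """Compact HKDF (extract, then expand) over SHA-256."""
    prk = hmac.new(salt, key, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def derive_keys(master_key: bytes, salt: bytes) -> tuple[bytes, bytes]:
    """One HKDF call for the encryption key, a second for the commitment."""
    enc_key = hkdf_sha256(master_key, salt, b"example-derive-key")
    commitment = hkdf_sha256(master_key, salt, b"example-commit-key")
    return enc_key, commitment


def check_commitment(master_key: bytes, salt: bytes, stored_commitment: bytes) -> bytes:
    """Return the encryption key only if this master key matches the record."""
    enc_key, commitment = derive_keys(master_key, salt)
    if not hmac.compare_digest(commitment, stored_commitment):
        raise ValueError("key commitment mismatch: wrong key for this record")
    return enc_key


salt = os.urandom(32)
tenant_a_key, tenant_b_key = os.urandom(32), os.urandom(32)
enc_key, commitment = derive_keys(tenant_a_key, salt)  # store salt + commitment with the record
```

Checking the commitment before attempting decryption means a record written under one customer's key cannot be silently accepted under another's.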

It may be tempting to build a committing AEAD scheme out of, e.g., AES-CTR and HMAC, but take care that you don’t introduce canonicalization risks in your MAC.
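To show what a canonicalization risk looks like, here is a small sketch comparing a MAC over naively concatenated fields with one over length-prefixed fields. The encoding is similar in spirit to the pre-authentication encoding used by PASETO, but the helper names and the all-zero demo key are placeholders.

```python
import hashlib
import hmac
import struct


def naive_mac(key: bytes, *pieces: bytes) -> bytes:
    """MAC over plain concatenation: field boundaries are not authenticated."""
    return hmac.new(key, b"".join(pieces), hashlib.sha256).digest()


def length_prefixed(*pieces: bytes) -> bytes:
    """Encode the number of pieces, then each piece with its length, as little-endian 64-bit integers."""
    out = struct.pack("<Q", len(pieces))
    for piece in pieces:
        out += struct.pack("<Q", len(piece)) + piece
    return out


def canonical_mac(key: bytes, *pieces: bytes) -> bytes:
    """MAC over an unambiguous encoding of the same fields."""
    return hmac.new(key, length_prefixed(*pieces), hashlib.sha256).digest()


key = bytes(32)  # demo key only

# ("ab", "c") and ("a", "bc") concatenate to the same bytes, so the naive tags collide.
naive_collides = naive_mac(key, b"ab", b"c") == naive_mac(key, b"a", b"bc")

# With length prefixes the two inputs encode differently, so the tags differ.
canonical_collides = canonical_mac(key, b"ab", b"c") == canonical_mac(key, b"a", b"bc")
```

In an Encrypt-then-MAC design, the fields fed to the MAC (nonce, ciphertext, associated data) should go through an encoding like this so that bytes cannot be shifted from one field into another without changing the tag.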

Is Your Deputy Confused?