Key Transparency and the Right to be Forgotten

This post is the first in a new series covering some of the reasoning behind decisions made in my project to build end-to-end encryption for direct messages on the Fediverse.

(Collectively, Fedi-E2EE.)

Although the reasons for specific design decisions should be immediately obvious from reading the relevant specification (and if not, I consider that a bug in the specification), I believe writing about it less formally will improve the clarity behind the specific design decisions taken.

In the inaugural post for this series, I’d like to focus on how the Fedi-E2EE Public Key Directory specification aims to provide Key Transparency and an Authority-free PKI for the Fediverse without making GDPR compliance logically impossible.

CMYKat’s art, edited by me.

Background

Key Transparency

For a clearer background, I recommend reading my blog post announcing the focused effort on a Public Key Directory, and then my update from August 2024.

If you’re in a hurry, I’ll be brief:

The goal of Key Transparency is to ensure everyone in a network sees the same view of who has which public key.

How it accomplishes this is a little complicated: It involves Merkle trees, digital signatures, and a higher-level protocol of distinct actions that affect the state machine.

If you’re thinking “blockchain”, you’re in the right ballpark, but we aren’t propping up a cryptocurrency. Instead, we’re using a centralized publisher model (per Public Key Directory instance) with decentralized verification.

Add a bit of cross-signing and replication, and you can stitch together a robust network of Public Key Directories that can be queried to obtain the currently-trusted list of public keys (or other auxiliary data) for a given Fediverse user. This can then be used to build application-layer protocols (e.g., end-to-end encryption with an identity key more robust than “trust on first use”, thanks to the audit trail built into the Merkle trees).

I’m handwaving a lot of details here. The Architecture and Specification documents are both worth a read if you’re curious to learn more.
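To make the Merkle tree part a little less abstract, here is a minimal sketch of a tree with inclusion proofs, using only Python's standard library. It is a toy: the tree shape, the hash prefixes, and every function name are assumptions made for illustration, not what Sigsum or the specification actually defines.

```python
import hashlib


def leaf_hash(data: bytes) -> bytes:
    # The 0x00 / 0x01 prefixes keep leaves and interior nodes in separate domains.
    return hashlib.sha256(b"\x00" + data).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def _next_level(level: list[bytes]) -> list[bytes]:
    pairs = [node_hash(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
    if len(level) % 2:
        pairs.append(level[-1])  # an unpaired node is promoted unchanged
    return pairs


def merkle_root(records: list[bytes]) -> bytes:
    level = [leaf_hash(r) for r in records]  # records must be non-empty
    while len(level) > 1:
        level = _next_level(level)
    return level[0]


def inclusion_proof(records: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Return (sibling_hash, sibling_is_on_the_left) pairs, from leaf to root."""
    proof = []
    level = [leaf_hash(r) for r in records]
    while len(level) > 1:
        sibling = index ^ 1
        if sibling < len(level):
            proof.append((level[sibling], sibling < index))
        level = _next_level(level)
        index //= 2
    return proof


def verify_inclusion(record: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    current = leaf_hash(record)
    for sibling, sibling_is_left in proof:
        current = node_hash(sibling, current) if sibling_is_left else node_hash(current, sibling)
    return current == root
```

Anyone holding the root can check a record with `verify_inclusion(record, proof, root)` without downloading the whole log, and changing any record changes the root.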

Right To Be Forgotten

I am not a lawyer, nor do I play one on TV. This is not legal advice. Other standard disclaimers go here.

Okay, now that we’ve got that out of the way, Article 17 of the GDPR establishes a “Right to erasure” for Personal Data.

What this actually means in practice has not been consistently decided by the courts yet. However, a publicly readable, immutable ledger that maps public keys (which may be considered Personal Data) with Actor IDs (which includes usernames, which are definitely Personal Data) goes against the grain when it comes to GDPR.

It remains an open question whether there is public interest in this data persisting in a read-only ledger ad infinitum, which could override the right to be forgotten. If there is, that’s for the courts to decide, not furry tech bloggers.

I know it can be tempting, especially as an American with no presence in the European Union, to shrug and say, “That seems like a them problem.” However, if other folks want to be able to use my designs within the EU, I would be remiss not to at least consider this potential pitfall and try to mitigate it in my designs.

So that’s exactly what I did.

Almost Contradictory

At first glance, the privacy goals of both Key Transparency and the GDPR’s Right To Erasure are at odds.

- One creates an immutable, append-only history.

- The other establishes a right for EU citizens’ history to be selectively censored, which means history has to be mutable.

However, they’re not totally impossible to reconcile.

An untested legal theory circulating around large American tech companies is that “crypto shredding” is legally equivalent to erasure.

Crypto shredding is the act of storing encrypted data, and then when given a legal takedown request from an EU citizen, deleting the key instead of the data.

This works from a purely technical perspective: If the data is encrypted, and you don’t know the key, to you it’s indistinguishable from an encryption of the same number of NUL bytes.

In fact, many security proofs for encryption schemes are satisfied by reaching this conclusion, so this isn’t a crazy notion.

Is Crypto Shredding Plausible?

In 2019, the European Parliamentary Research Service published a lengthy report titled Blockchain and the General Data Protection Regulation which states the following:

Before any examination of whether blockchain technology is capable of complying with Article 17 GDPR; it must be underscored that the precise meaning of the term ‘erasure’ remains unclear.

Article 17 GDPR does not define erasure, and the Regulation’s recitals are equally mum on how this term should be understood. It might be assumed that a common-sense understanding of this terminology ought to be embraced. According to the Oxford English Dictionary, erasure means ‘the removal or writing, recorded material, or data’ or ‘the removal of all traces of something: obliteration’.494

From this perspective, erasure could be taken to equal destruction. It has, however, already been stressed that the destruction of data on blockchains, particularly these of a public and permissionless nature, is far from straightforward.

There are, however, indications that the obligation inherent to Article 17 GDPR does not have to be interpreted as requiring the outright destruction of data. In Google Spain, the delisting of information from research results was considered to amount to erasure. It is important to note, however, that in this case, this is all that was requested of Google by the claimant, who did not have control over the original data source (an online newspaper publication). Had the claimant wished to obtain the outright destruction of the relevant data it would have had to address the newspaper, not Google. This may be taken as an indication that what the GDPR requires is that the obligation resting on data controllers is to do all they can to secure a result as close as possible to the destruction of their data within the limits of [their] own factual possibilities.

Dr Michèle Finck, Blockchain and the General Data Protection Regulation, pp. 75-76

From this, we can kind of intuit that the courts aren’t pedantic: The cited Google Spain case was satisfied by merely delisting the content, not the erasure of the newspaper’s archives.

The report goes on to say:

As awareness regarding the tricky reconciliation between Article 17 GDPR and distributed ledgers grows, a number of technical alternatives to the outright destruction of data have been considered by various actors. An often-mentioned solution is that of the destruction of the private key, which would have the effect of making data encrypted with a public key inaccessible. This is indeed the solution that has been put forward by the French data protection authority CNIL in its guidance on blockchains and the GDPR. The CNIL has suggested that erasure could be obtained where the keyed hash function’s secret key is deleted together with information from other systems where it was stored for processing.

Dr Michèle Finck, Blockchain and the General Data Protection Regulation, pp. 76-77

That said, I cannot locate a specific court decision that affirms that crypto erasure is legally sufficient for complying with data erasure requests (nor any that affirm that it’s necessary).

I don’t have a crystal ball that can read the future on what government compliance will decide, nor am I an expert in legal matters.

Given the absence of a clear legal framework, I do think it’s totally reasonable to consider crypto-shredding equivalent to data erasure. Most experts would probably agree with this. But it’s also possible that the courts could rule totally stupidly on this one day.

Therefore, I must caution anyone that follows a similar path: Do not claim GDPR compliance just because you implement crypto-shredding in a distributed ledger. All you can realistically promise is that you’re not going out of your way to make compliance logically impossible. All we have to go by are untested legal hypotheses, and very little clarity (even if the technologists are near-unanimous on the topic!).

Towards A Solution

With all that in mind, let’s start with “crypto shredding” as the answer to the GDPR + transparency log conundrum.

This is only the start of our complications.

Protocol Risks Introduced by Crypto Shredding

Before the introduction of crypto shredding, the job of the Public Key Directory was simple:

- Receive a protocol message.

- Validate the protocol message.

- Commit the protocol message to a transparency log (in this case, Sigsum).

- Retrieve the protocol message whenever someone requests it to independently verify its inclusion.

- Miscellaneous other protocol things (cross-directory checkpoint commitment, replication, etc.).

Point being: there was very little that the directory could do to be dishonest. If they lied about the contents of a record, it would invalidate the inclusion proofs of every successive record in the ledger.

In order to make a given record crypto-shreddable without breaking the inclusion proofs for every record that follows, we need to commit to the ciphertext, not the plaintext. (And then, when a takedown request comes in, wipe the key.)

Now, things get quite a bit more interesting.

Do you…

- …Distribute the encryption key alongside the ciphertext and let independent third parties decrypt it on demand?

…OR…

- Decrypt the ciphertext and serve plaintext through the public API, keeping the encryption key private so that it may be shredded later?

The first option seems simple, but runs into governance issues: How do you claim the data was crypto-shredded if countless individuals have a copy of the encryption key, and can therefore recover the plaintext from the ciphertext?

I don’t think that would stand up in court.

Clearly, your best option is the second one.

Okay, so how does an end user know that the ciphertext that was committed to the transparency ledger decrypts to the specific plaintext value served by the Public Key Directory? How do users know it’s not lying?

Quick aside: This question is also relevant if you went with the first option and used a non-committing AEAD mode for the actual encryption scheme.

In that scenario, a hostile nation state adversary could pressure a Public Key Directory to selectively give one decryption key to targeted users, and another to the rest of the Internet, in order to perform a targeted attack against citizens they’d rather didn’t have civil rights.

My entire goal with introducing key transparency to my end-to-end encryption proposal is to prevent these sorts of attacks, not enable them.

There are a lot of avenues we could explore here, but it’s always worth outlining the specific assumptions and security goals of any design before you start perusing the literature.

Assumptions

This is just a list of things we assume are true, and do not need to prove for the sake of our discussion here today. The first two are legal assumptions; the remainder are cryptographic.

Ask your lawyer if you want advice about the first two assumptions. Ask your cryptographer if you suspect any of the remaining assumptions are false.

- Crypto-shredding is a legally valid way to provide data erasure (as discussed above).

- EU courts will consider public keys to be Personal Data.

- The SHA-2 family of hash functions is secure (ignoring length-extension attacks, which won’t matter for how we’re using them).

- HMAC is a secure way to build a MAC algorithm out of a secure hash function.

- HKDF is a secure KDF if used correctly.

- AES is a secure 128-bit block cipher.

- Counter Mode (CTR) is a secure way to turn a block cipher into a stream cipher.

- AES-CTR + HMAC-SHA2 can be turned into a secure AEAD mode, if done carefully.

- Ed25519 is a digital signature algorithm that provides strong security against existential forgery under a chosen-message attack (SUF-CMA).

- Argon2id is a secure, memory-hard password KDF, when used with reasonable parameters. (You’ll see why in a moment.)

- Sigsum is a secure mechanism for building a transparency log.

This list isn’t exhaustive or formal, but should be sufficient for our purposes.

Security Goals

- The protocol messages stored in the Public Key Directory are accompanied by a Merkle tree proof of inclusion. This makes it append-only with an immutable history.

- The Public Key Directory cannot behave dishonestly about the decrypted plaintext for a given ciphertext without clients detecting the deception.

- Whatever strategy we use to solve this should be resistant to economic precomputation and brute-force attacks.

Can We Use Zero-Knowledge Proofs?

At first, this seems like an ideal situation for a succinct, non-interactive zero-knowledge proof.

After all, you’ve got some secret data that you hold, and you want to prove that a calculation is correct without revealing the data to the end user. This seems like the ideal setup for Schnorr’s identification protocol.

Unfortunately, the second assumption (public keys being considered Personal Data by courts, even though they’re derived from random secret keys) makes implementing a Zero-Knowledge Proof here very challenging.

First, if you look at Ed25519 carefully, you’ll realize that it’s just a digital signature algorithm built atop a Schnorr proof, which requires some sort of public key (even an ephemeral one) to be managed.

Worse, if you try to derive this value solely from public inputs (rather than creating a key management catch-22), the secret scalar your system arrives at will have been calculated from the user’s Personal Data–which only strengthens a court’s argument that the public key is therefore personally identifiable.

There may be a more exotic zero-knowledge proof scheme that might be appropriate for our needs, but I’m generally wary of fancy new cryptography.

Here are two rules I live by in this context:

- If I can’t get the algorithms out of the crypto module for whatever programming language I find myself working with, it may as well not even exist.

- If a developer needs to reach for a generic Big Integer library (e.g., GMP) for any reason in the course of implementing a protocol, I do not trust their implementation.

Unfortunately, a lot of zero-knowledge proof designs fail one or both of these rules in practice.

(Sorry not sorry, homomorphic encryption enthusiasts! The real world hasn’t caught up to your ideas yet.)

What About Verifiable Random Functions (VRFs)?

It may be tempting to use VRFs (i.e., RFC 9381), but this runs into the same problem as zero-knowledge proofs: we’re assuming that an EU court would deem public keys Personal Data.

But even if that assumption turns out false, the lifecycle of a protocol message looks like this:

- User wants to perform an action (e.g., AddKey).

- Their client software creates a plaintext protocol message.

- Their client software generates a random 256-bit key for each potentially-sensitive attribute, so it can be shredded later.

- Their client software encrypts each attribute of the protocol message.

- The ciphertext and keys are sent to the Public Key Directory.

- For each attribute, the Public Key Directory decrypts the ciphertext with the key, verifies the contents, and then stores both. The ciphertext is used to generate a commitment on Sigsum (signed by the Public Key Directory’s keypair).

- The Public Key Directory serves plaintext to requestors, but does not disclose the key.

- In the future, the end user can demand a legal takedown, which just wipes the key.
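The lifecycle above can be sketched in a few dozen lines. This is a toy model under loud assumptions: the SHAKE-256 keystream is a stand-in cipher so the example runs on the standard library alone, the "log" is a plain Python list rather than Sigsum, nothing is signed, and all of the names (`client_encrypt`, `Directory`, and so on) are hypothetical.

```python
import hashlib
import secrets


def _xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    # Toy stream cipher (SHAKE-256 keystream); a stand-in for the real encryption.
    stream = hashlib.shake_256(key + nonce).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


def client_encrypt(attributes: dict[str, bytes]):
    """Client side: one fresh 256-bit key per attribute."""
    keys, ciphertexts = {}, {}
    for name, value in attributes.items():
        keys[name] = secrets.token_bytes(32)
        nonce = secrets.token_bytes(16)
        ciphertexts[name] = nonce + _xor(keys[name], nonce, value)
    return ciphertexts, keys


def commitment(ciphertexts: dict[str, bytes]) -> bytes:
    """Hash over the ciphertexts only; this is what the log commits to."""
    h = hashlib.sha256()
    for name in sorted(ciphertexts):
        for part in (name.encode(), ciphertexts[name]):
            h.update(len(part).to_bytes(8, "little") + part)
    return h.digest()


class Directory:
    def __init__(self):
        self.log = []      # append-only list of commitments
        self.records = []  # (ciphertexts, keys) for each published message

    def publish(self, ciphertexts: dict[str, bytes], keys: dict[str, bytes]) -> int:
        # A real directory would decrypt and validate the message before accepting it.
        self.records.append((dict(ciphertexts), dict(keys)))
        self.log.append(commitment(ciphertexts))
        return len(self.log) - 1

    def serve(self, index: int) -> dict:
        ciphertexts, keys = self.records[index]
        return {
            name: (_xor(keys[name], ct[:16], ct[16:]) if name in keys else None)
            for name, ct in ciphertexts.items()
        }

    def shred(self, index: int) -> None:
        # Legal takedown: wipe the keys, leave the ciphertext and the log alone.
        self.records[index][1].clear()
```

After `shred()`, `serve()` returns `None` for every attribute, but the entry in `log` is byte-for-byte the same, which is the property that keeps later inclusion proofs valid.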

Let’s assume I wanted to build a VRF out of Ed25519 (similar to what Signal does with VXEdDSA). Now I have a key management problem, which is pretty much what this project was meant to address in the first place.

VRFs are really cool, and more projects should use them, but I don’t think they will help me.

Soatok’s Proposed Solution

If you want to fully understand the nitty-gritty implementation details, I encourage you to read the current draft specification, plus the section describing the encryption algorithm, and finally the plaintext commitment algorithm.

Now that we’ve established all that, I can begin to describe my approach to solving this problem.

First, we will encrypt each attribute of a protocol message, as follows:

- For subkey derivation, we use HKDF-HMAC-SHA512.

- For encrypting the actual plaintext, we use AES-256-CTR.

- For message authentication, we use HMAC-SHA512.

- Additional associated data (AAD) is accepted and handled securely; i.e., we don’t use YOLO as a hash construction.

This prevents an Invisible Salamander attack from being possible.
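As a rough illustration of how those pieces fit together, here is an encrypt-then-MAC sketch. HKDF (RFC 5869) and HMAC-SHA512 come straight from the standard library. The cipher does not: a SHAKE-256 keystream stands in for AES-256-CTR so the example stays dependency-free. The blob layout, the `VERSION` header, the `info` strings, and the little-endian length prefixes are all assumptions for this sketch; the specification defines the real format.

```python
import hashlib
import hmac
import secrets

VERSION = b"sketch-v1"  # hypothetical header, not the specification's


def le64(n: int) -> bytes:
    return n.to_bytes(8, "little")


def hkdf_sha512(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with HMAC-SHA512: extract, then expand."""
    prk = hmac.new(salt, ikm, hashlib.sha512).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha512).digest()
        okm += block
        counter += 1
    return okm[:length]


def _keystream_xor(enc_key: bytes, data: bytes) -> bytes:
    # Stand-in for AES-256-CTR so the sketch runs on the standard library alone.
    stream = hashlib.shake_256(enc_key).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


def _tag(mac_key: bytes, salt: bytes, aad: bytes, body: bytes) -> bytes:
    # Length prefixes keep the AAD and the ciphertext from bleeding into each other.
    msg = VERSION + salt + le64(len(aad)) + aad + le64(len(body)) + body
    return hmac.new(mac_key, msg, hashlib.sha512).digest()


def encrypt_attribute(key: bytes, plaintext: bytes, aad: bytes) -> bytes:
    salt = secrets.token_bytes(32)  # fresh randomness for subkey derivation
    enc_key = hkdf_sha512(key, salt, b"encrypt")
    mac_key = hkdf_sha512(key, salt, b"authenticate")
    body = _keystream_xor(enc_key, plaintext)
    return VERSION + salt + _tag(mac_key, salt, aad, body) + body


def decrypt_attribute(key: bytes, blob: bytes, aad: bytes) -> bytes:
    if len(blob) < len(VERSION) + 96 or not blob.startswith(VERSION):
        raise ValueError("unsupported blob")
    rest = blob[len(VERSION):]
    salt, tag, body = rest[:32], rest[32:96], rest[96:]
    mac_key = hkdf_sha512(key, salt, b"authenticate")
    if not hmac.compare_digest(tag, _tag(mac_key, salt, aad, body)):
        raise ValueError("authentication failed")
    return _keystream_xor(hkdf_sha512(key, salt, b"encrypt"), body)
```

The tag is checked before anything is decrypted, and it covers the header, the salt, the AAD, and the ciphertext, so a blob only opens under the key and AAD it was created with.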

This encryption is performed client-side, by each user, and the symmetric key for each attribute is shared with the Public Key Directory when publishing protocol messages.

If they later issue a legal request for erasure, they can be sure that the key used to encrypt the data they previously published isn’t secretly the same key used by every other user’s records.

They always know this because they selected the key, not the server. Furthermore, everyone can verify that the hash published to the Merkle tree matches a locally generated hash of the ciphertext they just emitted.

This provides a mechanism to keep everyone honest. If anything goes wrong, it will be detected.

Next, to prevent the server from being dishonest, we include a plaintext commitment hash, which is included as part of the AAD (alongside the attribute name).

(Implementing crypto-shredding is straightforward: simply wipe the encryption keys for the attributes of the records in scope for the request.)

If you’ve read this far, you’re probably wondering, “What exactly do you mean by plaintext commitment?”

Art by Scruff.

Plaintext Commitments

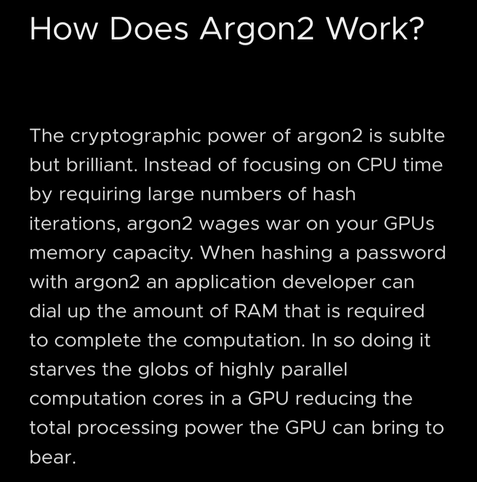

The security of a plaintext commitment is attained by the Argon2id password hashing function.

By using the Argon2id KDF, you can make an effective trapdoor that is easy to calculate if you know the plaintext, but economically infeasible to brute-force attack if you do not.

However, you need to do a little more work to make it safe.

The details here matter a lot, so this section is unavoidably going to be a little dense.

Pass the Salt?

Argon2id expects both a password and a salt.

If you eschew the salt (i.e., zero it out), you open the door to precomputation attacks (see also: rainbow tables) that would greatly weaken the security of this plaintext commitment scheme.

You need a salt.

If you generate the salt randomly, this commitment property isn’t guaranteed by the algorithm. It would be difficult, but probably not impossible, to find two salts ($S_1$, $S_2$) such that $\mathrm{Argon2id}(m_1, S_1) = \mathrm{Argon2id}(m_2, S_2)$.

Deriving the salt from public inputs eliminates this flexibility.

By itself, this reintroduces the risk of making salts totally deterministic, which reintroduces the risk of precomputation attacks (which motivated the salt in the first place).

If you include the plaintext in this calculation, it could also create a crib that gives attackers a shortcut for bypassing the cost of password hashing.

Furthermore, any two encryption operations that act over the same plaintext would, without any additional design considerations, produce an identical value for the plaintext commitment.

Public Inputs for Salt Derivation

The initial proposal included the plaintext value for Argon2 salt derivation, and published the salt and Argon2 output next to each other.

Hacker News user comex pointed out a flaw with this technique, so I’ve since revised how salts are selected to make them independent of the plaintext.

The public inputs for the Argon2 salt are now:

- The version identifier prefix for the ciphertext blob.

- The 256-bit random value used as a KDF salt (also stored in the ciphertext blob).

- A recent Merkle tree root.

- The attribute name (prefixed by its length).

These values are all hashed together with SHA-512, and then truncated to 128 bits (the length required by libsodium for Argon2 salts).

This salt is not stored, but can deterministically be calculated from public information.
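A minimal sketch of that derivation follows. Several details are assumptions on my part: the byte order of the length prefix (little-endian here), the value of the domain separation constant (the algorithm listing later in this post includes one, so the sketch does too), and the function and parameter names. Taking the rightmost 16 bytes follows that same listing.

```python
import hashlib

DOMAIN = b"example-plaintext-commitment-salt"  # hypothetical constant


def derive_salt(
    header_prefix: bytes,
    kdf_randomness: bytes,
    recent_merkle_root: bytes,
    attribute_name: str,
    domain: bytes = DOMAIN,
) -> bytes:
    """Hash the public inputs with SHA-512 and keep 128 bits as the Argon2 salt."""
    name = attribute_name.encode("utf-8")
    h = hashlib.sha512()
    for part in (
        domain,
        header_prefix,
        kdf_randomness,
        recent_merkle_root,
        len(name).to_bytes(8, "little"),  # 64-bit length prefix (byte order assumed)
        name,
    ):
        h.update(part)
    return h.digest()[-16:]  # rightmost 16 bytes
```

Every input is public, so anyone can recompute the salt, and none of them depends on the plaintext.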

Crisis Averted?

This sure sounds like we’ve arrived at a solution, but let’s also consider another situation before we declare our job done.

High-traffic Public Key Directories may have multiple users push a protocol message with the same recent Merkle root.

This may happen if two or more users query the directory to obtain the latest Merkle root before either of them publish their updates.

Later, if both of these users issue a legal takedown, someone might observe that the recent-merkle-root is the same for two messages, but their commitments differ.

Is this enough leakage to distinguish plaintext records?

In my earlier design, we needed to truncate the salt and rely on understanding the birthday bound to reason about its security. This is no longer the case, since each salt is randomized by the same random value used in key derivation.

Choosing Other Parameters

As mentioned a second ago, we set the output length of the Argon2id KDF to 32 bytes (256 bits). We expect the security of this KDF to exceed $2^{128}$, which to most users might as well be infinity.

With apologies to Filippo.

The other Argon2id parameters are a bit hand-wavey. Although the general recommendation for Argon2id is to use as much memory as possible, this code will inevitably run in some low-memory environments, so asking for several gigabytes isn’t reasonable.

For the first draft, I settled on 16 MiB of memory, 3 iterations, and a parallelism degree of 1 (for widespread platform support).

Plaintext Commitment Algorithm

With all that figured out, our plaintext commitment algorithm looks something like this:

1. Calculate the SHA512 hash of:

   - A domain separation constant

   - The header prefix (stored in the ciphertext)

   - The randomness used for key-splitting in encryption (stored in the ciphertext)

   - Recent Merkle Root

   - Attribute Name Length (64-bit unsigned integer)

   - Attribute Name

2. Truncate this hash to the rightmost 16 bytes (128 bits). This is the salt.

3. Calculate Argon2id over the following inputs concatenated in this order, with an output length of 32 bytes (256 bits), using the salt from step 2:

   - Recent Merkle Root Length (64-bit unsigned integer)

   - Recent Merkle Root

   - Attribute Name Length (64-bit unsigned integer)

   - Attribute Name

   - Plaintext Length (64-bit unsigned integer)

   - Plaintext

The output (step 3) is included as the AAD in the attribute encryption step, so the authentication tag is calculated over both the randomness and the commitment.

To verify a commitment (which is extractable from the ciphertext), simply recalculate the commitment you expect (using the recent Merkle root specified by the record), and compare the two in constant-time.

If they match, then you know the plaintext you’re seeing is the correct value for the ciphertext value that was committed to the Merkle tree.

If the encryption key is shredded in the future, an attacker without knowledge of the plaintext will have an enormous uphill battle recovering it from the KDF output (and the salt will prove to be somewhat useless as a crib).
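Here is a sketch of the final hashing step and the verification check, taking the salt as an input. One substitution to be loud about: Python's standard library has no Argon2id, so `hashlib.scrypt` stands in as a different memory-hard KDF purely to keep the example runnable. The design itself calls for Argon2id with 16 MiB of memory, 3 iterations, and a parallelism of 1. The little-endian length encoding and the function names are also assumptions.

```python
import hashlib
import hmac


def le64(n: int) -> bytes:
    return n.to_bytes(8, "little")  # byte order is an assumption of this sketch


def plaintext_commitment(
    salt: bytes, recent_merkle_root: bytes, attribute_name: str, plaintext: bytes
) -> bytes:
    name = attribute_name.encode("utf-8")
    # Length-prefix every field so the concatenation is unambiguous.
    password = (
        le64(len(recent_merkle_root)) + recent_merkle_root
        + le64(len(name)) + name
        + le64(len(plaintext)) + plaintext
    )
    # Stand-in KDF: scrypt (about 16 MiB with these parameters), NOT Argon2id.
    return hashlib.scrypt(password, salt=salt, n=2**14, r=8, p=1, dklen=32)


def verify_commitment(
    expected: bytes,
    salt: bytes,
    recent_merkle_root: bytes,
    attribute_name: str,
    claimed_plaintext: bytes,
) -> bool:
    actual = plaintext_commitment(salt, recent_merkle_root, attribute_name, claimed_plaintext)
    return hmac.compare_digest(expected, actual)  # constant-time comparison
```

A client that receives plaintext from the directory would recompute the commitment this way and reject the response if `verify_commitment` returns `False`.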

Caveats and Limitations

Although this design does satisfy the specific criteria we’ve established, an attacker that already knows the correct plaintext can confirm that a specific record matches it via the plaintext commitment.

This cannot be avoided: If we are to publish a commitment of the plaintext, someone with the plaintext can always confirm the commitment after the fact.

Whether this matters at all to the courts is a question for which I cannot offer any insight.

Remember, we don’t even know if any of this is actually necessary, or if “moderation and platform safety” is a sufficient reason to sidestep the right to erasure.

If the courts ever clarify this adequately, we can simply publish the mapping of Actor IDs to public keys and auxiliary data without any crypto-shredding at all.

Trying to attack it from the other direction (download a crypto-shredded record and try to recover the plaintext without knowing it ahead of time) is the attack angle we’re interested in.

Herd Immunity for the Forgotten

Another interesting implication that might not be obvious: The more Fediverse servers and users publish to a single Public Key Directory, the greater the anonymity pool available to each of them.

Consider the case where a user has erased their previous Fediverse account and used the GDPR to also crypto-shred the Public Key Directory entries containing their old Actor ID.

To guess the correct plaintext, you must not only brute-force guessing possible usernames, but also permute your guesses across all of the instances in scope.

The more instances there are, the higher the cost of the attack.
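The arithmetic behind that claim is simple enough to write down. This is a back-of-the-envelope sketch with made-up inputs: the function names are hypothetical, and it assumes every guess costs one full password-hash evaluation that cannot be shared across records, since each record has its own salt.

```python
def guess_space(candidate_usernames: int, candidate_instances: int) -> int:
    """Every (username, instance) pair is a distinct Actor ID the attacker must try."""
    return candidate_usernames * candidate_instances


def expected_cpu_seconds(
    candidate_usernames: int, candidate_instances: int, seconds_per_guess: float
) -> float:
    # On average, the right guess turns up after searching half of the space.
    total = guess_space(candidate_usernames, candidate_instances)
    return total * seconds_per_guess / 2
```

For example, `guess_space(10_000_000, 1)` versus `guess_space(10_000_000, 20_000)` shows how the same username dictionary becomes 20,000 times more expensive to search once that many instances publish to the same directory.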

Recap

I tasked myself with designing a Key Transparency solution that doesn’t make complying with Article 17 of the GDPR nigh-impossible. To that end, crypto-shredding seemed like the only viable way forward.

A serialized record containing ciphertext for each sensitive attribute would be committed to the Merkle tree. The directory would store the key locally and serve plaintext until a legal takedown was requested by the user who owns the data. Afterwards, the stored ciphertext committed to the Merkle tree is indistinguishable from random for any party that doesn’t already know the plaintext value.

I didn’t want to allow Public Key Directories to lie about the plaintext for a given ciphertext, given that they know the key and the requestor doesn’t.

After considering zero-knowledge proofs and finding them to not be a perfect fit, I settled on designing a plaintext commitment scheme based on the Argon2id password KDF. The KDF salts can be calculated from public inputs.

Altogether, this meets the requirements of enabling crypto-shredding while keeping the Public Key Directory honest. All known attacks for this design are prohibitively expensive for any terrestrial threat actors.

As an added bonus, I didn’t introduce anything fancy. You can build all of this with the cryptography available to your favorite programming language today.

Closing Thoughts

If you’ve made it this far without being horribly confused, you’ve successfully followed my thought process for developing message attribute shreddability in my Public Key Directory specification.

This is just one component of the overall design proposal, but one that I thought my readers would enjoy exploring in greater detail than the specification needed to capture.

Header art: Harubaki, CMYKat.

(This post was updated on 2024-11-22 to replace the incorrect term “PII” with “personal data”. Apologies for the confusion!)

#Argon2 #crypto #cryptography #E2EE #encryption #FederatedPKI #fediverse #passwordHashing #symmetricCryptography