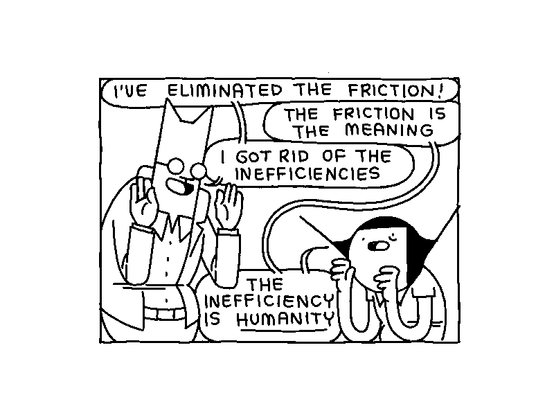

"The friction is the meaning"

https://catandgirl.com/the-genius/

via @tehn on izzzzzi

Assistant Professor at Georgia Tech's School of Interactive Computing.

I do research at the intersection of #CognitiveSystems, #AI, #HCI, #CognitiveScience, and #LearningScience. My work focuses on understanding how people teach and learn and building computational systems that can teach and learn like people do.

Outside work, I am into #dogs, #backyardchickens, #beekeeping, and #sourdough. I love #scifi and #fantasy.

The student approached the Master and said "He wants to put a million people on Mars by 2040! That's so amazing!"

The Master replied. "I have a better plan. I will put a million people on Antarctica by 2040."

"But that sounds fucking insane. Why would you want to do something that stupid? It's a barren wasteland that's difficult to populate and would provide us with absolutely nothing!"

At that moment, the student was enlightened.

If you were to write a more inclusive history of #HCI, whom would you include?

Context: prepping for my user-centered interface design course, the day on historical foundations is ALL WHITE DUDES: Vannevar Bush, Ivan Sutherland, Douglas Engelbart, J.C.R. Licklider, Alan Kay, Ted Nelson, etc etc...

Who else belongs in this canon?

You may not believe in The Gritch, but The Gritch is still coming to town

Working on revisions of a dissertation proposal with one of my PhD students. It's a slog but we'll get there by New Year's Day.

“Creating AI caricatures of disabled people does not help us dismantle systemic ableism. By taking us out of the equation, and positioning fake, digital characters as more credible narrators of our stories than we who actually live those stories, accessibility is literally dehumanized.”

Ashlee M Boyer’s response to an “AI”-enabled “accessibility” product is very good, and worth your time: https://ashleemboyer.com/blog/how-to-dehumanize-accessibility-with-ai/

I'm teaching my first lecture at the new job today, about probabilistic logic programming, probabilistic inference, and (weighted) model counting.

Some of the required reading is a paper (https://eccc.weizmann.ac.il/eccc-reports/2003/TR03-003/index.html) that was written by a great mentor of mine, prof. dr. Fahiem Bacchus. He passed away just over 2 years ago, and I am honoured to keep his memory alive by teaching his ideas to a new generation of students. Hope to do him proud. 🌱

Please send good vibes? 🥺

#AcademicChatter #AcademicLife #AcademicMastodon #Teaching #Probability #ProbabilisticInference #Probabilities #Logic #LogicProgramming #PropositionalModelCounting #ProbabilisticLogicProgramming #ModelCounting #PropositionalLogic #WeightedModelCounting #DPLL #BayesianProbability #BayesNets #BayesianStatistics #BayesianInference #BayesianNetworks #KnowledgeCompilation #DecisionDiagrams #BinaryDecisionDiagrams

There's a movement in neuroscience suggesting we should be pursuing bigger bets with larger teams. I think there's a case for doing a bit of this, but I think it's a bad idea to prioritise it for two reasons, and a good case for saying we should be moving in the exact opposite direction.

Bigger bets generally mean less money available for the smaller bets. This means fewer ideas being pursued. Similarly, larger teams mean fewer people in leadership roles with ownership over their research direction. Again, fewer ideas and less diversity.

This is bad for neuro because most ideas have turned out to be wrong in the sense that they haven't moved us closer to a global understanding of how our brains work. (By this I don't mean that they were bad ideas, or that they weren't worth pursuing!) This is maybe controversial so let me explain.

When I read papers from 50 or 100 years ago speculating about how the brain might work, I don't see a huge difference with how we talk about it now. Few (none?) of the big debates have been settled: innate versus learned, spikes versus rates, behaviourism versus cognitivism, ...

The second reason big bets/teams shouldn't be our focus is that it limits opportunities for the autonomy and development of less senior researchers. Let's be honest, if we start pursuing 'big bets' those bets will be the bets of the most senior scientists, not the junior ones.

Junior scientists will - to an even greater extent than currently - be relegated to implementing the ideas of senior scientists. That's bad for diversity of ideas, but it's also bad for developing a new generation of thinkers who might be able to break us out of our (proven unsuccessful) patterns.

That's why I think we should go in the opposite direction. Empower junior scientists. Don't make junior PIs beg for grant funding in competitions decided by the opinions of senior scientists. Don't make them postdocs subordinate to their supervisors.

Instead, fund junior scientists directly and independently. Let senior scientists compete with each other to work with these talented younger scientists. Give senior scientists a non-binding advisory role to help develop the talents of the junior scientists.

If we think larger teams might be important, let's find ways to empower groups of secure and independent scientists to work together positively and voluntarily - because it makes their work more impactful - rather than negatively and through necessity - because they need to get a job.

UPI: Oregon city asks locals to stop putting googly eyes on sculptures https://www.accuweather.com/en/weather-news/oregon-city-asks-locals-to-stop-putting-googly-eyes-on-sculptures/1724494 #googlyeyes

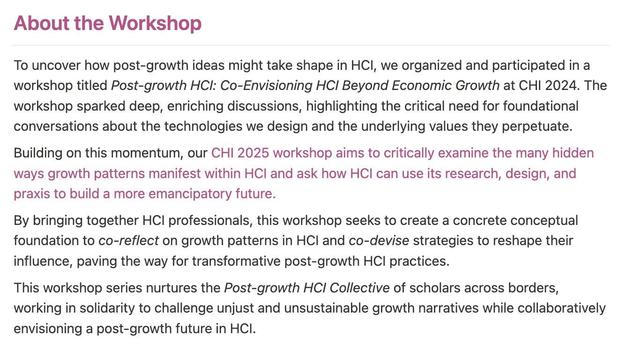

Join us at #CHI2025 for Advancing Post-growth HCI—a hybrid workshop to critically examine the hidden ways growth patterns manifest within HCI and how they can be challenged.

Online + on-site

Submit by Feb 13, 2025

Details: http://bit.ly/4gBS6Ys

w/ @neha @naveena @cbecker @hongjinlin @martintom #shaowenbardzell #anupriyatuli #asrakaeenwani #jaredkatzman

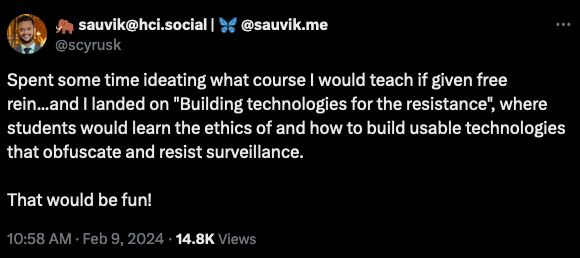

Just wrapped up teaching the first ever installment of my "Building Technologies for the Resistance" class — and what a privilege!

Students worked to identify unjust power disparities, and explored how different anarchist design principles could be used to help.

Syllabus: bit.ly/btr-syllabus

With permission, in the coming weeks I'll share a few interesting projects that emerged.

Incredible. Apple Intelligence summarized BBC news to claim Luigi had shot himself. This not only had not happened but was not something BBC reported.

AI news summaries are a terrible idea because "just making up shit" is basically an unsolvable problem in LLMs

https://bbc.com/news/articles/cd0elzk24dno

Ugh, just watched the video for Google's new quantum computer, Willow.

I'm all for cool new kinds of computing, and Willow seems like a big advance, but the video is just... very misleading.

They start by implying that quantum computers are somehow more "natural" and contextually aware, like they're "in tune with the invisible web of life" or some nonsense like that. What does that have to do with anything?

They really minimize the fact that there are still only a handful of things we know how to do with a quantum computer, and they're not especially useful yet.

And the whole thing is branded as "Quantum AI" even though they did not discuss any AI applications.

Seems like Google continues to use their quantum research as a bullshit self-promotion exercise, which is a shame, really.

Today: our next talk in the CCCM seminar series on "The Cognitive Science of Generative AI"

Anya Ivanova

Psychology, Georgia Institute of Technology

on:

Dissociating language and thought in humans and in machines

(5 Dec 2024, 16:00 GMT, online)

Donald Knuth will be giving his annual Christmas tree lecture Thursday next week. It'll be about strong and weak components in directed graphs and Tarjan's algorithm for computing them. I'm planning to go! It looks like it'll also be streamed online. https://www-cs-faculty.stanford.edu/~knuth/musings.html

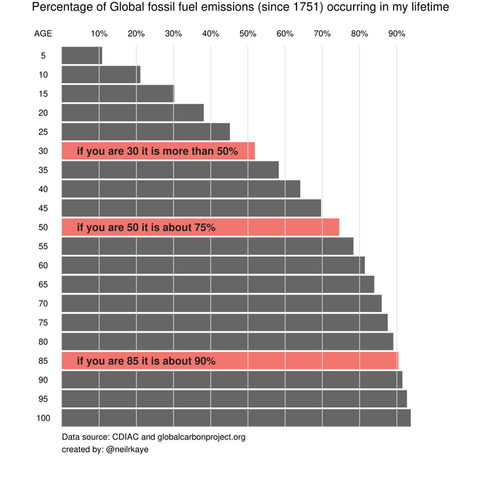

If you're aged 30 or more, then 50% of all human fossil fuel emissions happened during your lifetime