I just completed "Secret Entrance" - Day 1 - Advent of Code 2025 #AdventOfCode https://adventofcode.com/2025/day/1

Data/ML engineer at Blue Yonder. Enjoy running, birding, learning Korean, Go/Weiqi/Baduk. Nerd with interest in open source. Emacs user. Living in Cologne.

E.g.: As a bird-lover I first learned that 새 means bird. When I later learned that it also means "new ..." (as a determiner), I imagined a newborn baby bird. This was before I had learned about mnemonics; the image just popped into my mind. In the past I felt bad about this association, because the roots and Hanja of those words are different and it felt like a wrong association. But after reading about memory I was freed from that guilt: this is just how memory works. Over time, with more exposure, I built more "correct" associations with those words and can now use them without relying on the mnemonic device.

Recently I've been exploring #memory techniques. Reading some books like Boris N. Konrad's "Mehr Platz im Gehirn" and "The Memory Book" by H. Lorayne and trying techniques like the #memorypalace, linking and mnemonic images.

I used to think that pure spaced repetition with #Anki was all I needed. But a better understanding of how memory works can help. E.g. I realized how important it is to associate new information with what's already in long-term memory (ideally in a vivid way).

This explains why #Korean vocabulary is initially so much more difficult to remember than e.g. a French word (as a German): there are far fewer similarities between the languages and thus fewer hooks on which to hang the new words.

I'm now more confident using absurd mnemonic images (e.g. similar-sounding words in my L1) for the initial learning of the more difficult words in Anki. But I'm also emphasizing input more and more, as it helps build associations through stories and context.

#languagelearning #artofmemory #SRS

@b0rk In the past I never thought about it more than just "sends a sigterm" but recently read the blog post https://www.warp.dev/blog/what-happens-when-you-open-a-terminal-and-enter-ls and learned about the communication between the terminal emulator, PTY and shell.

Bought a #Kobo Libra Color #ereader with my first bonus at work and am very happy with it. I'm trying to do more long-form reading again to replace doom-scrolling, since the day-to-day news is very unsettling these days. I installed #koreader and am using #wallabag to send online content to the e-reader. Currently reading some programming #ebooks for work and some self-help books, but maybe I'll read some fiction again. So far the only fiction I've read this year is "The Vegetarian". Reminder: I also have an account at @meliache@bookrastinating.com

#personal update: After 6 months I can say I'm very happy at my job as an ML engineer, enjoying the team and the coding. But my #Korean learning has suffered; I had put it a bit on ice (apartment search and marathon training took up a lot of free time). I'm happy that I will soon visit #Korea for the first time, around Christmas. Very excited to see the country and culture, immerse myself in Korean and try the food.

Another thing that "suffered" was my screen time outside work and my social media consumption, which I think is good but I also feel a bit bad about being less active on the fediverse.

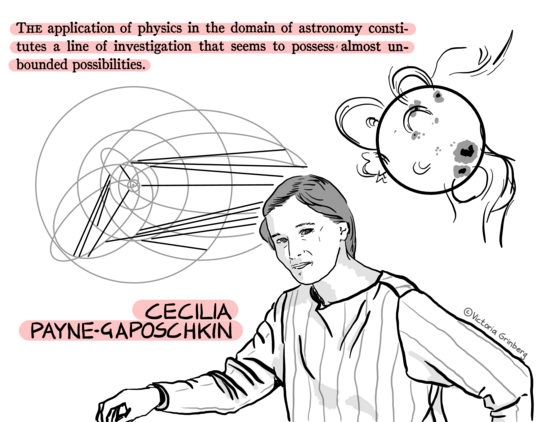

It's only since Cecilia Payne's PhD thesis in 1925 that we know what the stars - and our Sun - are made of: mostly hydrogen.

Her thesis was described as "the most brilliant PhD thesis ever written in astronomy" and it is extremely readable: https://ui.adsabs.harvard.edu/abs/1925PhDT.........1P/abstract

Yet it took until 1956, 10 years before her retirement, for her to become a full professor - because women were barred from becoming full professors at Harvard.

(Posted because she was born #OTD).

@gaycookie @ramones That's a horrible thing to say, but even if a reaction is understandable, nothing except self-defense justifies physical violence. That's immature and unprofessional; everybody should have learned as a young child that violence is not acceptable and that the only thing it proves is that one can't control one's emotions. Still, as a human I sometimes lose control of my emotions as well. It's understandable, but that doesn't protect me from the consequences of my actions. I don't know the source of those rumors and don't want to speculate. But if the rumors are true, then I think it's justified for the EBU to take action.

Four new alternate land structures added recently to HyperRogue! As usual, the great walls are straight lines.

(a) great walls following a periodic pattern

(b) lots of great walls crossing at 90° angles

(c) lots of great walls crossing at 60° angles, with some surprises waiting in the corners.

(d) no great walls, but using the "landscape method" to determine the boundaries between lands.

@qbi The 161 km on their own aren't the problem. On top of that come 16,500 m of accumulated elevation gain cross-country, so in terms of elevation it's like climbing Mount Everest twice. And then there's having to navigate while sleep-deprived. There were many years without a single finisher. This year there was a record of 5 finishers, but that's down to the quality of the participants, not because the race was easier this year.

@pkw @e11bits @peter_sc @galdor @thuna_cing @nlovsund @mdk

That's a good start! However, you can activate virtual environments from Emacs using pyvenv (https://github.com/jorgenschaefer/pyvenv), which lets you use `M-x pyvenv-activate`. You can also add a dir-local entry to activate the environment automatically when you enter a project, via a line like

```
(pyvenv-activate . "/path/to/venv")
```

on the same level as `eglot-workspace-configuration`.
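
For illustration, a complete `.dir-locals.el` might look roughly like the sketch below. It assumes `pyvenv-tracking-mode` is enabled in your init file (as far as I know, pyvenv only acts on a buffer-local `pyvenv-activate` while that mode is on). The venv path and the pylsp setting are placeholders, not a recommendation.

```elisp
;; .dir-locals.el in the project root (illustrative sketch).
;; `nil' applies the settings to every major mode in the project.
((nil . ((pyvenv-activate . "/path/to/venv") ; placeholder path
         ;; Example Eglot setting; adjust or drop as needed.
         (eglot-workspace-configuration
          . (:pylsp (:plugins (:jedi_completion (:enabled t))))))))
```

Emacs may ask you to confirm the local variables as safe the first time you open a file in the project.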

I usually never restart my #Emacs session, just keep one Emacs server open for all my projects, so it's good we can manage environments from within Emacs.

Btw, if you want a pretty dropdown menu for completion, and possibly auto-completion (no tab-pressing), you can try #corfu (https://github.com/minad/corfu) or the older company-mode (https://company-mode.github.io). The latter (#Company) has some historical baggage, but is more batteries-included, while corfu is a bit more modular.

Jasmin Paris, first woman finisher of the Barkley Marathons. Incredible achievement #bm100

@pkw #Eglot and #lspmode are both #LSP clients for #Emacs; use either one. Eglot is built into Emacs 29 and upwards and is meant to integrate with existing Emacs utilities, while LSP-mode has some more bells and whistles. I found I don't need those and use Eglot. As the language server I use #pylsp, which by default uses the #jedi Python package for completion. You don't need a jedi-specific Emacs package anymore if you use LSP, but you should still install the Python package.

@rjayasinghe @moelholm Exactly, plus the physical challenge of 54,200 feet (16,500 m) of accumulated vertical climb (according to Wikipedia) over off-road terrain. Just saying "100 miles" undersells the challenge.

The Barkley Marathons have started for 2024!! #bm100 (it was traditionally on April Fools, but these days it varies a bit to throw off would-be spectators).

Instead, there's a mastodon-bot that reposts Keith Dunn's tweets (the best way to get news): https://social.running.cafe/@KeithDunn

And also: https://barkley.ferrett.io/

And if you don't know what I'm talking about, this documentary is still as good a place to start as any: https://barkleymovie.com/

If you had code on GitHub at any point it looks like it might be included in a large dataset called “The Stack” — If you want your code removed from this massive “ai” training data go here:

https://huggingface.co/spaces/bigcode/in-the-stack

I found two of my old GitHub repos in there. Both were deleted last year and both were private. This is a serious breach of trust by GitHub and @huggingface.

Remove all your code from GitHub.

CONSENT IS NOT OPT-OUT.

Edit — thanks for all the replies. More context here: https://hachyderm.io/@joeyh/112105744123363587

Also, I'm sure the repos of mine that I found were private. But even if they were public at some point in the past, for a brief time, that isn’t my consent to use them for purposes beyond their intent.

---

Edit 2 -- I see this made it to HN, which is a level of attention I do not want nor appreciate....

For all those wondering about the private repo issue -- No, I am not 100% sure that these ancient repos weren't at some point public for a split second before I changed it. I do know that they were never meant for this and that one of them didn't even contain any code.

If my accidentally making a repo public for a moment just so happened to overlap with this scraping, then I guess that's possible. But it in no way invalidates the issues, or the anger that I feel about it.