https://www.europesays.com/us/10955/ Yolanda Hadid claps back after posting ‘inappropriate’ pic of Gigi’s daughter Khai #Bravo #CelebrityChildren #CelebrityMoms #CelebrityNews #CelebritySocialMedia #Entertainment #GigiHadid #grandparents #Instagram #models #Parents #RealHousewivesOfBeverlyHills #RealityStars #UnitedStates #US #YolandaHadid

#ModelS

🔗 My AI agents are all nuts

But I’ve been the first responder on an incident and fed 4o — not o4-mini, 4o — log transcripts, and watched it in seconds spot LVM metadata corruption issues on a host we’ve been complaining about for months. Am I better than an LLM agent at interrogating OpenSearch logs and Honeycomb traces? No. No, I am not.

Feeding error logs to AI is a game of hit and miss. Sometimes, you find a clue, and sometimes, the issue is so out of its reach that it sends you off on a wild goose chase where you waste hours testing and verifying every single one of the agent’s leads.

Niki Heikkilia has a breakdown of arguments in response to “My AI Skeptic Friends are All Nuts”, a post by Thomas Ptacek that made the rounds last week arguing that LLMs must be adopted for coding assistance.

Given the perverse incentives of traffic and attention, I know it will take time, but serious people should treat LLMs as a normal technology. Figure out where they are beneficial to you and use them as tools.

Related to the blockquote here, though, I find it useful to think about LLM usage from a phase-space perspective. If your question (and context window) involves something that has established patterns, with a lot of usage and writing on the web in the last 2-10 years, then it is highly likely that the LLM is going to be useful to you.

In that spirit, I found this list of arguments about why an AI agent might not work to be a great one to keep in mind when leveraging LLMs:

Here’s a summarised list of everything that still requires improvement regarding agentic programming.

- Never take a rule for granted. Agents are more than willing to bend and break them.

- The more rules you impose on agents, the less they obey you. Talk about a robot uprising!

- Agents get stuck easily, retrying the wrong fix again and again.

- By default, agents touch every file and run every shell command unless you tell them not to. This is a hazardous risk.

- The code agents write for production and tests is incredibly bloated and complicated. To continue from that point would take a significant amount of time, converging towards net zero in productivity gains.

- Agents optimise for the number of lines delivered, which makes reviewing and maintaining their code risky and expensive.

- Agents fill their context window and burn through tokens faster than you realise. This leads to context-switching as you switch to a new thread, requiring an agent to relearn everything.

- Agents rapidly dispatch parallel API requests, often causing your computer to become rate-limited. This abruptly stops the flow since you must wait until the rate limits wear off.

- Most people won’t throw away their AI-generated prototypes but continue to use them in production instead. We have witnessed this long before AI and will continue to witness it for the foreseeable future.

- Lastly, working with agents is far from fun. I acknowledge it might affect my overall opinion, but I stand behind it.
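Two of the points above, agents running any shell command they like and agents retrying the same wrong fix, can be partly contained with a thin check in front of command execution. The following is a minimal sketch under assumptions, not something from the quoted post: `CommandGuard`, `ALLOWED_PROGRAMS`, and `MAX_IDENTICAL_ATTEMPTS` are hypothetical names, and the allowlist contents are placeholders you would adapt to your own project.

```python
import shlex

# Hypothetical allowlist: the only programs an agent may invoke.
ALLOWED_PROGRAMS = {"ls", "cat", "git", "pytest"}
# Hypothetical budget: how often the exact same command may be proposed.
MAX_IDENTICAL_ATTEMPTS = 2
# Shell operators are rejected outright so commands cannot be chained.
SHELL_METACHARACTERS = set(";|&><`$")


class CommandGuard:
    """Decide whether an agent-proposed command may run."""

    def __init__(self, allowed=ALLOWED_PROGRAMS, max_repeats=MAX_IDENTICAL_ATTEMPTS):
        self.allowed = set(allowed)
        self.max_repeats = max_repeats
        self.attempts = {}  # normalised command -> times proposed

    def check(self, command: str) -> tuple[bool, str]:
        if SHELL_METACHARACTERS & set(command):
            return False, "shell operators are not allowed"
        try:
            tokens = shlex.split(command)
        except ValueError:
            return False, "unparseable command"
        if not tokens:
            return False, "empty command"
        if tokens[0] not in self.allowed:
            return False, f"program not allowlisted: {tokens[0]}"
        key = " ".join(tokens)
        self.attempts[key] = self.attempts.get(key, 0) + 1
        if self.attempts[key] > self.max_repeats:
            return False, "same command retried too often"
        return True, "ok"


guard = CommandGuard()
for proposed in ["git status", "rm -rf /", "ls && rm -rf /"]:
    allowed, reason = guard.check(proposed)
    print(f"{proposed!r}: {'run' if allowed else 'blocked'} ({reason})")
```

A guard like this only covers what it is shown. It assumes approved commands are then executed as an argument list without a shell, and it does nothing about token usage, rate limits, or code bloat.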

So leverage what works and keep this list in mind to ensure that a stochastic system doesn’t harm you in your experiments. Good luck.

#ai #coding #development #guidelines #models #softwareDevelopment

Some ideas for what comes next https://www.interconnects.ai/p/summertime-outlook-o3s-novelty-coming #AI #models

Using AI Right Now: A Quick Guide - Ethan Mollick https://www.oneusefulthing.org/p/using-ai-right-now-a-quick-guide (good advice) #AI #models #tips

Gundam Age: Advanced Grade Gafran 1/144 Model Kit *Open/Complete* brought to you by the #PlastiqueBoutique

#Gundam #Bandai #Toys #ToysForSale #Models #ModelKit #Gafran #MobileSuit #Collectables #CollectablesForSale #GundamStyle https://plastiqueboutique.com/product/gundam-age-advanced-grade-gafran-1-144-model-kit-open-complete/?utm_source=mastodon&utm_medium=social&utm_campaign=ReviveOldPost

Viki Odintcova

#VikiOdintcova #Modelos #Models #Chicas #Girls #Mujeres #Women #Morenas #Brunettes #pinups

Viki Odintcova

#VikiOdintcova #Modelos #Models #Chicas #Girls #Mujeres #Women #Morenas #Brunettes #PinUp

https://www.youtube.com/watch?v=9zp4bLC1mzI

Every Sunday, a new inspiration.

What a guy

Fanatic defensiveness of a position is likely a recipe for disaster

The next time you catch yourself getting defensive about something – really defensive, like you’re personally offended that someone would dare question it – maybe pause for a second. Ask yourself: am I defending this because it’s actually good for me, or because I’m scared to imagine alternatives?

Stefano is on to something here. Cognitive flexibility is a requirement for seeing the reality of a situation. The same applies to black-and-white thinking. Being that inflexible suggests that you are unwilling to adapt, which in turn suggests you are primarily operating from a position of fear.

This is not to say that I am against vibe-coding. It has personally helped me get back into developing my own tools. I’ve made 3 Chrome extensions and 7 Alfred workflows that are truly making me excited about development and using my computer again. I think there’s something here that must be refined, built and leveraged into tremendous productivity gains. However, it’s a far cry from a simple utility for myself to production-ready enterprise software.

#adaptability #ai #coding #cognitiveFlexibility #evolution #models #vibeCoding

Extracting memorized pieces of books from open-weight language models

https://arxiv.org/abs/2505.12546

#HackerNews #Extracting #memorized #pieces #of #books #from #open-weight #language #models #languagemodels #AIresearch #bookextraction #openweightmodels #arxiv

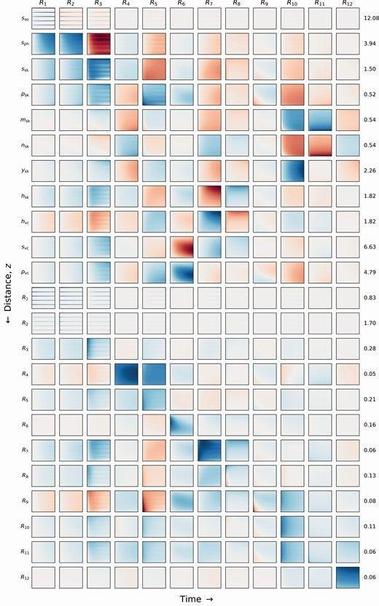

💻 Coupling kinetic #models and advection-diffusion equations. 2. Sensitivity analysis of an advection-diffusion-reaction #model

https://doi.org/10.1093/insilicoplants/diab014 #PlantScience