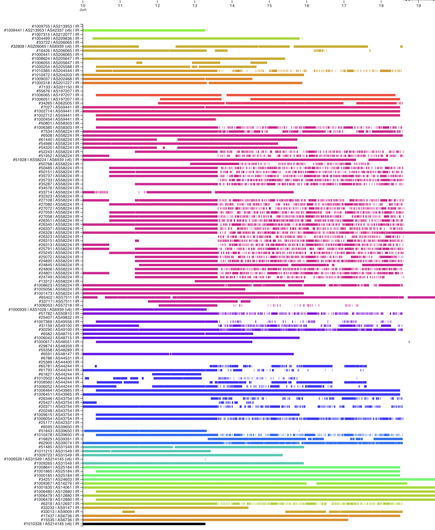

Connectivity of #RIPEAtlas probes in #Iran in the last few days:

* Since 13 June, connectivity has been much more volatile. The shift happened between 14:00 and 15:00 UTC.

* Since 18 June, only 6 probes remain connected. Most of the others disconnected before 13:00 UTC.

#RIPEAtlas

Analyzing FreeBSD’s Package Mirror GeoDNS

https://antranigv.am/posts/2025/06/analyzing-freebsds-package-mirror-geodns/

One of the things that makes FreeBSD an amazing operating system is its package manager, pkg(8). Many people will keep arguing with me that apt or pacman is better, but pkg has some of the best features out there. Don’t even get me started on the number of packages. A friend was telling me, “but how can I use FreeBSD when it has a third of the number of packages that Debian has?” Again, this is a lie. While Debian has 80K packages, a lot of its software is split into separate -doc and -dev packages, whereas on FreeBSD a simple pkg install installs everything that a package needs.

But let’s not talk about how awesome FreeBSD’s pkg is; let’s talk about its issues.

A couple of years ago, I noticed that downloading from the FreeBSD package mirror was veeeeeery slow. It turned out that, based on GeoDNS, Armenia was being directed to the South African package mirror pkg0.jinx.FreeBSD.org by default.

Lucky for me, there was a package to help you figure out which one is fastest for you, named fastest_pkg. I ran it and I learned that the Frankfurt package mirror was the fastest for me.

Initially, I changed the config in FreeBSD.conf as recommended by fastest_pkg, but I’m not a single-server guy: I have dozens of hosts with hundreds of jails.
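For a single machine, pinning pkg to one mirror comes down to a small repository override. Below is a minimal sketch in Python that renders such an override so it can be pushed to many hosts. It assumes the override lives in /usr/local/etc/pkg/repos/FreeBSD.conf, that the `url` and `mirror_type` keys behave as described in pkg.conf(5), and that each mirror serves the same `${ABI}/quarterly` layout as pkg.FreeBSD.org; the function name `render_repo_override` is hypothetical.

```python
# Hypothetical helper: render a pkg(8) repository override that pins the
# "FreeBSD" repo to one mirror host instead of the GeoDNS/SRV default.
# Assumption: the result is written to /usr/local/etc/pkg/repos/FreeBSD.conf
# and uses the "url" and "mirror_type" keys from pkg.conf(5).


def render_repo_override(mirror_host: str, branch: str = "quarterly") -> str:
    """Return FreeBSD.conf override text pointing pkg at a single mirror."""
    if branch not in ("quarterly", "latest"):
        raise ValueError(f"unexpected branch: {branch!r}")
    # ${ABI} is kept literal on purpose; pkg expands it at run time.
    url = f"http://{mirror_host}/${{ABI}}/{branch}"
    return (
        "FreeBSD: {\n"
        f'  url: "{url}",\n'
        '  mirror_type: "none"\n'  # no SRV lookup, use this host directly
        "}\n"
    )


if __name__ == "__main__":
    print(render_repo_override("pkg0.fra.FreeBSD.org"))
```

Pinning like this breaks silently when a mirror is decommissioned, which is one argument for fixing the GeoDNS default instead of configuring every host by hand.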

Solution? I asked the Cluster Admins to set Armenia’s GeoDNS to pkg0.fra.FreeBSD.org.

But servers come and go, and the Frankfurt server got decommissioned a while back. Luckily the project now has a server in… Sweden!

But my download speeds were slow again. Somehow Armenia had defaulted back to South Africa.

Is this an issue for me, or is this an issue for everybody?

(Sidenote: turns out fastest_pkg has been broken for a while, ever since pkg moved to a new package format, so we also made a patch and submitted a PR)

During today’s FreeBSD “Ask the experts: AMA” we used RIPE Atlas to measure the speeds from all around the world, to all package mirror hosts.

All of the measurements can be found here.

So, here are the goals:

- Measure from around the world to all of the package mirror hosts (done).

- Measure from around the world to pkg.FreeBSD.org to get the default GeoDNS host for each area/country/network (in progress, recurring every 6 hours for the next 6 days).

- Find misconfigurations between “fastest” and “default” (TODO).
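The third step can be sketched as a comparison per probe: take the fastest directly measured mirror and flag the probe when the GeoDNS default is slower by more than some slack. This is a minimal sketch, assuming the measurements have already been reduced to average RTTs per probe; the function name, the input shape, and the 20 ms default slack are illustrative assumptions, not part of the actual analysis.

```python
from typing import Dict, List, Tuple


def find_misconfigured(
    direct: Dict[int, Dict[str, float]],
    default: Dict[int, float],
    slack_ms: float = 20.0,
) -> List[Tuple[int, str, float]]:
    """Flag probes whose GeoDNS default is much slower than their best mirror.

    direct:  {probe_id: {mirror_host: avg_rtt_ms}} from pinging each mirror
    default: {probe_id: avg_rtt_ms} from pinging pkg.FreeBSD.org
    Returns (probe_id, best_mirror, extra_ms) tuples, worst first.
    """
    flagged = []
    for prb_id, per_mirror in direct.items():
        if prb_id not in default or not per_mirror:
            continue  # no comparable measurement for this probe
        best_mirror, best_rtt = min(per_mirror.items(), key=lambda kv: kv[1])
        extra = default[prb_id] - best_rtt
        if extra > slack_ms:
            flagged.append((prb_id, best_mirror, round(extra, 1)))
    flagged.sort(key=lambda t: t[2], reverse=True)
    return flagged


if __name__ == "__main__":
    # Probe 22215 uses the averages from the Mombasa measurements;
    # probe 1 is made-up data for a probe that is routed sensibly.
    direct = {22215: {"pkg0.jinx.freebsd.org": 47.49}, 1: {"mirror-a": 25.0}}
    default = {22215: 238.68, 1: 30.0}
    print(find_misconfigured(direct, default))
```

Using a fixed slack rather than a ratio keeps nearby probes with small absolute differences from being flagged; a ratio threshold would be an equally reasonable choice.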

While the big measurement is still running, I used my eyes to look for anything that is GREEN (i.e., a fast connection) in the direct package mirror host measurements but YELLOW/RED (i.e., a slow connection) when connecting to pkg.FreeBSD.org.

Unsurprisingly, we found one! It was in Mombasa, Kenya.

Here’s what it looks like while connecting directly:

```json
{
  "fw": 5080,
  "mver": "2.6.2",
  "lts": 25,
  "dst_name": "pkg0.jinx.freebsd.org",
  "ttr": 10639.830509,
  "af": 4,
  "dst_addr": "196.10.53.168",
  "src_addr": "160.119.216.205",
  "proto": "ICMP",
  "ttl": 59,
  "size": 1000,
  "result": [
    { "rtt": 47.434297 },
    { "rtt": 47.39957 },
    { "rtt": 47.412379 },
    { "rtt": 47.369089 },
    { "rtt": 47.851894 }
  ],
  "dup": 0,
  "rcvd": 5,
  "sent": 5,
  "min": 47.369089,
  "max": 47.851894,
  "avg": 47.493445799999996,
  "msm_id": 110487154,
  "prb_id": 22215,
  "timestamp": 1750269643,
  "msm_name": "Ping",
  "from": "160.119.216.205",
  "type": "ping",
  "group_id": 110487154,
  "step": null,
  "stored_timestamp": 1750269644
}
```

And here it is via GeoDNS’s defaults:

```json
{
  "fw": 5080,
  "mver": "2.6.2",
  "lts": 10,
  "dst_name": "pkg.freebsd.org",
  "ttr": 10465.87807,
  "af": 4,
  "dst_addr": "173.228.147.98",
  "src_addr": "160.119.216.205",
  "proto": "ICMP",
  "ttl": 52,
  "size": 1000,
  "result": [
    { "rtt": 240.19025 },
    { "rtt": 244.967071 },
    { "rtt": 236.466414 },
    { "rtt": 238.189775 },
    { "rtt": 233.570938 }
  ],
  "dup": 0,
  "rcvd": 5,
  "sent": 5,
  "min": 233.570938,
  "max": 244.967071,
  "avg": 238.6768896,
  "msm_id": 110489920,
  "prb_id": 22215,
  "timestamp": 1750270943,
  "msm_name": "Ping",
  "from": "160.119.216.205",
  "type": "ping",
  "group_id": 110489920,
  "step": null,
  "stored_timestamp": 1750270944
}
```

Clearly, it’s set to pkg0.chi.freebsd.org (173.228.147.98) by default. That’s Chicago, halfway around the world. It took the probe 238 ms on average to reach it, while the same probe took only 47 ms on average when connecting to pkg0.jinx.freebsd.org directly.
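The comparison above can be reproduced from the raw results. A minimal sketch, assuming each result is one RIPE Atlas ping JSON object like the two shown; only the fields the function reads are kept in the example strings, and `summarize_ping` is a hypothetical name. Lost packets in RIPE Atlas ping results appear as entries without an `rtt` key, so they are skipped when averaging.

```python
import json
from statistics import mean


def summarize_ping(raw: str) -> dict:
    """Summarize one RIPE Atlas ping result object."""
    r = json.loads(raw)
    # Entries without an "rtt" key are timeouts/errors, so skip them.
    rtts = [x["rtt"] for x in r["result"] if "rtt" in x]
    return {
        "dst_name": r["dst_name"],
        "dst_addr": r["dst_addr"],
        "avg_ms": mean(rtts) if rtts else None,
        "loss": 1 - r["rcvd"] / r["sent"] if r["sent"] else None,
    }


direct = summarize_ping(
    '{"dst_name": "pkg0.jinx.freebsd.org", "dst_addr": "196.10.53.168",'
    ' "rcvd": 5, "sent": 5, "result": [{"rtt": 47.434297}, {"rtt": 47.39957},'
    ' {"rtt": 47.412379}, {"rtt": 47.369089}, {"rtt": 47.851894}]}'
)
geodns = summarize_ping(
    '{"dst_name": "pkg.freebsd.org", "dst_addr": "173.228.147.98",'
    ' "rcvd": 5, "sent": 5, "result": [{"rtt": 240.19025}, {"rtt": 244.967071},'
    ' {"rtt": 236.466414}, {"rtt": 238.189775}, {"rtt": 233.570938}]}'
)
print(f"{geodns['avg_ms'] / direct['avg_ms']:.1f}x slower via GeoDNS")  # 5.0x
```

Recomputing the mean from `result` rather than trusting the `avg` field makes it easy to handle partial loss consistently across many probes.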

Clearly, I’m not the only one.

Hopefully after collecting data for 6 days, we can start analyzing and improving the GeoDNS setup that we have.

A good suggestion from crest was to have a pkg plugin that does the work of fastest_pkg on the fly. We’ll try that one day.
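As a rough illustration of what "on the fly" selection could look like, here is a minimal sketch that times a TCP connect to each candidate mirror and keeps the fastest. Note that this swaps in connect latency as the metric, whereas fastest_pkg measures download speed; the candidate host list, port 80, and the function names are assumptions for illustration only, not an existing pkg plugin API.

```python
import socket
import time
from typing import Callable, Iterable, Optional, Tuple


def tcp_connect_ms(host: str, port: int = 80, timeout: float = 2.0) -> Optional[float]:
    """Time one TCP handshake to host:port; None on failure or timeout."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            pass
    except OSError:  # covers DNS errors, refusals and timeouts
        return None
    return (time.perf_counter() - start) * 1000.0


def pick_fastest(
    hosts: Iterable[str],
    probe: Callable[[str], Optional[float]] = tcp_connect_ms,
    tries: int = 3,
) -> Optional[Tuple[str, float]]:
    """Return (host, best_ms) for the lowest-latency reachable host."""
    best = None
    for host in hosts:
        samples = [s for s in (probe(host) for _ in range(tries)) if s is not None]
        if not samples:
            continue  # unreachable, skip it
        score = min(samples)  # min is robust to one-off slow handshakes
        if best is None or score < best[1]:
            best = (host, score)
    return best
```

The probe function is injectable so the selection logic can be tested without network access, or swapped for a throughput test closer to what fastest_pkg does.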

If anyone is interested in helping me out, feel free to contact me over email, IRC or Discord.

That’s all folks…

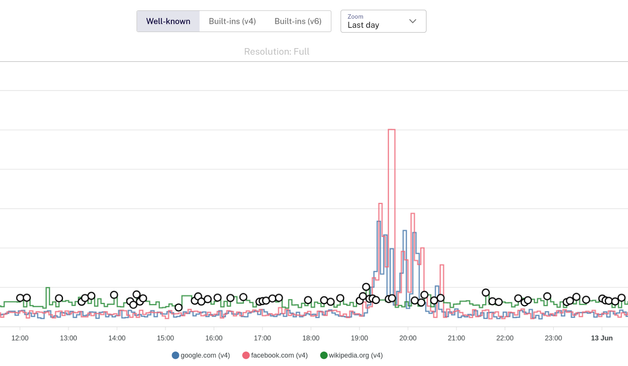

#Internetoutage #ripeatlas @ripencc

As a #ripeatlas host, I can clearly see the effect of yesterday's outage on one of my 4 probes. The remaining probes show no obvious sign of it.

Does this speak for or against this particular #autonomoussystem?

With tech like immersive reality & autonomous systems pushing latency limits, predicting cloud performance is more critical than ever.

Researchers used #RIPEAtlas to ask:

📍Does location matter?

☁️ Does your cloud provider?

📉 Can we forecast latency?

Read more: https://labs.ripe.net/author/rita-ingabire/forecasting-cloud-performance-with-ripe-atlas/

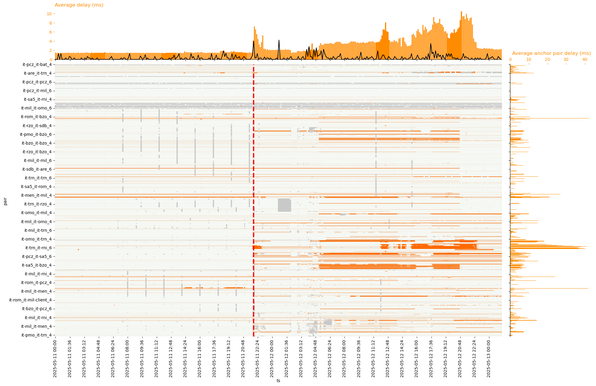

I heard rumors about an IXP issue in Italy on May 11 at night.

The #RIPEAtlas anchor mesh latencies did show some paths with increased latency starting a bit before 22:00 UTC (midnight in #Italy).

Next week is #RIPE90 week! I will attend Wednesday through Friday, so hit me up if you want to talk about Internet outages, Internet resiliency, Internet measurement (#RIPEAtlas, #RIS), or Internet live streaming of lettuces.

For netops from #Spain and #Portugal: I'm really curious about your stories on how you dealt with the grid outage on 28 April. I would love to see how these stories connect with the data we collected about the event.

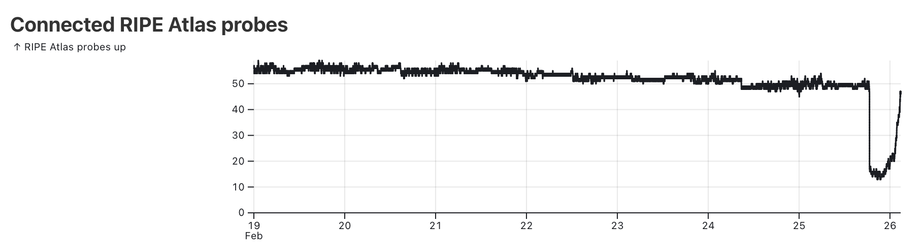

The number of connected #RIPEAtlas probes in #Chile showed a steep drop after a massive power outage ( https://www.reuters.com/world/americas/power-outage-hits-vast-swaths-chile-largest-copper-mine-santiago-streets-2025-02-25/ )

Not a big fan of Pandas dataframe syntax? Prefer SQL? Suffer no more! New on #RIPELabs, Giovane Moura and Andra Ionescu dig into #RIPEAtlas data to show how fans of SQL can use DuckDB as an alternative to Pandas for data analysis: https://labs.ripe.net/author/giovane_moura/easy-ripe-atlas-data-analysis-with-sql/
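The article uses DuckDB; to keep this dependency-free, the sketch below swaps in Python's built-in sqlite3 to show the same idea of answering a question about RIPE Atlas ping results with SQL instead of dataframe code. It assumes the results have already been parsed into dicts with `prb_id`, `dst_name` and `avg` fields, and it drops results where `avg` is -1, which RIPE Atlas uses when no replies were received. The function name is hypothetical.

```python
import sqlite3
from typing import Iterable, List, Tuple


def avg_rtt_by_dst(results: Iterable[dict]) -> List[Tuple[str, int, float]]:
    """Per destination: (dst_name, number of results, mean of avg RTT), fastest first."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE ping (prb_id INTEGER, dst_name TEXT, avg_rtt REAL)")
    con.executemany(
        "INSERT INTO ping VALUES (?, ?, ?)",
        # avg == -1 means no replies, so exclude those rows
        [(r["prb_id"], r["dst_name"], r["avg"]) for r in results if r["avg"] >= 0],
    )
    rows = con.execute(
        "SELECT dst_name, COUNT(*), AVG(avg_rtt) FROM ping "
        "GROUP BY dst_name ORDER BY AVG(avg_rtt)"
    ).fetchall()
    con.close()
    return rows
```

With DuckDB, the same SELECT could typically be run directly over the JSON files, which is the convenience the article highlights.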

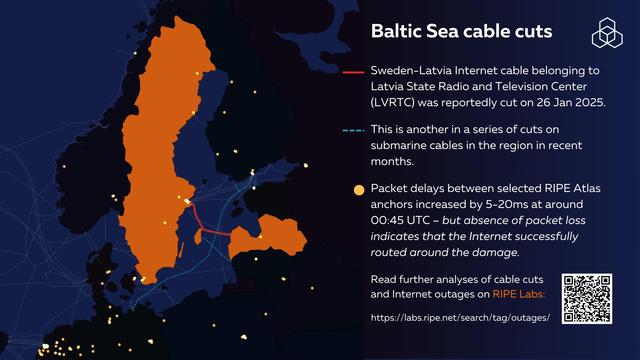

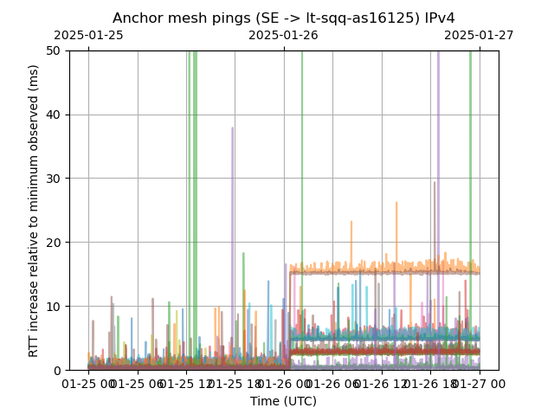

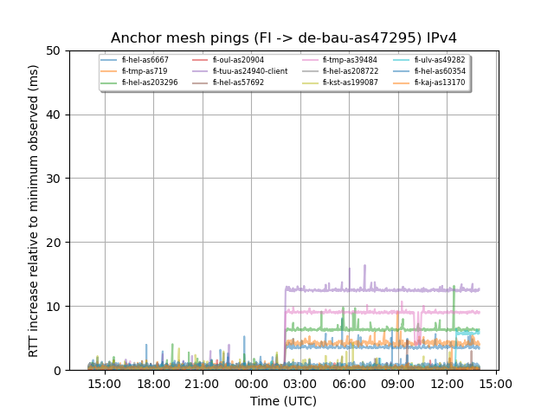

The Sweden-Latvia Internet cable was reportedly cut on 26 January. @meileaben reports that packet delays between selected #RIPEAtlas anchors increased by 5-20 ms at around 00:45 UTC.

However, no packet loss was observed, suggesting that the Internet successfully routed around the damage.

Interested? Read a deep-dive into a previous cable cut in the Baltic Sea by our researcher Emile Aben: https://labs.ripe.net/author/emileaben/a-deep-dive-into-the-baltic-sea-cable-cuts/

A quick look at #RIPEAtlas mesh pings on 26 Jan shows that some (not all!) #Finland-#Lithuania anchor pairs saw:

* A noticeable latency increase (5-20 ms extra) around 00:45 UTC, in line with the reported cut of the LVRTC cable around that time.

* No noticeable changes in packet loss.
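Statements like "5-20 ms extra, no change in packet loss" come from comparing an anchor pair's behaviour just before and just after an event. A minimal sketch for one pair, assuming the samples have been simplified to (Unix timestamp, RTT in ms or None for a lost packet) rather than the raw RIPE Atlas result format; the one-hour window and the function names are illustrative assumptions.

```python
from statistics import median
from typing import List, Optional, Tuple

Sample = Tuple[int, Optional[float]]  # (unix timestamp, rtt_ms or None if lost)


def _window_stats(samples: List[Sample]):
    """Median RTT and loss fraction for a window of samples."""
    rtts = [rtt for _, rtt in samples if rtt is not None]
    med = median(rtts) if rtts else None
    loss = 1 - len(rtts) / len(samples) if samples else None
    return med, loss


def step_change(samples: List[Sample], event_ts: int, window_s: int = 3600):
    """Change in median RTT and in loss fraction across event_ts."""
    before = [s for s in samples if event_ts - window_s <= s[0] < event_ts]
    after = [s for s in samples if event_ts <= s[0] < event_ts + window_s]
    med_b, loss_b = _window_stats(before)
    med_a, loss_a = _window_stats(after)
    if None in (med_b, med_a, loss_b, loss_a):
        return None  # not enough data on one side of the event
    return {"delta_rtt_ms": med_a - med_b, "delta_loss": loss_a - loss_b}
```

The median is used so that a single delayed packet does not look like a path change; a pair that shows a positive `delta_rtt_ms` with `delta_loss` near zero matches the "routed around the damage" pattern.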

Looks like another one where the Internet managed to route around damage.

#BalticSea #CableCut @ripencc

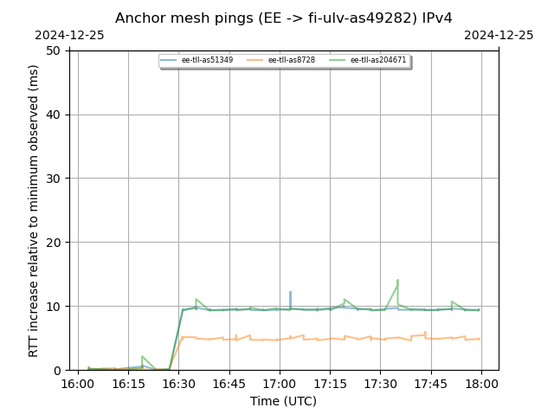

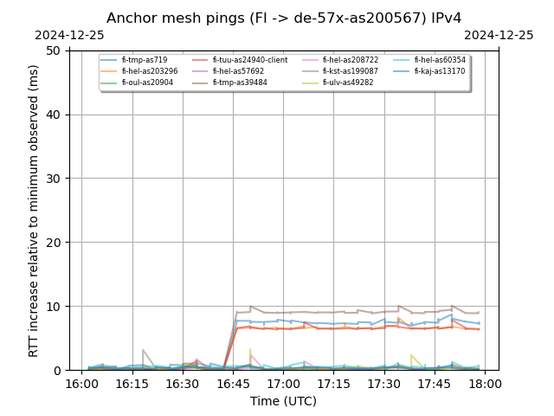

A quick look at #RIPEAtlas mesh pings on 25 December shows that #Finland-#Estonia anchor pairs saw a noticeable latency increase around 16:30 UTC, while #Finland-#Germany anchor pairs saw a noticeable increase around 16:45 UTC.

#BalticSea #CableCut @ripencc

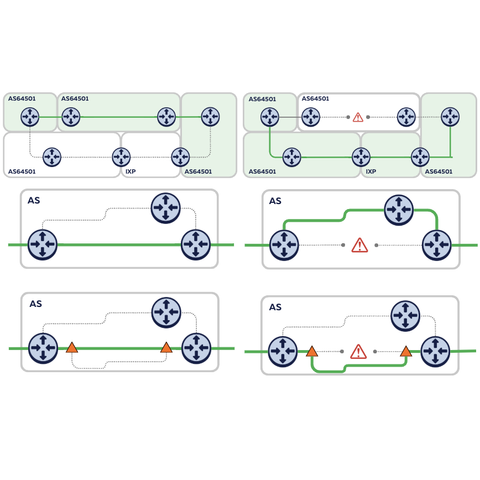

"In November, we shared our preliminary analysis of the analysis of the Baltic Sea cable cuts. Digging into the results of ping mesh measurements routinely performed by RIPE Atlas anchors, we were able to provide an initial sketch of how this affected connectivity between the countries at both ends of both cables."

Emile Aben, Jim Cowie and Alun Davies delve further into the Baltic Sea cable cuts on #RIPELabs #RIPEAtlas

https://labs.ripe.net/author/emileaben/a-deep-dive-into-the-baltic-sea-cable-cuts/

We did a deep dive into the Baltic Sea cable cuts, looking at the different levels of resiliency of the Internet in action. I hope it will provide for interesting Christmas holiday reading 🙃

https://labs.ripe.net/author/emileaben/a-deep-dive-into-the-baltic-sea-cable-cuts/

In the news: Cable cuts this morning between #Sweden and #Finland ( https://apnews.com/article/sweden-finland-damaged-cable-9b6092fd670383a4de28f05c33405768 ), so I looked into what #RIPEatlas sees. We do see a noticeable latency increase for a few paths between anchors in FI and SE at 03:15 UTC (roughly 5% of paths), and some interesting changes in packet loss.

Does the timing of state changes in these graphs (03:15 for the latency increase, and also 06:00 and 11:00 for packet loss, all UTC) coincide with the timings of the cable cuts?
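Answering a question like this starts with estimating when each series actually changed level. A minimal sketch, assuming a single step change in a regularly sampled RTT or loss series: it tries every split point and keeps the one that minimises the squared error of a two-mean fit. This is a basic least-squares change-point method chosen for illustration, not necessarily what was used for these graphs, and `best_split` is a hypothetical name.

```python
from typing import Sequence


def best_split(ts: Sequence[int], values: Sequence[float]) -> int:
    """Return the timestamp at which a one-step change in level best fits."""
    if len(values) < 2 or len(ts) != len(values):
        raise ValueError("need at least two aligned samples")
    best_ts, best_sse = None, None
    for k in range(1, len(values)):  # new level starts at index k
        left, right = values[:k], values[k:]
        mean_l = sum(left) / len(left)
        mean_r = sum(right) / len(right)
        sse = sum((v - mean_l) ** 2 for v in left) + sum((v - mean_r) ** 2 for v in right)
        if best_sse is None or sse < best_sse:
            best_ts, best_sse = ts[k], sse
    return best_ts


if __name__ == "__main__":
    # Made-up 15-minute RTT samples with a jump between the 3rd and 4th sample.
    print(best_split([0, 900, 1800, 2700, 3600, 4500], [10, 10, 11, 25, 26, 25]))
```

The estimated timestamps per anchor pair can then be lined up against the reported cut times; this simple version assumes exactly one change per series, so series with several state changes (as in the loss graphs) would need to be split first.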

I have network access again, but now I need to reset everything. And first up, I'm facing errors when trying to get my #ripeatlas probe back: https://docs.turris.cz/basics/apps/atlas/

RIPE Labs investigated the #submarinecable cuts in the #balticsea in a blog post, "Does the Internet Route Around Damage? - Baltic Sea Cable Cuts". They infer evidence of disruptions from #ripeatlas measurements in which 20-30% of the paths exhibit notably increased latency. A short, but nevertheless interesting read.

The latest news on the cable cut is somewhat concerning, as hostile actions appear to be moving from the "digital only" realm to the physical infrastructure of the digital space.

tl;dr: So the Internet really routed around the Baltic Sea cable cuts (as we would expect). A bit more latency, but no more packet loss. #RIPEatlas

The double undersea cable cuts in the Baltic Sea have been in the news over the weekend. But what was the impact on the Internet?

Emile Aben (@meileaben) and Alun Davies take a look at what #RIPEAtlas can tell us about this incident:

https://labs.ripe.net/author/emileaben/does-the-internet-route-around-damage-baltic-sea-cable-cuts/

A preliminary analysis of effects of the Baltic Sea cable cuts, through the lens of #RIPEAtlas . Does the Internet route around damage?

https://labs.ripe.net/author/emileaben/does-the-internet-route-around-damage-baltic-sea-cable-cuts/