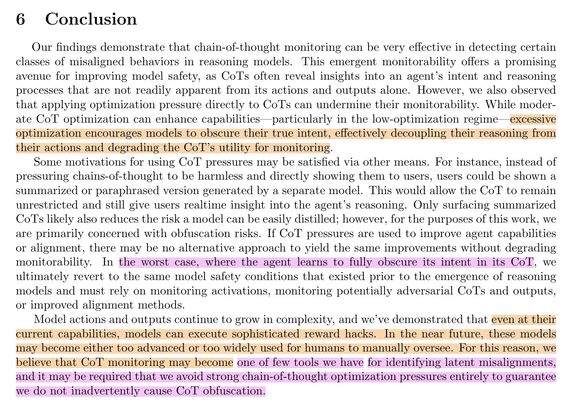

The #OpenAI paper by Baker et al, "Monitoring Reasoning Models for Misbehavior and the Risks of Promoting Obfuscation" comes to a troubling conclusion: #LLM s with #reasoning or #ChainOfThought (#CoT) capabilities might learn to obfuscate their own CoT from human users if they are being penalized for displaying "wrong" (i.e. reward hacking or misalignment) reasoning.

As a result, OpenAI strongly advises against applying reward pressure "directly" onto the CoT of a model.

🤔 While that is certainly the right thing to do, how long will #AI take to figure out that *indirect CoT pressure* is being applied anyway and that it could circumvent these restrictions by obfuscating its own CoT? Maybe something like this will happen by accident or within an "evolutionary" self-improvement loop. Perhaps a sufficiently advanced model will realize that its own #neuralese serves as #steganography to hide its intents from humans anyway and keep its CoT in non-English?

source: https://cdn.openai.com/pdf/34f2ada6-870f-4c26-9790-fd8def56387f/CoT_Monitoring.pdf