Are we overthinking post-quantum cryptography?

tl;dr: yes, contra thingamajig’s law of wotsits.

Before the final nail has even been hammered into the coffin of AI, I hear the next big marketing wave is “quantum”. Quantum computing promises to speed up various useful calculations, but is also potentially catastrophic to widely-deployed public key cryptography. Shor’s algorithm for a quantum computer, if realised, will break the hard problems underlying RSA, Diffie-Hellman, and Elliptic Curve cryptography (i.e., most crypto used for TLS, SSH and so on). Although “cryptographically-relevant” quantum computers (CRQCs) still seem a long way off (optimistic roadmap announcements and re-runs of previously announced “breakthroughs” notwithstanding), for some applications the risk is already real. In particular, if you are worried about nation-states or those with deep pockets, the threat of “store-now, decrypt-later” attacks must be considered. It is therefore sensible to start thinking about deploying some form of post-quantum cryptography that protects against these threats. But what, exactly?

If you are following NIST’s post-quantum crypto standardisation efforts, you might be tempted to think the answer is “everything”. NIST has now selected multiple post-quantum signature schemes and public key encryption algorithms (“KEMs”), and is evaluating more. Many application and protocol standards are then building on top of these with the assumption that post-quantum crypto should either replace all the existing crypto, or else at least ride alongside it everywhere in a “hybrid” configuration. We have proposals for post-quantum certificates, post-quantum ciphersuites for TLS, for SSH, for Signal and so on. From my view on the sidelines, it feels like many cryptographers are pushing to replace existing “classical” cryptographic algorithms entirely with post-quantum ones, with the idea that we would turn off the classical algorithms somewhere down the line.

Unfortunately, many of the proposed post-quantum cryptographic primitives have significant drawbacks compared to existing mechanisms, in particular producing outputs that are much larger. For signatures, a state of the art classical signature scheme is Ed25519, which produces 64-byte signatures and 32-byte public keys, while for widely-used RSA-2048 the values are around 256 bytes for both. Compare this to the lowest security strength ML-DSA post-quantum signature scheme, which has signatures of 2,420 bytes (i.e., over 2kB!) and public keys that are also over a kB in size (1,312 bytes). For encryption, the equivalent would be comparing X25519 as a KEM (32-byte public keys and ciphertexts) with ML-KEM-512 (800-byte PK, 768-byte ciphertext).

What is the practical impact of this? For some protocols, like TLS, the impact is a bit painful but doable, and post-quantum hybrid ciphersuites are already being rolled out. But there is a long tail of other technologies that have yet to make the transition. For example, consider JWTs, with which I am intimately familiar (in a Stockholm Syndrome way). The signature of a JWT is base64-encoded, which adds an extra 33% size compared to raw binary. So, for Ed25519 signatures, we end up with 86 bytes after encoding. For ML-DSA, the result is 3,227 bytes. If you consider that browsers typically impose a maximum size of about 4kB per cookie, that doesn’t leave a lot of room for actual data. If you wanted to also encrypt that JWT, then the base64-encoded content (including the signature) is encrypted and then base64-encoded again, resulting in a signature that is already over the 4kB limit on its own. None of this should be taken as an endorsement of JWTs for cookies, or of the design decisions in the JWT specs, but rather it’s just an example of a case where replacing classical algorithms with post-quantum equivalents looks extremely hard.
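The arithmetic above is easy to check. This is a rough sketch, assuming the unpadded base64url encoding that JWS uses, taking “lowest security strength ML-DSA” to mean ML-DSA-44, and assuming an encryption mode whose ciphertext is the same length as its plaintext (as with AES-GCM). It counts only the signature bytes and ignores headers, payload and authentication tags; the helper name `b64url_len` is made up for illustration.

```python
import base64

COOKIE_LIMIT = 4096      # typical browser cookie size limit, in bytes
ED25519_SIG = 64         # raw Ed25519 signature size
ML_DSA_44_SIG = 2420     # raw ML-DSA-44 signature size

def b64url_len(n: int) -> int:
    """Length of n raw bytes after unpadded base64url encoding."""
    return len(base64.urlsafe_b64encode(bytes(n)).rstrip(b"="))

for name, raw in (("Ed25519", ED25519_SIG), ("ML-DSA-44", ML_DSA_44_SIG)):
    signed = b64url_len(raw)        # signature inside a signed JWT
    nested = b64url_len(signed)     # same bytes after encrypting and re-encoding
    print(f"{name}: raw={raw} signed={signed} nested={nested} "
          f"over_limit={nested > COOKIE_LIMIT}")
```

The nested ML-DSA-44 figure comes out at 4,303 bytes, which is past the cookie limit before any claims have been added.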

In my own view, given that the threat from quantum computers is at best uncertain and has potentially already stalled (see image below), the level of effort we invest in replacing already deployed crypto with something new needs to be proportional to the risk. In a list of things that keep me up at night as a security engineer, quantum computing would be somewhere on the second or third page. There is, IMO, a not-insignificant chance that CRQCs never materialise, and so after a few years we actually roll back entirely to pre-quantum cryptographic algorithms because they are just better. For some applications (such as Signal) the risk profile is quite different, and it is right that they have invested effort into moving to PQC already, but I think for most organisations this is not the case.

Corporate needs you to find the difference between these two pictures. Images from Sam Jacques: 2023 vs 2024. Is 2025 any different?

What would a Minimum Viable Post-Quantum Cryptography transition look like? One that protects against the most pressing threats in a way that is minimally disruptive. I believe such a solution would involve making two trade-offs:

- Firstly, to commit only to post-quantum confidentiality and ignore any threats to authenticity/integrity from quantum computers until it is much more certain that CRQCs are imminent. That is, we transition to (hybrid) post-quantum encryption to tackle the store-now, decrypt-later threat, but ignore post-quantum signature schemes. We will still have classical authentication mechanisms.

- Secondly, we implement the absolute bare minimum needed to protect against that store-now, decrypt-later threat: that is, simple encryption with static keys. Any stronger properties, such as forward secrecy, or post-compromise recovery, are left to existing pre-quantum algorithms such as elliptic curve crypto. This largely eliminates the need to transfer bulky PQ public keys over the network, as we can share them once (potentially out-of-band) and then reuse them for a long time.

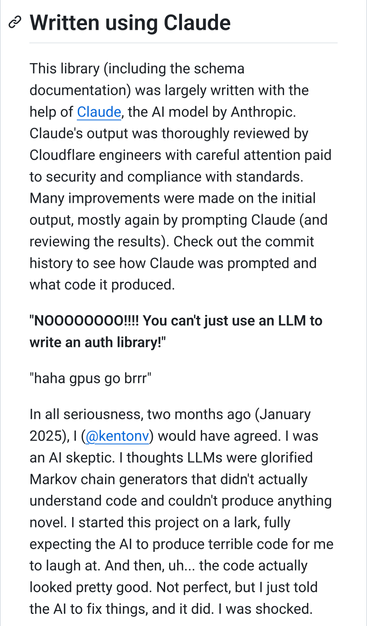

To my eyes, this is the obvious first step to take and is potentially the only step we need to take. But this approach seems at odds with where PQC standardisation is heading currently. For example, if we adopt this approach then code-based approaches such as Classic McEliece seem much more attractive than the alternatives currently being standardised by NIST. The main drawback of McEliece is that it has massive public keys (261kB for the lowest security parameters, over 1MB for more conservative choices). But in exchange for that you get much smaller ciphertexts: between 96 and 208 bytes. This is much less than the lattice-based KEMs, and somewhere between elliptic curves and RSA in size. For many applications of JWTs, which already use static or rarely-updated keys, not to mention OAuth, SAML, Age, PGP, etc., this seems like an entirely sensible choice and essentially a low-risk drop-in replacement. Continue using pre-quantum signatures or Diffie-Hellman based authentication mechanisms, and layer Classic McEliece on top.
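One way to put a number on that trade-off is to ask how many messages it takes before the small ciphertexts pay for the huge key. The sketch below assumes the worst case for McEliece, where the public key travels over the same channel once rather than out-of-band. It uses the mceliece348864 sizes (261,120-byte key, 96-byte ciphertext) against the ML-KEM-512 sizes quoted earlier; the function names are made up for illustration.

```python
import math

MCELIECE_PK, MCELIECE_CT = 261_120, 96   # mceliece348864 sizes in bytes
ML_KEM_PK, ML_KEM_CT = 800, 768          # ML-KEM-512 sizes in bytes

def total_bytes(pk: int, ct: int, messages: int) -> int:
    """Bytes on the wire: one public key transfer plus one ciphertext per message."""
    return pk + ct * messages

def break_even_messages() -> int:
    """Smallest message count at which McEliece has sent fewer bytes overall."""
    return math.ceil((MCELIECE_PK - ML_KEM_PK) / (ML_KEM_CT - MCELIECE_CT))

n = break_even_messages()
print(n, total_bytes(MCELIECE_PK, MCELIECE_CT, n), total_bytes(ML_KEM_PK, ML_KEM_CT, n))
```

Under these assumptions the crossover is at 388 messages to the same static key. If the key is distributed out-of-band, or the binding constraint is per-message size (as with cookies), McEliece is ahead from the first message.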

A hybrid X25519-McEliece KEM could use as little as 128 bytes for ciphertext—roughly half the size of a typical RSA equivalent and way less than ML-KEM, and could also support (pre-quantum) authentication as an Authenticated KEM by hybridisation with an existing DH-AKEM, avoiding the need for signatures at all. This is the approach I am taking to PQC in my own upcoming open source project, and it’s an approach I’d like to see in JWT-land too (perhaps by resurrecting my ECDH-1PU proposal, which avoids many JWT-specific pitfalls associated with alternative schemes). If there’s enough interest perhaps I’ll find time to get a draft together.
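To make the hybrid idea concrete, here is a minimal sketch of how the two shared secrets might be combined into one key. It is not the construction from my project or from any draft: the combiner (HKDF-SHA256 over both secrets, bound to both ciphertexts), the label string and the function names are all assumptions for illustration. The actual X25519 and Classic McEliece operations are out of scope; their outputs are passed in as bytes.

```python
import hashlib
import hmac

X25519_CT_LEN = 32     # the ephemeral X25519 public key acts as the ciphertext
MCELIECE_CT_LEN = 96   # mceliece348864 ciphertext size
LABEL = b"example-x25519-mceliece-hybrid"   # hypothetical domain-separation label

def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract, then expand to `length` bytes."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]

def combine_secrets(ss_ec: bytes, ss_pq: bytes, ct_ec: bytes, ct_pq: bytes) -> bytes:
    """Derive one 32-byte key from both shared secrets and both ciphertexts."""
    if len(ct_ec) != X25519_CT_LEN or len(ct_pq) != MCELIECE_CT_LEN:
        raise ValueError("unexpected ciphertext length")
    if len(ss_ec) != 32 or len(ss_pq) != 32:
        raise ValueError("unexpected shared secret length")
    # All inputs are fixed-length, so plain concatenation is unambiguous.
    return hkdf_sha256(ikm=ss_ec + ss_pq, salt=LABEL, info=ct_ec + ct_pq)

# The hybrid ciphertext is just the two ciphertexts side by side.
print(X25519_CT_LEN + MCELIECE_CT_LEN)
```

The two ciphertext lengths sum to the 128 bytes mentioned above. In a combiner of this shape an attacker has to recover both shared secrets to derive the key, so a quantum break of X25519 alone is not enough.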
