⚠️ Most breaches don’t require genius, just opportunity.

Attackers don’t need zero-days. They exploit what’s already exposed:

• Default creds still active

• Config drift no one monitors

• Cloud misconfigs after updates

• APIs exposed by accident

At HACKTIVATE LABS, we’re building autonomous agents that close those gaps 24/7.

The Reality:

Most orgs don’t need more security staff.

They need faster decision loops, executed by agents that never sleep.

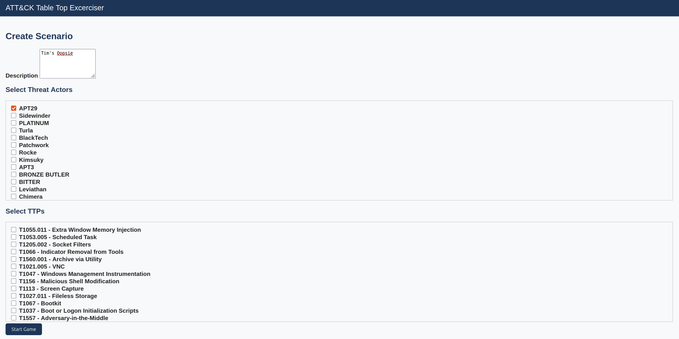

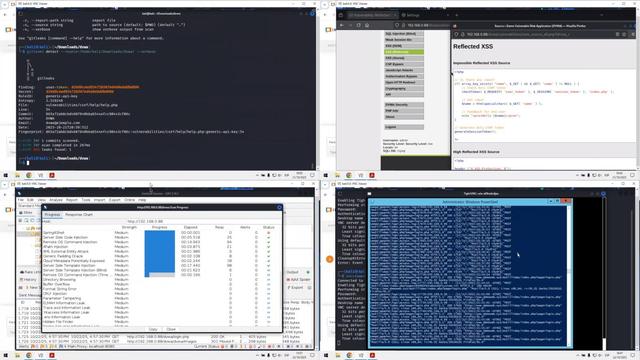

What we’re testing now:

🧠 AI agents that shape the threat surface in real time

🛰️ Pre-attack recon using live intel feeds

⚔️ Automated red-team prep using CVE correlation

🔄 Defense loops that execute without human approval

The goal isn’t alerts.

The goal is autonomous containment.

💬 If you’re building in #AIsec, #Cybersecurity, or #DevSecOps, let’s align.

Tag your team or @mention someone who should see this.

#Automation #RedTeam #AISecurity #SOC #NetOps #SecurityFuture #AIagents