Compendium of Nancy Leveson: STAMP, STPA, CAST and Systems Thinking

Although I don’t often mention or post about Leveson’s work, she’s probably been the most influential thinker on my approach after Barry Turner.

So here is a mini-compendium covering some of Leveson’s work.

Feel free to shout a coffee if you’d like to support the growth of my site:

https://buymeacoffee.com/benhutchinson

https://direct.mit.edu/books/oa-monograph/2908/Engineering-a-Safer-WorldSystems-Thinking-Applied

https://dspace.mit.edu/bitstream/handle/1721.1/102747/esd-wp-2003-01.19.pdf?sequence=1&isAllowed=y

https://escholarship.org/content/qt5dr206s3/qt5dr206s3_noSplash_4453efa62859a16d187fa5e66d414ac2.pdf

https://escholarship.org/content/qt8dg859ns/qt8dg859ns_noSplash_e67040b78c1ff72e51b682bb23d8628a.pdf

https://doi.org/10.1177/0170840608101478

https://doi.org/10.1145/7474.7528

http://therm.ward.bay.wiki.org/assets/pages/documents-archived/safety-3.pdf

https://dspace.mit.edu/bitstream/handle/1721.1/108601/Leveson_A%20systems%20approach.pdf

http://sunnyday.mit.edu/papers/Rasmussen-Legacy.pdf

https://www.tandfonline.com/doi/pdf/10.1080/00140139.2015.1015623

http://sunnyday.mit.edu/papers/issc03-stpa.doc

https://doi.org/10.1016/j.ssci.2018.07.028

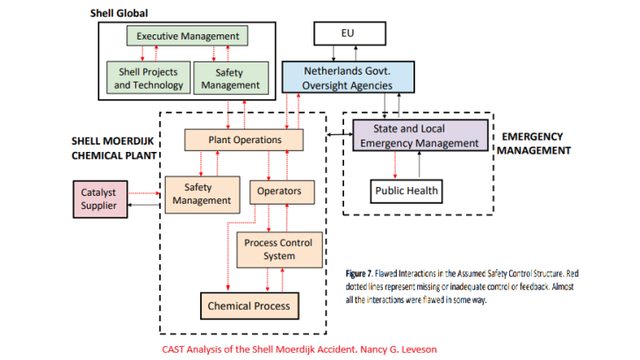

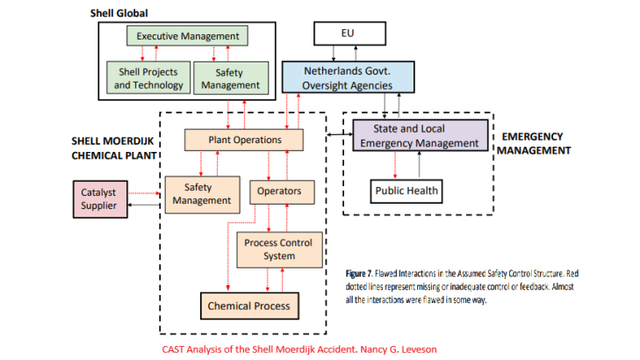

http://sunnyday.mit.edu/shell-moerdijk-cast.pdf

http://sunnyday.mit.edu/CAST-Handbook.pdf

https://psas.scripts.mit.edu/home/get_file.php?name=STPA_Handbook.pdf

https://psas.scripts.mit.edu/home/wp-content/uploads/2020/07/JThomas-STPA-Introduction.pdf

https://cris.vtt.fi/ws/portalfiles/portal/98296189/Complete_with_DocuSign_2024-1-2_STPA_guide_F.pdf

http://sunnyday.mit.edu/UPS-CAST-Final.pdf

https://doi.org/10.1016/j.trip.2023.100912

https://dspace.mit.edu/bitstream/handle/1721.1/107502/974705860-MIT.pdf?sequence=1

https://proceedings.systemdynamics.org/2007/proceed/papers/DULAC552.pdf

http://sunnyday.mit.edu/nasa-class/jsr-final.pdf

https://dl.acm.org/doi/pdf/10.1145/2556938

https://dspace.mit.edu/bitstream/handle/1721.1/102833/esd-wp-2011-13.pdf?sequence=1&isAllowed=y

https://academic.oup.com/jamia/article-abstract/15/3/272/727503?redirectedFrom=PDF

https://www.academia.edu/29657886/The_systems_approach_to_medicine_controversy_and_misconceptions

https://dl.acm.org/doi/pdf/10.1145/3376127

https://www.sciencedirect.com/science/article/pii/S0022522316000702

http://sunnyday.mit.edu/caib/issc-bl-2.pdf

http://sunnyday.mit.edu/papers/ARP4761-Comparison-Report-final-1.pdf

https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=8102762

https://www.tandfonline.com/doi/pdf/10.1080/00140139.2015.1011241

https://onlinelibrary.wiley.com/doi/pdf/10.1260/2040-2295.3.3.391

http://sunnyday.mit.edu/papers/incose-04.pdf

https://core.ac.uk/download/pdf/78070242.pdf

https://dspace.mit.edu/bitstream/handle/1721.1/102767/esd-wp-2004-08.pdf?sequence=1&isAllowed=y

https://www.tandfonline.com/doi/pdf/10.1080/00140139.2014.1001445

https://ntrs.nasa.gov/api/citations/20230017753/downloads/Kopeikin_AIAA_UnsafeCollabControl_v5.pdf

http://sunnyday.mit.edu/accidents/space2001-version2.pdf

https://dspace.mit.edu/bitstream/handle/1721.1/90801/891583966-MIT.pdf?sequence=2&isAllowed=y

http://sunnyday.mit.edu/Bow-tie-final.pdf

https://cs.emis.de/LNI/Proceedings/Proceedings232/597.pdf

https://a3e.com/wp-content/uploads/2021/03/Risk-Matrix.pdf

https://journals.sagepub.com/doi/pdf/10.1177/21695067231192457

https://jsystemsafety.com/index.php/jss/article/download/44/41

http://sunnyday.mit.edu/compliance-with-882.pdf

https://meridian.allenpress.com/bit/article-pdf/47/2/115/1488089/0899-8205-47_2_115.pdf
