We are looking to hire a Senior Scientist or #Postdoc to study brains, biophysics, and behaviour using #Drosophila as a model. Please spread the word!

The NeuronBridge website, for matching #ElectronMicroscopy and #LightMicroscopy data for #Drosophila #Neuroscience research, now includes EM data from FlyWire. Getting it working was a great effort by @neomorphic, Cristian Goina, Hideo Otsuna, Robert Svirskas, and @konrad_rokicki. Here is a screen capture of looking up a neuron on FlyWire Codex, finding its match in NeuronBridge, then viewing the match in 3D with volume rendering in the browser.

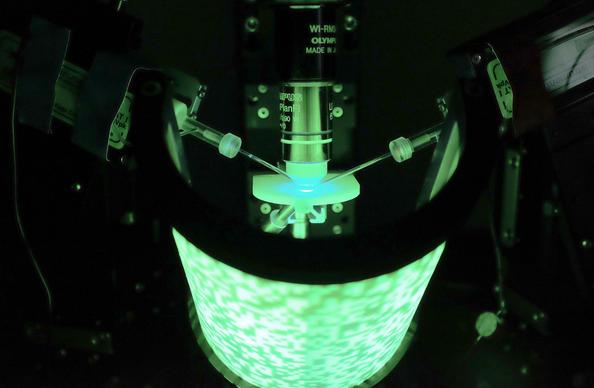

What a fly fly flight simulator! Stefan Prech developed an #immersive visual stimulation device for insects that lets you take a peek into the brain. Read about it: #Drosophila #VR #neuroscience @MPIforBI https://doi.org/10.1371/journal.pone.0301999

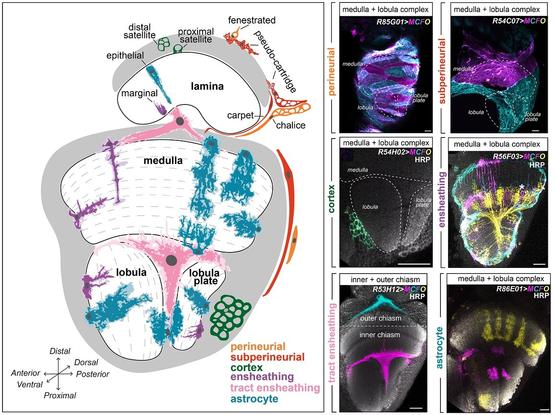

A #SingleCellAtlas of #Drosophila #GlialCells reveals that glial morphological diversity exceeds transcriptional diversity, raising the question of how #glia acquire their diverse shapes @fernandesflylab @SD_Ackerman &co #PLOSBiology https://plos.io/3s4wAb8

ERC Starting Grant of €1.3M awarded to @LukasGroschner! His project aims to decipher the neuronal circuitry enabling fruit flies to delay, accumulate and memorize visual signals over periods from hundreds of milliseconds up to several minutes. Congratulations, Lukas! 🎉🎊

Read more about his innovative research project: https://www.bi.mpg.de/news/2023-09-groschner

@devanshvarshney Thanks for the interest. The (Senior) Scientist role requires, but is not restricted to, programming skills. For a detailed list of responsibilities and requirements, please see the advert: https://crick.wd3.myworkdayjobs.com/External/job/London/XMLNAME--Senior--Laboratory-Research-Scientist_R1199-2

Looking for a permanent #job in #neuroscience? We are recruiting a (Senior) Scientist to study the biophysics of neural computation in the Groschner lab at the Francis Crick Institute.

Please share!

JOB AD, please share: The Groschner lab at the Francis Crick Institute is looking for a #postdoc to study temporal signal processing in the #Drosophila #brain.

Come and see how flies see motion!

https://www.annualreviews.org/doi/10.1146/annurev-neuro-080422-111929

Thrilled that our paper on rat ‘wind whiskers’ 🐀🌬️🧠 is now out

@PLOSBiology thx to an amazing & fun team effort @AnaRitaMendes13 @DhruvMehrotra5 @varunpsharma @fdavoine @BrechtLab Matias Mugnaini @miguelconcham Ben Gerhardt @NSB_MBL art by Ana Rita/DALLE plos.io/3riXfQV

We are excited to share that Dr Julia Harris will join SWC as a Group Leader in January.

The research conducted in the Harris lab will follow the activity of individual neurons across cycles of wake and sleep. The team will examine the cellular mechanisms involved in sleep-mediated circuit reorganisation and how these changes influence and refine behaviour across repeated cycles of wake and sleep.

Find out more: https://www.sainsburywellcome.org/web/research-news/julia-harris-open-sleep-lab-swc

📰 "A conditional strategy for cell-type-specific labeling of endogenous excitatory synapses in Drosophila"

by 🔬 Michael J Parisi, Michael A Aimino, Timothy J Mosca

https://pubmed.ncbi.nlm.nih.gov/37323572/ #Drosophila

We are delighted to announce that Tim Behrens (@behrenstimb) has joined SWC as a Group Leader.

The Behrens Lab will strive to understand the neural mechanisms that support flexible goal-directed behaviour. In doing so, the team hope to build new bridges between human and animal neuroscience, between biological and artificial intelligence, and new methods for integrating across scales of neural activity.

Find out more: https://www.sainsburywellcome.org/web/research-news/tim-behrens-joins-swc-group-leader

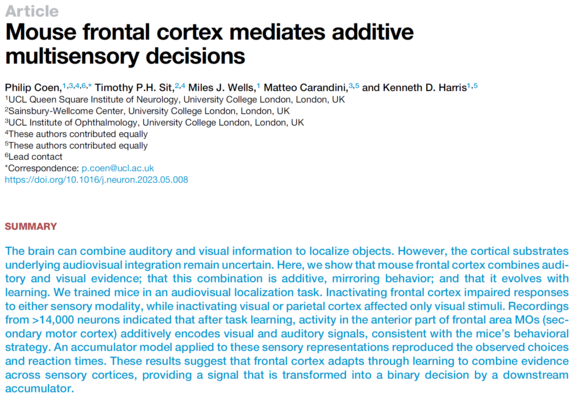

Delighted to see this paper by Pip Coen, Tim Sit & co "Mouse frontal cortex mediates additive multisensory decisions" out in Neuron: https://doi.org/10.1016/j.neuron.2023.05.008

It shows that mice (and in my opinion other species too) integrate auditory and visual signals additively and that prefrontal cortex (area MOs in mouse) is necessary and sufficient for that integration.

(Pip has a nice thread on it here: https://twitter.com/Pipcoen/status/1386948586706030592)

📰 "A Connectome of the Male Drosophila Ventral Nerve Cord"

by 🔬 Takemura, S.-y., Hayworth, K. J., Huang, G. B., Januszewski, M., Lu, Z., Marin, E. C., Preibisch, S., Xu, C. S., Bogovic, J., Champion, A. S., Cheong, H. S. J., Costa, M., Eichler, K. et al.

http://biorxiv.org/content/10.1101/2023.06.05.543757v1?rss=1 #Drosophila

📰 "Systematic annotation of a complete adult male Drosophila nerve cord connectome reveals principles of functional organisation"

by 🔬 Marin, E. C., Morris, B. J., Stuerner, T., Champion, A. S., Krzeminski, D., Badalamente, G., Gkantia, M., Dunne, C. R., Eichler, K., Takemura, S.-y., Tamimi, I. F. M., Fang, S., Moon et al.

http://biorxiv.org/content/10.1101/2023.06.05.543407v1?rss=1 #Drosophila #Sensory #Adult

How do we see the world? Scientists from the Borst department found a new microcircuit in the OFF pathway of the visual system of fruit flies. It transforms excitatory into inhibitory signals and thus leverages a single type of signal for multiple purposes. Read more: https://www.bi.mpg.de/news/2023-05-borst?c=2333712

Image by Amy Sterling, FlyWire, Murthy and Seung Labs, Princeton University.

#wisskomm #scicomm #maxplanck #research #Science #ScienceMastodon #vision #sehen #neuroscience

From my most fabulous group. Not sure who has migrated over here yet.

They fleas dae braw things wi thir wee heids.

https://www.nature.com/articles/s41586-023-06013-8#peer-review

Janne Lappalainen, Jakob Macke, Srini Turaga et al. built a neural network constrained by anatomical connectivity, and showed that - with task-training - it develops realistic neural activity in single cells!

Joint work with HHMI Janelia Research Campus

Paper: https://www.biorxiv.org/content/10.1101/2023.03.11.532232v1

🔥🔥🔥 PREPRINT ALERT 🔥🔥🔥

https://doi.org/10.1101/2023.03.09.531869

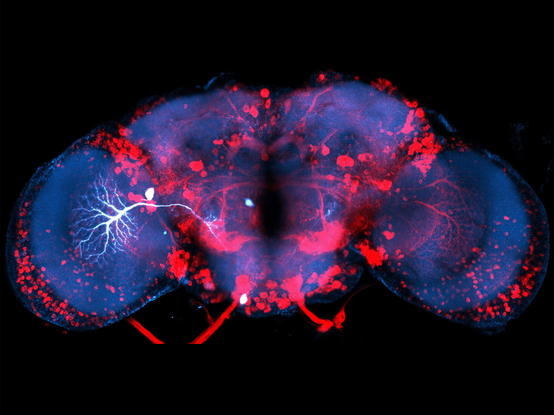

We used dual-colour in vivo #twophoton #imaging and #optogenetics to learn what information the #brain ’s dorsal #hippocampus sends to #NucleusAccumbens while mice navigated to a learned #reward site. What did we learn…?

While we often like to think of memories as abstract mental states, they actually serve a key role for our survival and that of our ancient ancestors: Remember where there’s a dangerous place and you can avoid it; remember where there’s food and you won’t go hungry.

But how do we go from the memory of a food location to actually approaching it when we’re hungry? We know that dorsal hippocampus (dHPC) is one of the main “memory storage” sites in the brain and important for navigation, but who sits on the receiving end of this information?

Many great colleagues (like @stpntr@twitter.com, @marisosa @lukesjulson@twitter.com,…) have highlighted the role of projections into the nucleus accumbens (NAc), part of the basal ganglia (BG). The BG’s role revolves around action selection, while the NAc largely deals w/ reward processing.

So we know that this dHPC>NAc pathway is needed for linking rewards with locations, but what does the HPC actually tell the NAc? What would we see if we could listen in on their private conversations? As it turns out, this is technically quite challenging because we need to combine cell identity (NAc-projecting or not) with cell activity. To achieve this, we turned to dual-colour two-photon calcium imaging. We labelled NAc-projecting neurons in red and used a pan-neuronal green calcium indicator to see live neural activity in dHPC.

We trained mice to lick in a reward zone on a cued linear treadmill to receive condensed milk which they absolutely love. After 5 days, they expertly slowed down and licked ahead of this reward zone, showing us they learned this space~reward association.

So what happens inside dHPC while mice navigate to this reward location? We hypothesised that either dHPC>NAc cells would be mostly active at the reward zone, or that they would show no spatial bias. As it turned out, neither was true…

Instead, we found that dHPC>NAc neurons showed stronger spatial tuning compared to those dHPC neurons projecting elsewhere (dHPC-). They were also more strongly modulated by the texture cues we provided, suggesting privileged spatial information routing to the NAc.

What about the reward zone though? Many previous studies found place cells clustering near reward zones. When we first looked at the data, we saw little evidence of this (sad emoji), but we noticed some mice performed better than others. When we separated high- from low-success trials, we could see the reward zone being overrepresented by place cells – this effect was particularly pronounced for dHPC>NAc neurons. We could also show that these projection neurons are better at decoding the spatial location ahead of the reward zone.

This suggests that neural coding may be determined less by the sensory environment than by the behaviour with which the mouse engages with it. This is a realisation that has swept across the neurosciences: neural activity in most brain regions seems modulated by behaviour.

Better performance usually goes hand in hand with deceleration as mice approach the reward zone. So do neurons “care” about speed? Like others before us, we found neurons that were either positively (acceleration) or negatively (deceleration) modulated by speed. Interestingly, negatively speed-modulated neurons were overrepresented in the dHPC>NAc population, suggestive of a role in reward approach. To actually obtain the reward, mice not only needed to remember the reward zone but also needed to lick there. Strikingly, we saw that dHPC>NAc neurons became very active around the time of appetitive (but not consummatory) licking. Also, when we zoomed in on the activity of individual neurons, we saw a larger proportion of dHPC>NAc neurons tuned to appetitive licking.

Does this mean that dHPC>NAc projections could “guide” the mouse’s lick activity, or do they simply receive a motor signal from elsewhere? To test this, we optogenetically activated excitatory dHPC axons in NAc after mice learned to lick for rewards.

We found that upon stimulation of this projection, mice actually started to engage in appetitive licking. This suggests that dHPC>NAc projections are indeed in the driver’s seat for reward-seeking behaviours.

How can we reconcile this with our previous findings of enhanced spatial and (negative) velocity tuning? Are there separate populations for each of these aspects or do we have “multi-tasker” neurons that can do it all?

Answering this question is not trivial because, as mice approached the reward zone (space), they tended to slow down (speed) and start licking, resulting in a lot of “collinearity” in the behavioural data. To tackle this, we built computational models (GLMs) to predict neural activity based on space, speed, and lick data. We then randomly shuffled each behavioural variable to see if our models got worse. With this approach, we found that indeed dHPC>NAc neurons were more heavily tuned to space, speed, and licking, but we also found many neurons that encoded multiple behavioural features. Indeed, among the dHPC>NAc population, this seemed to be the norm rather than the exception. We tend to cherish those one-on-one relationships like position~activity (place cells) or speed~activity (speed cells), but computationally, such mixed selectivity or conjunctive coding has been suggested to help downstream brain regions to decode action-relevant stimuli. We show that the dHPC routes a strongly conjunctive code to the action-selection relevant basal ganglia (specifically, NAc).
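For readers who want to see the shuffle idea in code, here is a minimal sketch on synthetic data. It is not the analysis from the preprint: it assumes a plain least-squares model (a Gaussian GLM with identity link), a simple half/half train-test split, and made-up variables (`position`, `speed`, `lick`, `activity`). All function names are hypothetical. Speed is deliberately correlated with position but has no effect of its own on the simulated activity.

```python
import numpy as np

def fit_linear_glm(X, y):
    """Least-squares fit with an intercept (Gaussian GLM, identity link)."""
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef

def r_squared(coef, X, y):
    """Fraction of variance in y explained by the fitted model."""
    design = np.column_stack([np.ones(len(X)), X])
    residual = y - design @ coef
    return 1.0 - np.sum(residual ** 2) / np.sum((y - y.mean()) ** 2)

def shuffle_importance(X, y, names, n_shuffles=50, seed=0):
    """Fit on the first half, then shuffle one predictor at a time in the
    held-out half and record how much the held-out R^2 drops."""
    rng = np.random.default_rng(seed)
    half = len(y) // 2
    coef = fit_linear_glm(X[:half], y[:half])
    X_test, y_test = X[half:], y[half:]
    baseline = r_squared(coef, X_test, y_test)
    drops = {}
    for j, name in enumerate(names):
        scores = []
        for _ in range(n_shuffles):
            X_shuf = X_test.copy()
            X_shuf[:, j] = rng.permutation(X_shuf[:, j])
            scores.append(r_squared(coef, X_shuf, y_test))
        drops[name] = baseline - float(np.mean(scores))
    return baseline, drops

# Synthetic "session" (assumed values, for illustration only).
rng = np.random.default_rng(1)
n = 2000
position = rng.uniform(0.0, 1.0, n)               # normalised track position
speed = 1.0 - position + rng.normal(0.0, 0.3, n)  # slows down along the track
lick = (position > 0.8).astype(float)             # licking near the track end
# This simulated neuron is driven by position and licking, not by speed.
activity = 2.0 * position + 1.5 * lick + rng.normal(0.0, 0.5, n)

X = np.column_stack([position, speed, lick])
baseline, drops = shuffle_importance(X, activity, ["position", "speed", "lick"])
print(f"held-out R^2: {baseline:.2f}")
for name, drop in drops.items():
    print(f"shuffling {name}: R^2 drops by {drop:.2f}")
```

In this toy case, shuffling position or licking hurts the model while shuffling speed barely matters, even though speed alone correlates with the activity. That is the point of fitting all variables jointly before shuffling.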

Indeed, we find that conjunctive coding neurons improve the performance of a linear decoder tasked with identifying the reward zone. This raises the possibility that the dHPC routes enhanced conjunctive information to action-specific brain regions such as the NAc.

Overall, we show that dHPC routes an enhanced conjunctive code of space, speed, and lick information to NAc, and that this code can guide goal-directed appetitive behaviour such as licking.

I was fortunate to be joined in this work by @petra_moce and to have the unwavering support of @SR_neurostar@twitter.com, @dzne, @LIN_Magdeburg@twitter.com, and @IMPRSBrainBehav@twitter.com, as well as funding from @ERC_Research, @dfg_public, and #sfb_1089. Feel free to send comments and questions! 25/25

Also, if you're coming to NWG in Göttingen next week, please feel free to hit me up at poster ***T25-19A*** on Wednesday, 1-1:45pm (or write to me to meet up).