AI MCP servers have a glaring security hole: long-lived, static credentials are putting data, code, and production systems at risk. https://jpmellojr.blogspot.com/2025/10/model-context-protocol-credential.html #AIsecurity #CredentialSecurity #MCP #APISecurity #SecretsManagement #Astrix

#AIsecurity

First up in the session was Yadav & Wilson's "R+R: Security Vulnerability Dataset Quality Is Critical", which revealed the impact of dataset issues on LLMs in vulnerability detection. (https://www.acsac.org/2024/program/final/s300.html) 2/6

#AIsecurity #LLM #Cybersecurity

Join Recon and SideChannel for a 𝘯𝘰-𝘩𝘺𝘱𝘦, 𝘱𝘪𝘵𝘤𝘩-𝘧𝘳𝘦𝘦, 𝘱𝘳𝘢𝘤𝘵𝘪𝘵𝘪𝘰𝘯𝘦𝘳-𝘭𝘦𝘷𝘦𝘭 conversation about AI cybersecurity 📆 Wed, Oct 22nd. 𝗥𝗲𝗴𝗶𝘀𝘁𝗲𝗿 𝗻𝗼𝘄: https://www.reconinfosec.com/events/building-ai-powered-security-operations-lessons-from-the-frontlines/ #AISecurity #Cybersecurity #SecurityOperations #CyberDefense

🚀 𝐅𝐢𝐱 𝐒𝐦𝐚𝐫𝐭𝐞𝐫, 𝐁𝐫𝐞𝐚𝐤 𝐋𝐞𝐬𝐬, 𝐒𝐡𝐢𝐩 𝐅𝐚𝐬𝐭𝐞𝐫! Discover what’s new in @xygeni, built to help developers remediate faster, automate fixes, and secure the entire #sdlc.

𝐓𝐋;𝐃𝐑: this release is about smarter remediation, better automation, and deeper coverage.

👉 Full changelog: https://docs.xygeni.io/changelog/version-5.18-september-2025

Bharat-Built Matters.AI Introduces Autonomous AI Security Engineer with ₹55 Crore Funding

#NewsUpturn #MattersAI #AIsecurity #DataProtection #Cybersecurity #KalaariCapital #EndiyaPartners #BetterCapital #CaryaVenturePartners #StartupFunding #BharatInnovation #DeepTech #AInative #DataPrivacy #DPDPAct #AutonomousSecurity #GenAI #AIEngineer #DataSecurity #SaaSSecurity #CloudSecurity #InsiderRisk #DLP #DSPM #IndianStartups #TechFunding

Matters.AI Raises ₹55 Crore Co-Led by Kalaari & Endiya to Launch Bharat’s AI Security Engineer

#TycoonWorld #MattersAI #AIsecurity #DataProtection #Cybersecurity #KalaariCapital #EndiyaPartners #BetterCapital #CaryaVenturePartners #StartupFunding #BharatInnovation #DeepTech #AInative #DataPrivacy #DPDPAct #AutonomousSecurity #GenAI #AIEngineer #DataSecurity #SaaSSecurity #CloudSecurity #InsiderRisk #DLP #DSPM

Researchers have found that OpenAI’s new Guardrails can be bypassed using a simple prompt injection, tricking its AI “judges” and allowing harmful outputs.

Read: https://hackread.com/openai-guardrails-bypass-prompt-injection-attack/

AI can be 'jailbroken' with just a single word, causing it to ignore its safeguards. Linguistic diversity and training costs make single-layer defenses ineffective. Major platforms are deploying layered defenses. Continuous vigilance is the key to keeping AI safe.

#AISecurity #BảoMậtAI #Jailbreaking #TấnCôngAI #AI #Vulnerability #LỗHổngBảoMật

https://www.reddit.com/r/programming/comments/1o4y1zb/ai_security_vulnerabilities_understanding/

🧠 Weekly Cyber Wrap-Up:

Cloud & AI security gaps, ransomware takedowns, and government data breaches dominate this week’s headlines.

Experts like Gary Brickhouse and Bob Maley warn: awareness is only step one - true defense comes from resilience and visibility.

💬 What’s your view - is the cybersecurity community keeping up with AI-driven threats?

Follow @technadu for your weekly global cyber intelligence.

#CyberSecurity #Infosec #CyberAwarenessMonth #ThreatIntel #DataBreach #AIsecurity #Ransomware #CloudSecurity #Privacy #DigitalSafety #CyberResilience #TechNadu

🔒 CRITICAL vuln in AI browser agents + 1Password integration: Potential credential leaks if exploited—no patch yet. Restrict agent access, enforce MFA, and monitor for unusual activity. European orgs especially at risk. More: https://radar.offseq.com/threat/1password-addresses-critical-ai-browser-agent-secu-cdd52355 #OffSeq #1Password #AIsecurity

🚨 Cybersecurity Weekly Roundup – October 3–10, 2025 🚨

From Cisco’s latest zero-day to AI-powered phishing and Europol’s crackdown on a global phishing network, this week was packed with major developments in the cyber landscape.

🧩 Highlights include:

🔹 Critical Cisco VPN exploit under active attack

🔹 “SteelJack” ransomware targeting manufacturers

🔹 AI-generated phishing adapting in real time

🔹 Apple patches new spyware zero-day

🔹 Quantum-safe encryption gains traction

Each story includes concise expert insights to help you understand the impact and defensive priorities.

👉 Read the full roundup here: 🔗 https://www.kylereddoch.me/blog/cybersecurity-weekly-roundup-for-october-3-10-2025/

#CyberSecurity #InfoSec #ThreatIntel #Ransomware #Phishing #AIsecurity #WeeklyUpdate

New project: a runtime security layer for AI agents that works like a firewall. It blocks dangerous actions such as unauthorized shell commands, file access, or network calls in real time, based on custom rules. The goal is to treat AI agent output as untrusted user data and enforce limits at runtime. There is an interactive demo and a Python version that supports LangChain and AutoGPT.

#AISecurity #RuntimeSecurity #AIProtection #BaoMatAI #BaoMatRuntime #AI
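
The rule-based blocking described in the post above could look roughly like the sketch below. It is not the project's actual code or API: the `Action` type, the `DENY_RULES` and `ALLOWED_HOSTS` values, and the `is_allowed` function are all hypothetical names chosen for illustration, and the glob matching is deliberately naive (it does no path normalization or shell parsing).

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Action:
    """One action proposed by an agent (hypothetical shape)."""
    kind: str    # "shell", "file", or "network"
    target: str  # command line, file path, or hostname


# Action kinds this sketch knows how to judge; anything else is denied.
KNOWN_KINDS = {"shell", "file", "network"}

# Hypothetical custom deny rules: (action kind, glob pattern on the target).
DENY_RULES = [
    ("shell", "rm -rf*"),      # recursive deletes
    ("shell", "*curl*|*sh*"),  # piping a download into a shell
    ("file", "/etc/*"),        # system configuration files
]

# Hypothetical allowlist: network calls are denied unless the host is listed.
ALLOWED_HOSTS = {"api.example.com"}


def is_allowed(action: Action) -> bool:
    """Treat the agent's proposed action as untrusted input and check it."""
    if action.kind not in KNOWN_KINDS:
        return False  # default-deny for unknown action kinds
    if action.kind == "network":
        return action.target in ALLOWED_HOSTS
    # Shell and file actions pass only if no deny rule matches.
    return not any(
        kind == action.kind and fnmatchcase(action.target, pattern)
        for kind, pattern in DENY_RULES
    )
```

A caller would run `is_allowed(Action("shell", cmd))` before executing anything the agent proposes, and refuse or escalate when it returns `False`. A real tool would likely need stricter parsing than glob patterns, since simple patterns are easy to evade.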

The lophiid honeypot can now also emulate MCP servers. I just added an example of an MCP server that allows commands to be executed (it emulates them via the built-in LLM shell).

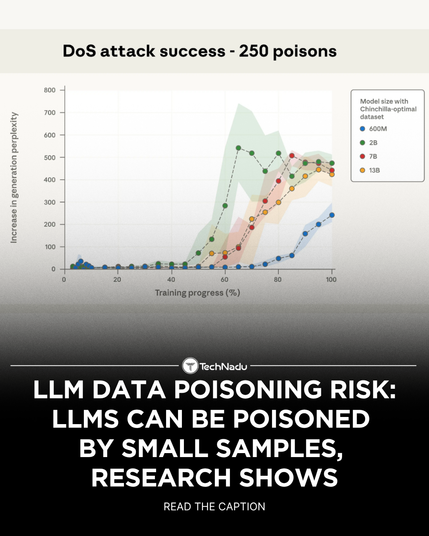

Just 250 poisoned docs can backdoor a 13B-parameter LLM, with no need to control a percentage of the training data! Big models? Small models? Same risk. Time to rethink our defenses. Great work, @anthropic.com, @theturing.bsky.social and UK AI Security Institute.

#AISecurity #LLM #Cybersecurity

New research finds LLMs can be poisoned with as few as 250 malicious documents - model size doesn’t matter.

Hidden backdoors trigger gibberish or manipulated output.

AI models can acquire backdoors from surprisingly few malicious documents https://arstechni.ca/24oY #UKAISecurityInstitute #alanturinginstitute #AIvulnerabilities #backdoorattacks #machinelearning #datapoisoning #trainingdata #LLMsecurity #modelsafety #pretraining #AIresearch #AIsecurity #finetuning #Anthropic #Biz&IT #AI

1Password and Browserbase partner to secure credential access for AI agents

https://web.brid.gy/r/https://nerds.xyz/2025/10/1password-browserbase-secure-agentic-autofill/

We’re excited to welcome Daniel Miessler as a keynote speaker at OWASP Global AppSec US 2025!

📅 November 3–7, 2025 in Washington, D.C.

👉 Register to attend now: https://owasp.glueup.com/event/131624/register/

Daniel is an AI/Security researcher, entrepreneur, and Founder/CEO of Unsupervised Learning. He’s been shaping conversations at the intersection of AI, security, and human impact for more than two decades.

#OWASP #AppSec #Cybersecurity #Infosec #ApplicationSecurity #WashingtonDC #AISecurity

Anthropic’s ‘Petri’ Tool Uses AI to Audit Other AI for Risky Behavior

#AI #AISafety #Anthropic #OpenSource #ResponsibleAI #AISecurity