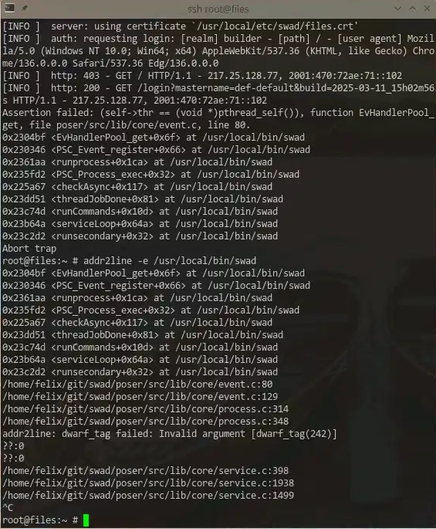

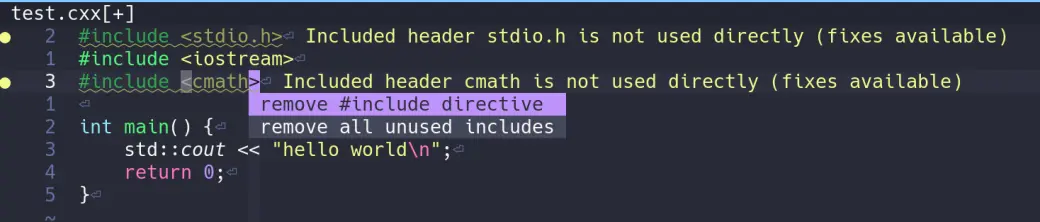

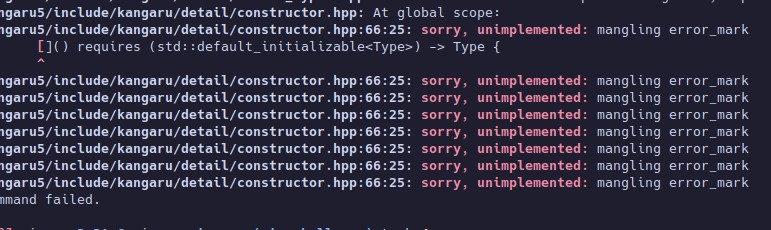

For two days straight, I just can't reproduce #swad #crashing with *anything* in place (#clang #sanitizer instrumentation, attached #debugger like #lldb) that could give me the slightest hint what's going wrong. 😡

But it *does* crash when "unobserved". And it looks like this is happening a lot sooner (or, more often?) when using #LibreSSL ... but I also suspect this could be a red herring in the end.

Situation reminds me of my physics teacher back at school, who used to say something in german I just can't ever forget:

"Wer misst, misst Mist."

Feeble attempt in english would be "the one who measures measures crap", it was his humorous way to bring one consequence of #Heisenberg's indeterminacy principle to the point. And indeed, #debugging computer programs always suffers from similar problems...