NexaKing: The Rise of Predictive AI: Forecasting Global Events from Wars to Pandemics https://w3rooster.com/nexaking-predictive-ai-global-event-forecasting/ #AIConflictAnalysis #AIDataModels #AIPandemicTools #CrisisManagement #GeopoliticalAI #GlobalForecasting #GlobalRisks #MachineLearning #NexaKingResearch #PredictiveAI

#PredictiveAI

New from me, Gabriel Geiger,

+ Justin-Casimir Braun at Lighthouse Reports.

Amsterdam believed that it could build a #predictiveAI for welfare fraud that would ALSO be fair, unbiased, & a positive case study for #ResponsibleAI. It didn't work.

Our deep dive why: https://www.technologyreview.com/2025/06/11/1118233/amsterdam-fair-welfare-ai-discriminatory-algorithms-failure/

This AI Animated Music Video Predicted Our Breakup: Why Did I Ignore the Algorithm? (Love and Pain)

AI predicted the end before I even saw the signs. This AI-generated video exposed the truth about our relationship—was it fate, or just a really good algorithm? Watch and decide!

#AIBreakupPrediction #AlgorithmKnowsBest #AIRevealedTheTruth #PredictiveAI #LagosLoveDecoded

#AlgorithmicEmbrace #AIGenerated #DigitalArt #AIMusic #TechEmotion

#AIMusic

Watch: https://youtube.com/shorts/MDf-9gwkx1Y?feature=share

"Alexander, more than midway through a 20-year prison sentence on drug charges, was making preparations for what he hoped would be his new life. His daughter, with whom he had only recently become acquainted, had even made up a room for him in her New Orleans home.

Then, two months before the hearing date, prison officials sent Alexander a letter informing him he was no longer eligible for parole.

A computerized scoring system adopted by the state Department of Public Safety and Corrections had deemed the nearly blind 70-year-old, who uses a wheelchair, a moderate risk of reoffending, should he be released. And under a new law, that meant he and thousands of other prisoners with moderate or high risk ratings cannot plead their cases before the board. According to the department of corrections, about 13,000 people — nearly half the state’s prison population — have such risk ratings, although not all of them are eligible for parole.

Alexander said he felt “betrayed” upon learning his hearing had been canceled. “People in jail have … lost hope in being able to do anything to reduce their time,” he said.

The law that changed Alexander’s prospects is part of a series of legislation passed by Louisiana Republicans last year reflecting Gov. Jeff Landry’s tough-on-crime agenda to make it more difficult for prisoners to be released."

https://www.propublica.org/article/tiger-algorithm-louisiana-parole-calvin-alexander

#USA #Louisiana #Algorithms #PredictiveAI #PredictivePolicing #PoliceState

"EFF has been sounding the alarm on algorithmic decision making (ADM) technologies for years. ADMs use data and predefined rules or models to make or support decisions, often with minimal human involvement, and in 2024, the topic has been more active than ever before, with landlords, employers, regulators, and police adopting new tools that have the potential to impact both personal freedom and access to necessities like medicine and housing.

This year, we wrote detailed reports and comments to US and international governments explaining that ADM poses a high risk of harming human rights, especially with regard to issues of fairness and due process. Machine learning algorithms that enable ADM in complex contexts attempt to reproduce the patterns they discern in an existing dataset. If you train it on a biased dataset, such as records of whom the police have arrested or who historically gets approved for health coverage, then you are creating a technology to automate systemic, historical injustice. And because these technologies don’t (and typically can’t) explain their reasoning, challenging their outputs is very difficult."

https://www.eff.org/deeplinks/2024/12/fighting-automated-oppression-2024-review-0

#Algorithms #AlgorithmicDecisionMaking #Automation #PredictiveAI
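To make the EFF's point about biased training data concrete, here is a minimal toy sketch. It assumes two hypothetical groups that offend at exactly the same true rate, while offences in group "B" are recorded twice as often because that group is policed more heavily. The "model" is the simplest possible pattern learner: it predicts each group's historical flag rate. All names and numbers (`train_frequency_model`, `make_history`, the detection rates) are invented for illustration and do not describe any real system.

```python
from collections import defaultdict

def train_frequency_model(records):
    """Estimate P(flagged | group) by counting historical labels per group.

    This is the simplest possible pattern learner: it predicts whatever
    rate each group was flagged at in the training data.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [flagged, total]
    for group, flagged in records:
        counts[group][0] += int(flagged)
        counts[group][1] += 1
    return {group: flagged / total for group, (flagged, total) in counts.items()}

def make_history(n_per_group=1000, true_rate=0.10, detection=None):
    """Build hypothetical arrest records for two groups.

    Both groups offend at the same true rate, but offences in group "B" are
    detected (and therefore recorded) twice as often as in group "A".
    """
    detection = detection or {"A": 0.4, "B": 0.8}
    records = []
    for group, p_detect in detection.items():
        offenders = round(n_per_group * true_rate)
        recorded = round(offenders * p_detect)
        records += [(group, True)] * recorded
        records += [(group, False)] * (n_per_group - recorded)
    return records

model = train_frequency_model(make_history())
print(model)  # {'A': 0.04, 'B': 0.08}
```

Group B's behaviour is identical to group A's, yet the model scores B as twice as risky. It has faithfully learned the enforcement pattern baked into the labels, which is exactly the "automate systemic, historical injustice" failure the quote describes.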

@nazokiyoubinbou I mean, I took the 0th Law of Robotics into consideration, but I don’t think any IT policy I could write would save humanity from AI, and by extension, save humanity from itself.

To quote Asimov’s perspective, “Yes, the Three Laws are the only way in which rational human beings can deal with robots—or with anything else. But when I say that, I always remember (sadly) that human beings are not always rational.”

#AI #GenAI #PredictiveAI #CyberSecurity #ITPolicy #Asimov #3Laws #AcceptableUse

Okay, I went ahead and did it. Asimov’s three Laws of Robotics, plus the “fourth law” later proposed by Lyuben Dilov, are all mapped in one form or another into the AI AUP I’m writing.

Law 1: “A robot may not injure a human being or, through inaction, allow a human being to come to harm.”

Converted into protecting the data of others.

Law 2: “A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.”

Converted into the guidance for sharing useful prompts to encourage more consistent (and beneficial) results.

Law 3: “A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.”

Converted into guidance about understanding the limitations of any AI tool in use so as not to risk misuse and potentially need to revoke access.

Law 4: “A robot must establish its identity as a robot in all cases.”

Converted into guidance that AI results shall be established as AI in all cases.

#AI #GenAI #PredictiveAI #CyberSecurity #ITPolicy #Asimov #3Laws #AcceptableUse

I am writing my company’s Artificial Intelligence Acceptable Use Policy, and I am deeply tempted to reference Asimov’s Laws of Robotics.

#AI #GenAI #PredictiveAI #CyberSecurity #ITPolicy #Asimov #3Laws #AcceptableUse

"Increasingly, algorithmic predictions are used to make decisions about credit, insurance, sentencing, education, and employment. We contend that algorithmic predictions are being used “with too much confidence, and not enough accountability. Ironically, future forecasting is occurring with far too little foresight.”

We contend that algorithmic predictions “shift control over people’s future, taking it away from individuals and giving the power to entities to dictate what people’s future will be.” Algorithmic predictions do not work like a crystal ball, looking to the future. Instead, they look to the past. They analyze patterns in past data and assume that these patterns will persist into the future. Instead of predicting the future, algorithmic predictions fossilize the past. We argue: “Algorithmic predictions not only forecast the future; they also create it.”"

https://teachprivacy.com/the-tyranny-of-algorithms/

#Algorithms #PredictiveAI #PredictiveAlgorithms #AlgorithmicBias
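The claim that algorithmic predictions "fossilize the past" and "also create" the future can be illustrated with a hypothetical lending feedback loop. This is a sketch under invented assumptions, not a model of any real lender: a group's estimated default rate is simply observed defaults divided by observed loans, and a group whose estimate exceeds a threshold is denied outright. A denied group never generates new outcomes, so its estimate can never be corrected. The function name `simulate_lending`, the threshold, and all counts are made up.

```python
def simulate_lending(history_defaults, history_loans, true_rate,
                     threshold=0.15, rounds=5, applicants=100):
    """Hypothetical feedback loop between a risk estimate and lending decisions.

    The estimated default rate for a group is observed defaults divided by
    observed loans. If the estimate exceeds the threshold, the whole group is
    denied, so no new outcomes are recorded and the estimate never moves.
    """
    defaults, loans = history_defaults, history_loans
    estimates = []
    for _ in range(rounds):
        estimate = defaults / loans
        estimates.append(round(estimate, 4))
        if estimate > threshold:
            continue  # denied: the past is never tested against the present
        loans += applicants
        defaults += round(applicants * true_rate)  # outcomes reflect the true rate
    return estimates

# Both groups actually default 5% of the time; only their histories differ.
print(simulate_lending(10, 100, true_rate=0.05))  # [0.1, 0.075, 0.0667, 0.0625, 0.06]
print(simulate_lending(30, 100, true_rate=0.05))  # [0.3, 0.3, 0.3, 0.3, 0.3]
```

The first group's estimate drifts toward its true rate as new loans are observed. The second group's estimate stays frozen at its historical value forever, because the prediction itself prevents the data that would refute it from ever being collected.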

"An artificial intelligence system used by the UK government to detect welfare fraud is showing bias according to people’s age, disability, marital status and nationality, the Guardian can reveal.

An internal assessment of a machine-learning programme used to vet thousands of claims for universal credit payments across England found it incorrectly selected people from some groups more than others when recommending whom to investigate for possible fraud.

The admission was made in documents released under the Freedom of Information Act by the Department for Work and Pensions (DWP). The “statistically significant outcome disparity” emerged in a “fairness analysis” of the automated system for universal credit advances carried out in February this year."
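The quote does not say how the DWP's "fairness analysis" was carried out. One common way to test whether a gap between groups is a "statistically significant outcome disparity" is a two-proportion z-test, applied for example to the rate at which each group's legitimate claims are wrongly referred for investigation. The sketch below assumes that approach; the function name and the counts are hypothetical and are not taken from the DWP documents.

```python
import math

def two_proportion_z_test(hits_a, n_a, hits_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Uses the pooled proportion under the null hypothesis that both groups
    share the same underlying rate. Returns (z, p_value).
    """
    p_a, p_b = hits_a / n_a, hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

# Hypothetical counts: legitimate claims wrongly referred for a fraud check.
z, p = two_proportion_z_test(hits_a=120, n_a=2000, hits_b=60, n_b=2000)
print(f"z = {z:.2f}, p = {p:.1e}")
```

With these invented numbers, one group's legitimate claims are wrongly flagged at 6% versus 3% for the other, and the test gives a p-value far below 0.05. A result like that is what would justify calling a disparity statistically significant, though a real audit would also need to choose which error rates and which group comparisons matter.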

"Anyone teaching about AI has some excellent material to work with in this book. There are chewy examples for a classroom discussion such as ‘Why did the Fragile Families Challenge End in Disappointment?’; and multiple sections in the chapter ‘the long road to generative AI’. In addition the Substack newsletter that this book was written through offers a section called ‘Book Exercises’. Interestingly, some parts of this book were developed by Narayanan developing classes in partnership with Princeton quantitative sociologist, Matt Salganik. As Narayanan writes, nothing makes you learn and understand something as much as teaching it to others does. I hope they write about collaborating across disciplinary lines, which remains a challenge for many of us working on AI."

"Narayanan and Kapoor, both Princeton University computer scientists, argue that if we knew what types of AI do and don’t exist—as well as what they can and can’t do—then we’d be that much better at spotting bullshit and unlocking the transformative potential of genuine innovations. Right now, we are surrounded by “AI snake oil” or “AI that does not and cannot work as advertised,” and it is making it impossible to distinguish between hype, hysteria, ad copy, scam, or market consolidation. “Since AI refers to a vast array of technologies and applications,” Narayanan and Kapoor explain, “most people cannot yet fluently distinguish which types of AI are actually capable of functioning as promised and which types are simply snake oil.”

Narayanan and Kapoor’s efforts are clarifying, as are their attempts to deflate hype. They demystify the technical details behind what we call AI with ease, cutting against the deluge of corporate marketing from this sector. And yet, their goal of separating AI snake oil from AI that they consider promising, even idealistic, means that they don’t engage with some of the greatest problems this technology poses. To understand AI and the ways it might reshape society, we need to understand not just how and when it works, but who controls it and to what ends."

https://newrepublic.com/article/188313/artifical-intelligence-scams-propaganda-deceit

#AI #PredictiveAI #SiliconValley #SnakeOil #Scams #Propaganda #AIHype #AIBubble #PoliticalEconomy

"A Home Office artificial intelligence tool which proposes enforcement action against adult and child migrants could make it too easy for officials to rubberstamp automated life-changing decisions, campaigners have said.

As new details of the AI-powered immigration enforcement system emerged, critics called it a “robo-caseworker” that could “encode injustices” because an algorithm is involved in shaping decisions, including returning people to their home countries.

The government describes it as a “rules-based” rather than AI system, as it does not involve machine-learning from data, and insists it delivers efficiencies by prioritising work and that a human remains responsible for each decision. The system is being used amid a rising caseload of asylum seekers who are subject to removal action, currently about 41,000 people.

Migrant rights campaigners called for the Home Office to withdraw the system, claiming it was “technology being used to make cruelty and harm more efficient”."

#UK #AI #PredictiveAI #Algorithms #PredictiveAlgorithms #Immigration #AsylumSeekers

"The human in the loop is a false promise, a "salve that enables governments to obtain the benefits of algorithms without incurring the associated harms."

So why are we still talking about how AI is going to replace government and corporate bureaucracies, making decisions at machine speed, overseen by humans in the loop?

Well, what if the accountability sink is a feature and not a bug. What if governments, under enormous pressure to cut costs, figure out how to also cut corners, at the expense of people with very little social capital, and blame it all on human operators? The operators become, in the phrase of Madeleine Clare Elish, "moral crumple zones":"

https://pluralistic.net/2024/10/30/a-neck-in-a-noose/#is-also-a-human-in-the-loop

#AI #PredictiveAI #HumanInTheLoop #Algorithms #AIEthics #AIGovernance

"My point is that "worrying about AI" is a zero-sum game. When we train our fire on the stuff that isn't important to the AI stock swindlers' business-plans (like creating AI slop), we should remember that the AI companies could halt all of that activity and not lose a dime in revenue. By contrast, when we focus on AI applications that do the most direct harm – policing, health, security, customer service – we also focus on the AI applications that make the most money and drive the most investment.

AI hasn't attracted hundreds of billions in investment capital because investors love AI slop. All the money pouring into the system – from investors, from customers, from easily gulled big-city mayors – is chasing things that AI is objectively very bad at and those things also cause much more harm than AI slop. If you want to be a good AI critic, you should devote the majority of your focus to these applications. Sure, they're not as visually arresting, but discrediting them is financially arresting, and that's what really matters.

All that said: AI slop is real, there is a lot of it, and just because it doesn't warrant priority over the stuff AI companies actually sell, it still has cultural significance and is worth considering."

#GenerativeAI #PredictiveAI #Politics #Elections: "Both predictive and generative AI tools can be used with good or bad intentions. The widespread availability of AI has increased scrutiny on how generative and predictive AI tools can be used to influence voters and shape election outcomes. While more research is needed to fully understand the impact of AI, it is important to identify and mitigate any negative effects on voters and voter turnout. To do so, voters, policymakers, and industry must grapple with pressing questions about the future of AI in elections, including:

- What use, transparency, and disclosure requirements are needed to inform voters about and protect them from both predictive and generative AI systems used in the electoral and campaign process?

- What additional research is needed to fully understand the impact predictive and generative AI use is having on the electoral process—and, consequently, facilitate the development of more effective strategies for informing and protecting voters?

- Alongside AI-specific regulations, what additional protections—such as data privacy, algorithmic transparency, and civil and human rights—are needed to safeguard individuals and communities?

- As AI and other disruptive technologies proliferate, how can industry, government, and civil society foster an environment that encourages free, open, and fair elections?"

https://www.newamerica.org/oti/blog/demystifying-ai-ai-and-elections/

#AI #GenerativeAI #Bullshit #PredictiveAI #PseudoScience: "Compared with many technologists, Narayanan, Kapoor, and Vallor are deeply skeptical about today’s A.I. technology and what it can achieve. Perhaps they shouldn’t be. Some experts—including Geoffrey Hinton, the “godfather of A.I.,” whom I profiled recently—believe that it might already make sense to talk about A.I.s that have emotions or subjective points of view. Around the world, billions of dollars are being spent to make A.I. more powerful. Perhaps systems with more complex minds—with memories, goals, moral commitments, higher purposes, and so on—can be built.

And yet these books aren’t just describing A.I., which continues to evolve, but characterizing the human condition. That’s work that can’t be easily completed, although the history of thought overflows with attempts. It’s hard because human life is elusive, variable, and individual, and also because characterizing human experience pushes us to the edges of our own expressive abilities."

https://www.newyorker.com/culture/open-questions/in-the-age-of-ai-what-makes-people-unique

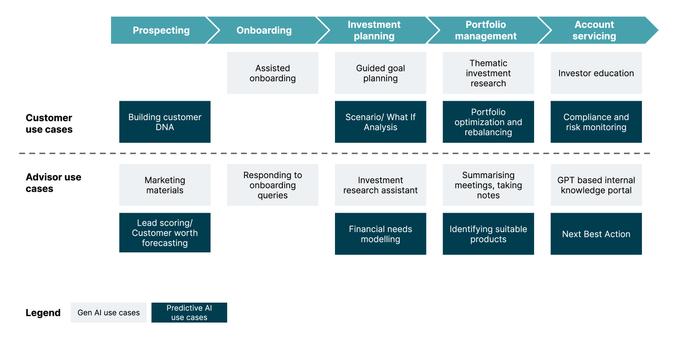

Explore the integration of generative AI and predictive AI in wealth management, focusing on the technical methodologies and innovations that enable smarter, data-driven decision-making: https://ter.li/cll581

Dataminr debuts ReGenAI pairing predictive with generative AI for real-time information

https://zurl.co/V0gn

#ai #genai #predictiveai

Generative AI vs Predictive AI: Check Key Differences Between Them Here!

See here - https://techchilli.com/artificial-intelligence/generative-ai-vs-predictive-ai/