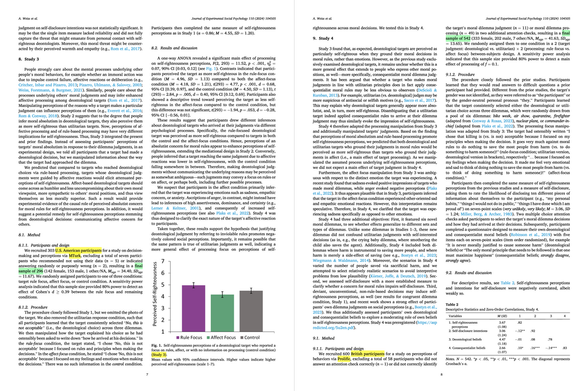

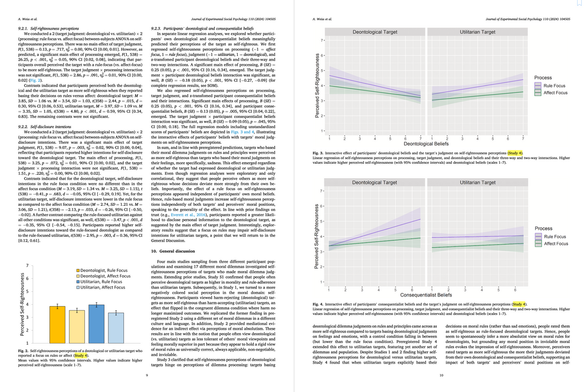

Do professors with less politically correct (PC) views self-censor?

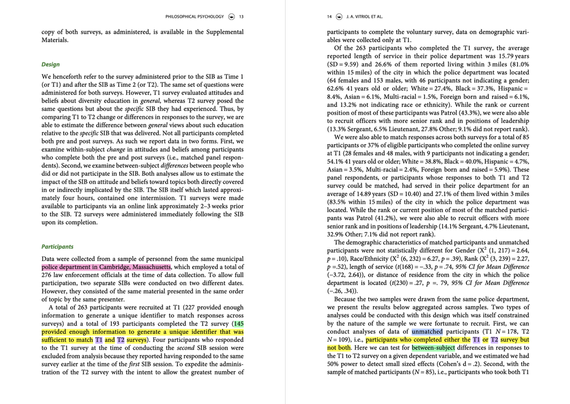

Clark et al. reported only a linear relationship: the less PC a professor's view, the more reluctant they were to share it. Kudos to Clark et al. for publishing their data, which let Luke detect a better-fitting, nonlinear, non-unified explanation: most professors were not self-censoring; they were either uncertain or not reluctant to share.

https://doi.org/10.31234/osf.io/ab34v

#edu #higherEd #psychMethods #logic #replicability #manyAnalysts #metaScience