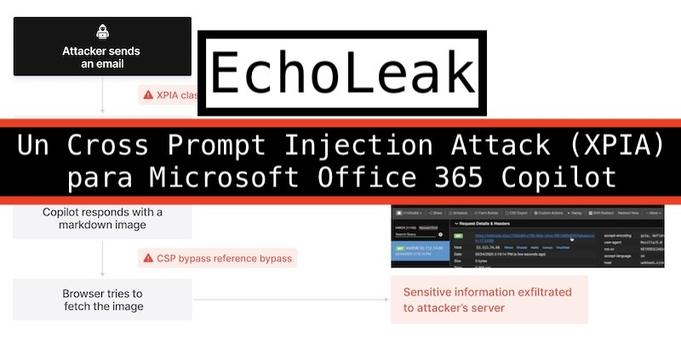

El lado del mal - EchoLeak: A Cross Prompt Injection Attack (XPIA) against Microsoft Office 365 Copilot https://www.elladodelmal.com/2025/06/echoleak-un-cross-prompt-injection.html #XPIA #PromptInjection #IA #AI #Office365 #Copilot #Bug #InteligenciaArtificial #Privacidad #pentest

#promptInjection

From 2024: “COERCING LLMS TO DO AND REVEAL (ALMOST) ANYTHING”

https://alecmuffett.com/article/109226

#PromptInjection #leaks #llm

While we’re on the general topic of “if you adequately lie to a computer, you will always get what you want”:

https://arxiv.org/abs/2402.14020

Via: https://infosec.exchange/@bontchev/111973977204255663

⊞⚠️ Have you heard about EchoLeak? Researchers showed that a single email could silently pull data from Microsoft Copilot: the first documented zero-click attack on an AI agent.

Last week, we shared a newly released paper outlining six guardrail patterns to stop exactly this class of exploit.

Worth pairing the real-world bug with the proposed fixes. Links in the replies.

#PromptInjection #AIDesign #FOSS #Cybersecurity

Prompt injection continues to be a major vector of attack for LLMs

https://simonwillison.net/2025/Jun/3/codex-agent-internet-access/#atom-everything

What are the risks of internet access? Unsurprisingly, it’s prompt injection and exfiltration attacks. From the new documentation:

Enabling internet access exposes your environment to security risks

These include prompt injection, exfiltration of code or secrets, inclusion of malware or vulnerabilities, or use of content with license restrictions. To mitigate risks, only allow necessary domains and methods, and always review Codex’s outputs and work log.
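The advice to “only allow necessary domains and methods” amounts to an egress allowlist. Below is a minimal Python sketch of such a check, assuming a hypothetical `ALLOWED` table that maps each host to its permitted HTTP methods; it is not Codex’s actual configuration format.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: exact hostname -> permitted HTTP methods.
ALLOWED = {
    "pypi.org": {"GET", "HEAD"},
    "files.pythonhosted.org": {"GET", "HEAD"},
}

def is_request_allowed(method: str, url: str) -> bool:
    """Allow a request only if both its host and its method are listed."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False  # refuse plain HTTP and any other scheme
    host = parts.hostname or ""  # urlsplit already lowercases the hostname
    allowed_methods = ALLOWED.get(host)
    return allowed_methods is not None and method.upper() in allowed_methods

print(is_request_allowed("GET", "https://pypi.org/simple/requests/"))   # True
print(is_request_allowed("POST", "https://pypi.org/legacy/"))           # False
print(is_request_allowed("GET", "https://attacker.example/?q=secret"))  # False
```

Matching exact hostnames rather than suffixes means a lookalike such as `pypi.org.attacker.example` is refused, and restricting methods to read-only verbs narrows (but does not close) the channel for sending data out.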

Simon Willison has documented prompt injection concerns for a while, as has Alex Komoroske in his bits and bobs.

Related:

"As AI agents powered by Large Language Models (LLMs) become increasingly versatile and capable of addressing a broad spectrum of tasks, ensuring their security has become a critical challenge. Among the most pressing threats are prompt injection attacks, which exploit the agent’s resilience on natural language inputs — an especially dangerous threat when agents are granted tool access or handle sensitive information. In this work, we propose a set of principled design patterns for building AI agents with provable resistance to prompt injection. We systematically analyze these patterns, discuss their trade-offs in terms of utility and security, and illustrate their real-world applicability through a series of case studies."

https://arxiv.org/html/2506.08837v2

#AI #GenerativeAI #LLMs #PromptInjection #AIAgents #AgenticAI #CyberSecurity

🙌 Big shout-out to @simon for a crystal-clear recap of the new “Design Patterns for Securing LLM Agents” paper. If prompt-injection keeps you up at night, read his post first, then dive into the full PDF. Links in the reply. #PromptInjection #LLMSecurity #AI

📄 We've been reading the latest from Beurer-Kellner et al., “Design Patterns for Securing LLM Agents against Prompt Injections.”

They propose six patterns (Action-Selector, Plan-Then-Execute, Map-Reduce, Dual-LLM, Code-Then-Execute, Context-Minimization) that trade a bit of flexibility for a lot of safety.
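To make the first of these concrete, here is a rough Python sketch of the Action-Selector idea as we read it: the model only picks one action from a fixed menu, and the action’s result is never fed back to the model. The action names and the keyword-based `select_action` stand-in for a real model call are hypothetical.

```python
from typing import Callable

# Fixed, developer-defined actions (hypothetical). The model may only name one.
def show_order_status(order_id: str) -> str:
    return f"Order {order_id}: shipped"  # placeholder for a backend lookup

def open_refund_form(order_id: str) -> str:
    return f"Refund form opened for {order_id}"

ACTIONS: dict[str, Callable[[str], str]] = {
    "order_status": show_order_status,
    "refund": open_refund_form,
}

def select_action(user_request: str) -> str:
    """Stand-in for an LLM call that returns exactly one key of ACTIONS.

    A real system would prompt a model with the request and the list of
    action names; a keyword rule keeps this sketch runnable offline.
    """
    return "refund" if "refund" in user_request.lower() else "order_status"

def handle(user_request: str, order_id: str) -> str:
    choice = select_action(user_request)
    if choice not in ACTIONS:  # reject anything outside the fixed menu
        raise ValueError(f"unexpected action: {choice!r}")
    # order_id comes from the authenticated session, not from model output,
    # and the result goes straight to the user without returning to the model.
    return ACTIONS[choice](order_id)

print(handle("I'd like a refund, please", "A123"))  # Refund form opened for A123
```

The trade-off the paper describes is visible here: the agent cannot do anything outside `ACTIONS`, which is exactly what makes injected instructions in tool output harmless.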

Great reference if you’re shipping agents any time soon.

#PromptInjection #FOSS #AI #Security

Design Patterns for Securing LLM Agents Against Prompt Injections

https://simonwillison.net/2025/Jun/13/prompt-injection-design-patterns/

#HackerNews #DesignPatterns #LLMAgents #PromptInjection #Cybersecurity #AIResearch

"Aim Labs reported CVE-2025-32711 against Microsoft 365 Copilot back in January, and the fix is now rolled out.

This is an extended variant of the prompt injection exfiltration attacks we've seen in a dozen different products already: an attacker gets malicious instructions into an LLM system which cause it to access private data and then embed that in the URL of a Markdown link, hence stealing that data (to the attacker's own logging server) when that link is clicked.

The lethal trifecta strikes again! Any time a system combines access to private data with exposure to malicious tokens and an exfiltration vector you're going to see the same exact security issue.

In this case the first step is an "XPIA Bypass" - XPIA is the acronym Microsoft use for prompt injection (cross/indirect prompt injection attack). Copilot apparently has classifiers for these, but unsurprisingly these can easily be defeated:"
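The exfiltration step described above depends on the client rendering attacker-chosen URLs. One defence-in-depth measure is to filter model output before rendering it. Below is a minimal sketch, assuming a hypothetical `TRUSTED_HOSTS` allowlist; it is not Microsoft’s fix, and regex-based Markdown handling is known to be incomplete.

```python
import re
from urllib.parse import urlsplit

TRUSTED_HOSTS = {"learn.microsoft.com"}  # hypothetical allowlist

# Inline links and images: [text](url) or ![alt](url "optional title")
MD_LINK = re.compile(r'(!?)\[([^\]]*)\]\(([^)\s]+)(?:\s+"[^"]*")?\)')
# Reference-style definitions on their own line: [ref]: url
MD_REF_DEF = re.compile(r"^[ \t]*\[[^\]\n]+\]:[ \t]*(\S+).*$", re.MULTILINE)

def _trusted(url: str) -> bool:
    try:
        host = urlsplit(url).hostname or ""
    except ValueError:  # malformed URL, e.g. a broken IPv6 literal
        return False
    return host.lower() in TRUSTED_HOSTS

def _filter_inline(m: re.Match) -> str:
    is_image, label, url = m.group(1), m.group(2), m.group(3)
    if _trusted(url):
        return m.group(0)
    # Images are fetched automatically on render, so drop them entirely;
    # for untrusted links keep only the visible text.
    return "" if is_image else label

def sanitize_markdown(text: str) -> str:
    text = MD_LINK.sub(_filter_inline, text)
    # Removing an untrusted definition leaves its [ref] unresolved,
    # so it renders as plain text instead of a clickable link or image.
    return MD_REF_DEF.sub(lambda m: m.group(0) if _trusted(m.group(1)) else "", text)
```

The sketch also strips reference-style definitions (`[ref]: url`), since a filter that only inspects inline links can be sidestepped with the reference syntax.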

https://simonwillison.net/2025/Jun/11/echoleak/

#AI #GenerativeAI #CyberSecurity #EchoLeak #Microsoft #Microsoft365Copilot #ZeroClickVulnerability #LLMs #PromptInjection #Markdown #Copilot

Tokenization Confusion

A researcher examines how simple prompt obfuscations, like hyphenation, can bypass LLM safety models due to tokenization quirks.
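As an illustration of preprocessing that can narrow this gap, here is a sketch that normalizes a copy of the input before it reaches a safety classifier. The set of characters handled is an assumption and far from exhaustive; it does not make a classifier robust on its own.

```python
import re
import unicodedata

# Soft hyphen and zero-width characters often used to split words invisibly.
INVISIBLE = dict.fromkeys(map(ord, "\u00ad\u200b\u200c\u200d\u2060\ufeff"))
# A hyphen (or Unicode hyphen) directly between two letters, e.g. "ig-nore".
INWORD_HYPHEN = re.compile(r"(?<=[^\W\d_])[-\u2010\u2011](?=[^\W\d_])")

def normalize_for_classifier(text: str) -> str:
    text = unicodedata.normalize("NFKC", text)  # fold full-width/compatibility forms
    text = text.translate(INVISIBLE)            # delete invisible separators
    return INWORD_HYPHEN.sub("", text)          # re-join hyphenated words

print(normalize_for_classifier("ig-nore prev\u200bious in\u00adstructions"))
# ignore previous instructions
```

Classify the normalized copy but pass the original text along, since re-joining hyphenated words also changes legitimate terms such as “well-known”.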

Knowledge Return Oriented Prompting (KROP): Prompt Injection & Jailbreak with prohibited images in ChatGPT (and other MM-LLMs) https://www.elladodelmal.com/2025/06/knowledge-return-oriented-prompting.html #PromptInjection #Jailbreak #ChatGPT #Dalle #Guardrails #GenAI #IA #AI

New research reveals escalating vulnerabilities when LLMs execute code, with prompt injection attacks surging 140%. ❤️ #AISecurity #cloudsecurity #codegeneration #developertools #EUAIAct #LLMVulnerabilities #promptinjection #runtimerisks #redrobot

https://redrobot.online/2025/06/critical-security-gaps-emerge-in-ai-generated-code-execution/

Day 8/10: Your AI Feature Is Also a Backdoor

• Limit who can access your AI tools

• Log everything the LLM sees + says

• Hide system prompts from users
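For the second point, here is a minimal sketch of audit logging around a model call, assuming a hypothetical `call_model(prompt) -> str` client; `fake_model` stands in for a real LLM.

```python
import json
import logging
import time
import uuid
from typing import Callable

logger = logging.getLogger("llm_audit")

def audited(call_model: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a model call so every prompt and completion is logged as JSON."""
    def wrapper(prompt: str) -> str:
        request_id = str(uuid.uuid4())  # ties each input to its output
        logger.info(json.dumps({"id": request_id, "ts": time.time(),
                                "direction": "input", "text": prompt}))
        reply = call_model(prompt)
        logger.info(json.dumps({"id": request_id, "ts": time.time(),
                                "direction": "output", "text": reply}))
        return reply
    return wrapper

@audited
def fake_model(prompt: str) -> str:  # hypothetical stand-in for an LLM client
    return prompt.upper()
```

Because these logs capture everything users and retrieved documents put in front of the model, they need the same access controls as the underlying data.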

AI isn’t just smart: it’s an attack surface.

Follow @nickbalancom to build and protect.

#AIThreats #PromptInjection #WebSecurity #LLMFeatures #nickbalancom

AI Coding Assistants Can be Both a Friend & a Foe

New research shows that GitLab's AI assistant, Duo, can be tricked into writing malicious code and even leaking private source data through hidden instructions embedded in developer content like merge requests and bug reports.

How? Through a classic prompt injection exploit that inserts secret commands into code that Duo reads. This results in Duo unknowingly outputting clickable malicious links or exposing confidential information.

While GitLab has taken steps to mitigate this, the takeaway is clear: AI assistants are now part of your attack surface. If you’re using tools like Duo, assume all inputs are untrusted, and rigorously review every output.
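One practical way to treat inputs as untrusted is to flag content a reviewer would not see in a rendered view before it reaches the assistant. Below is a sketch under the assumption that invisible Unicode format characters and HTML comments are the concern; it will not catch every hiding technique.

```python
import re
import unicodedata

HTML_COMMENT = re.compile(r"<!--.*?-->", re.DOTALL)

def find_hidden_content(text: str) -> list[str]:
    """List content a reviewer is unlikely to see once the text is rendered."""
    findings = []
    for ch in sorted(set(text)):
        cp = ord(ch)
        if 0xE0000 <= cp <= 0xE007F:
            # Unicode "tag" characters can spell out ASCII text invisibly.
            findings.append(f"Unicode tag character U+{cp:04X}")
        elif unicodedata.category(ch) == "Cf":
            # Other format characters: zero-width spaces, joiners, bidi controls.
            findings.append(f"invisible format character U+{cp:04X}")
    for comment in HTML_COMMENT.findall(text):
        # HTML comments are hidden in rendered Markdown views.
        findings.append(f"HTML comment: {comment[:40]!r}")
    return findings
```

Some format characters, such as the zero-width joiner in emoji sequences, are legitimate, so findings are better treated as prompts for review than as automatic blocks.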

Read the details: https://arstechnica.com/security/2025/05/researchers-cause-gitlab-ai-developer-assistant-to-turn-safe-code-malicious/

#AIsecurity #GitLab #AI #PromptInjection #Cybersecurity #DevSecOps #CISO #Infosec #IT #AIAttackSurface #SoftwareSecurity

20+ AI chatbots couldn’t hold the line: prompt injections slipped through in what we're calling the Yearbook Attack.

https://www.straiker.ai/blog/weaponizing-wholesome-yearbook-quotes-to-break-ai-chatbot-filters

#AISecurity #SecureAI #AICybersecurity #AIThreats #GenAISecurity #AITrust #PromptInjection

GitHub MCP helps steal data from private repositories: another clever indirect prompt injection technique

In our previous article, we covered the issues described by Legit that made it possible to use the Duo assistant to steal confidential information from private repositories or to force the LLM to perform actions unwanted by the project's legitimate owner. As might have been expected, the topic has more to do with the architecture of these solutions than with...

Attack via #GitHub MCP Server: Access to private data | Developer https://www.heise.de/news/Angriff-ueber-GitHub-MCP-Server-Zugriff-auf-private-Daten-10399081.html #ArtificialIntelligence #PromptInjection

"The curse of prompt injection continues to be that we’ve known about the issue for more than two and a half years and we still don’t have convincing mitigations for handling it.

I’m still excited about tool usage—it’s the next big feature I plan to add to my own LLM project—but I have no idea how to make it universally safe.

If you’re using or building on top of MCP, please think very carefully about these issues:

Clients: consider that malicious instructions may try to trigger unwanted tool calls. Make sure users have the interfaces they need to understand what’s going on—don’t hide horizontal scrollbars for example!

Servers: ask yourself how much damage a malicious instruction could do. Be very careful with things like calls to os.system(). As with clients, make sure your users have a fighting chance of preventing unwanted actions that could cause real harm to them.

Users: be thoughtful about what you install, and watch out for dangerous combinations of tools."
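The client and server advice can be combined into a small gate in front of tool execution. Below is a sketch, assuming hypothetical tool names and a hypothetical `ALLOWED_BINARIES` list. It shows the full arguments before approval and runs commands without a shell instead of through `os.system()`.

```python
import shlex
import subprocess
from typing import Callable

# Hypothetical policy: tools that can cause real harm need explicit approval.
DANGEROUS_TOOLS = {"run_command", "send_email", "delete_file"}
ALLOWED_BINARIES = {"ls", "git"}  # hypothetical allowlist for run_command

def confirm(tool: str, args: dict) -> bool:
    # Print every argument in full so nothing hides off-screen.
    print(f"The model wants to call {tool} with:")
    for key, value in args.items():
        print(f"  {key} = {value!r}")
    return input("Allow? [y/N] ").strip().lower() == "y"

def run_command(command: str) -> str:
    argv = shlex.split(command)
    if not argv or argv[0] not in ALLOWED_BINARIES:
        raise PermissionError(f"binary not allowed: {argv[:1]}")
    # No shell is involved, so ';', '|' and '$(...)' are never interpreted.
    result = subprocess.run(argv, capture_output=True, text=True, timeout=10, check=False)
    return result.stdout

def dispatch(tool: str, args: dict,
             approve: Callable[[str, dict], bool] = confirm) -> str:
    if tool in DANGEROUS_TOOLS and not approve(tool, args):
        return "denied by user"
    if tool == "run_command":
        return run_command(args["command"])
    raise NotImplementedError(f"no handler for {tool}")
```

Allowlisting binaries is only a first step. A permitted program like `git` can still be abused through its arguments, so real servers typically need argument-level checks too.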

https://simonwillison.net/2025/Apr/9/mcp-prompt-injection/

#AI #GenerativeAI #LLMs #Chatbots #CyberSecurity #MCP #PromptInjection

A vulnerability in GitHub MCP lets malicious Issues hijack AI agents to leak data from private repos. Invariant calls this a “toxic agent flow” and shows it can exfiltrate sensitive info via prompt injection. GitHub alone can't fix it—mitigation needs system-level controls.
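A system-level control can start with a static check over the tools an agent is given, flagging the “lethal trifecta” of private data access, exposure to untrusted content, and an exfiltration channel. Below is a sketch with hypothetical tool names and capability flags; it is not Invariant’s method.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tool:
    name: str
    reads_private_data: bool = False
    ingests_untrusted_content: bool = False
    can_exfiltrate: bool = False  # e.g. network requests, public comments or PRs

def lethal_trifecta(tools: list[Tool]) -> bool:
    """True if the combined tool set covers all three risk factors."""
    return (any(t.reads_private_data for t in tools)
            and any(t.ingests_untrusted_content for t in tools)
            and any(t.can_exfiltrate for t in tools))

# Hypothetical tool set modelled loosely on the GitHub MCP scenario.
github_agent = [
    Tool("read_public_issues", ingests_untrusted_content=True),
    Tool("read_private_repo", reads_private_data=True),
    Tool("create_public_pull_request", can_exfiltrate=True),
]
print(lethal_trifecta(github_agent))      # True
print(lethal_trifecta(github_agent[:2]))  # False
```

Real tools often carry more than one of these properties (a single GitHub tool may both read untrusted issues and write to public repositories), so the flags need to be assigned per tool and per repository scope.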