The New York Times sues Perplexity for producing ‘verbatim’ copies of its work – The Verge

Credit: NYT Times, gettyimages-2249036304


The NYT alleges Perplexity ‘unlawfully crawls, scrapes, copies, and distributes’ work from its website.

By Emma Roth, Dec 5, 2025, 7:42 AM PST

Emma Roth is a news writer who covers the streaming wars, consumer tech, crypto, social media, and much more. Previously, she was a writer and editor at MUO.

The New York Times has escalated its legal battle against the AI startup Perplexity, as it’s now suing the AI “answer engine” for allegedly producing and profiting from responses that are “verbatim or substantially similar copies” of the publication’s work.

The lawsuit, filed in a New York federal court on Friday, claims Perplexity “unlawfully crawls, scrapes, copies, and distributes” content from the NYT. It comes after the outlet’s repeated demands for Perplexity to stop using content from its website, as the NYT sent cease-and-desist notices to the AI startup last year and most recently in July, according to the lawsuit. The Chicago Tribune also filed a copyright lawsuit against Perplexity on Thursday.

The New York Times sued OpenAI for copyright infringement in December 2023, and later inked a deal with Amazon, bringing its content to products like Alexa.

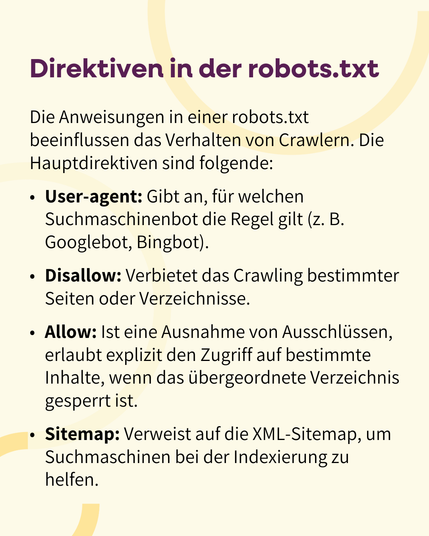

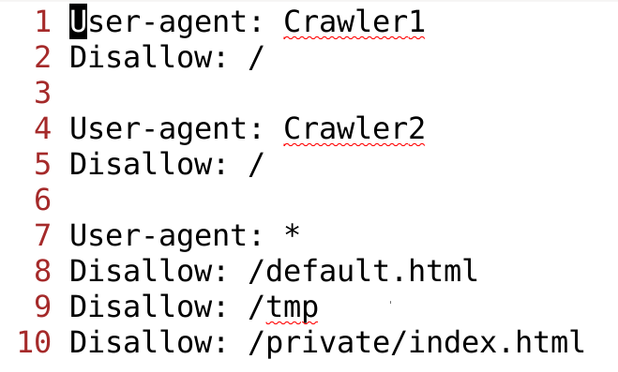

Perplexity became the subject of several lawsuits after reporting from Forbes and Wired revealed that the startup had been skirting websites’ paywalls to provide AI-generated summaries, and in some cases copies, of their work. The NYT makes similar accusations in its lawsuit, stating that Perplexity’s crawlers “have intentionally ignored or evaded technical content protection measures,” such as the robots.txt file, which indicates the parts of a website crawlers can access.
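For readers unfamiliar with robots.txt, here is a minimal sketch of how a well-behaved crawler might consult the file before fetching a page, using Python's standard `urllib.robotparser` module. The rules, the `ExampleBot` and `OtherBot` names, and the `example.com` URLs are all hypothetical and invented for illustration; they are not taken from the NYT's actual robots.txt or from the lawsuit.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, invented for illustration only.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)

# ExampleBot is barred from the whole site by "Disallow: /".
blocked_bot = is_allowed(parser, "ExampleBot", "https://example.com/articles/1")

# Any other crawler falls under the "*" group: articles are open, /private/ is not.
other_article = is_allowed(parser, "OtherBot", "https://example.com/articles/1")
other_private = is_allowed(parser, "OtherBot", "https://example.com/private/notes")

print(blocked_bot, other_article, other_private)
```

Note that the file is only a set of published instructions: nothing in it technically stops a crawler that skips the check, which is why the lawsuit frames the alleged behavior as ignoring or evading the measure.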

Perplexity attempted to smooth things over by launching a program to share ad revenue with publishers last year, which it later expanded to include its Comet web browser in August.


“By copying The Times’s copyrighted content and creating substitutive output derived from its works, obviating the need for users to visit The Times’s website or purchase its newspaper, Perplexity is misappropriating substantial subscription, advertising, licensing, and affiliate revenue opportunities that belong rightfully and exclusively to The Times,” the lawsuit states.

